e698b32984f3fea15ef6827a9279cb346fc9227b,slm_lab/agent/algorithm/sac.py,SoftActorCritic,train,#SoftActorCritic#,118

Before Change

if self.to_train == 1:

    batch = self.sample()

    clock.set_batch_size(len(batch))

    pdparams, v_preds = self.calc_pdparam_v(batch)

    advs, v_targets = self.calc_advs_v_targets(batch, v_preds)

    policy_loss = self.calc_policy_loss(batch, pdparams, advs)  # from actor

After Change

# forward passes for losses

states = batch["states"]

actions = batch["actions"]

v_preds = self.calc_v(states, net=self.critic_net)

q1_preds = self.calc_q(states, actions, self.q1_net)

q2_preds = self.calc_q(states, actions, self.q2_net)

pdparams = self.calc_pdparam(states)

action_pd = policy_util.init_action_pd(self.body.ActionPD, pdparams)
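The change above replaces one combined call (`calc_pdparam_v`) with a separate forward pass per network. A minimal sketch of that shape follows, in plain Python so it runs without the project installed. Everything here is an assumption for illustration: `make_linear` is a hypothetical stand-in for a real network, the dimensions and batch values are made up, and concatenating each state with its action inside `calc_q` is assumed rather than taken from the snippet.

```python
import random


def make_linear(in_dim, seed):
    """Hypothetical stand-in for a network: a fixed random linear map to a scalar."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(in_dim)]

    def forward(rows):
        # one scalar output per input row
        return [sum(w * x for w, x in zip(weights, row)) for row in rows]

    return forward


STATE_DIM, ACTION_DIM = 3, 2  # illustrative sizes, not from the source
critic_net = make_linear(STATE_DIM, seed=0)           # plays the role of self.critic_net
q1_net = make_linear(STATE_DIM + ACTION_DIM, seed=1)  # plays the role of self.q1_net
q2_net = make_linear(STATE_DIM + ACTION_DIM, seed=2)  # plays the role of self.q2_net


def calc_v(states, net):
    """State-value prediction: forward the states through the given net."""
    return net(states)


def calc_q(states, actions, net):
    """Action-value prediction; assumes the net takes state and action concatenated."""
    return net([s + a for s, a in zip(states, actions)])


# made-up batch with two transitions
batch = {
    "states": [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]],
    "actions": [[1.0, 0.0], [0.0, 1.0]],
}

# forward passes for losses, one per network
states = batch["states"]
actions = batch["actions"]
v_preds = calc_v(states, net=critic_net)
q1_preds = calc_q(states, actions, q1_net)
q2_preds = calc_q(states, actions, q2_net)
```

Each prediction list has one entry per transition in the batch, so the later loss terms can be computed per network without re-deriving values from a shared output.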

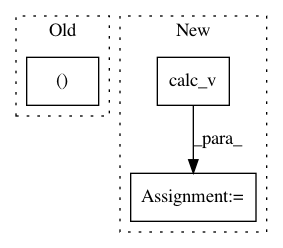

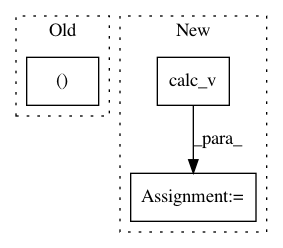

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: kengz/SLM-Lab

Commit Name: e698b32984f3fea15ef6827a9279cb346fc9227b

Time: 2019-07-31

Author: kengzwl@gmail.com

File Name: slm_lab/agent/algorithm/sac.py

Class Name: SoftActorCritic

Method Name: train

Project Name: kengz/SLM-Lab

Commit Name: bdf66a650ea78167fb50f7b72cc75338d5f2bc87

Time: 2020-03-20

Author: kengzwl@gmail.com

File Name: slm_lab/agent/algorithm/ppo.py

Class Name: PPO

Method Name: train

Project Name: kengz/SLM-Lab

Commit Name: 51975a8639d0b83544ec2f932567656b25bfc965

Time: 2018-09-02

Author: lgraesser@users.noreply.github.com

File Name: slm_lab/agent/algorithm/actor_critic.py

Class Name: ActorCritic

Method Name: calc_nstep_advs_v_targets