7c6e84bbf65bcbade78e78d6120ca0997dff7c28,examples/pendulum_sac.py,,experiment,#Any#Any#Any#Any#,67

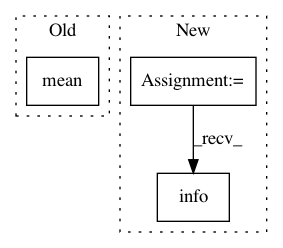

Before Change

dataset = core.evaluate(n_steps=n_steps_test, render=False)

J = compute_J(dataset, gamma)

s, *_ = parse_dataset(dataset)

print("J:", np.mean(J), "E:", agent.policy.entropy(s))

print("Press a button to visualize pendulum")

input()
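
The snippet above reports `np.mean(J)`, where `compute_J(dataset, gamma)` yields a discounted return per episode. As a rough illustration of that quantity only, the sketch below computes a discounted return for one episode's reward list. The function name `discounted_return` and the plain-list input are assumptions made for this example; it is not the library's implementation.

```python
def discounted_return(rewards, gamma):
    """Return sum over t of gamma**t * rewards[t] for a single episode."""
    total = 0.0
    discount = 1.0  # gamma**t, starting at t = 0
    for r in rewards:
        total += discount * r
        discount *= gamma
    return total


if __name__ == "__main__":
    # Three unit rewards with gamma = 0.5: 1 + 0.5 + 0.25
    print(discounted_return([1.0, 1.0, 1.0], 0.5))
```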

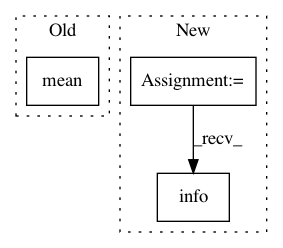

After Change

def experiment(alg, n_epochs, n_steps, n_steps_test):

    np.random.seed()

    logger = Logger(alg.__name__, results_dir=None)

    logger.strong_line()

    logger.info("Experiment Algorithm: " + alg.__name__)

    # MDP

    horizon = 200

    gamma = 0.99
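
The change replaces ad hoc `print` reporting with calls on a logger object. The same refactoring pattern can be sketched with Python's standard `logging` module, which is swapped in here so the example stays self-contained. The helper names `make_logger` and `report_epoch` and the message format are hypothetical and do not reflect the project's `Logger` API.

```python
import logging


def make_logger(name):
    """Create (or reuse) a named logger that writes INFO messages to stderr."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(
            logging.Formatter("%(asctime)s [%(levelname)s] %(message)s")
        )
        logger.addHandler(handler)
    return logger


def report_epoch(logger, epoch, mean_return, entropy):
    """Log one evaluation summary instead of printing it; return the message."""
    message = f"epoch={epoch} J={mean_return:.4f} E={entropy:.4f}"
    logger.info(message)
    return message


if __name__ == "__main__":
    log = make_logger("SAC-sketch")
    log.info("Experiment Algorithm: SAC-sketch")
    report_epoch(log, 0, -1234.5, 1.25)
```

Routing the output through one logger object keeps the reporting format in a single place, so it can later be redirected to a file without touching the experiment loop.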

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: AIRLab-POLIMI/mushroom

Commit Name: 7c6e84bbf65bcbade78e78d6120ca0997dff7c28

Time: 2021-01-11

Author: boris.ilpossente@hotmail.it

File Name: examples/pendulum_sac.py

Class Name:

Method Name: experiment

Project Name: AIRLab-POLIMI/mushroom

Commit Name: 7c6e84bbf65bcbade78e78d6120ca0997dff7c28

Time: 2021-01-11

Author: boris.ilpossente@hotmail.it

File Name: examples/pendulum_ddpg.py

Class Name:

Method Name: experiment

Project Name: facebookresearch/Horizon

Commit Name: e3fcbb639e115e8afe9600bd06aee81acfda6704

Time: 2020-10-13

Author: czxttkl@fb.com

File Name: reagent/training/world_model/seq2reward_trainer.py

Class Name: Seq2RewardTrainer

Method Name: train