2c0f1b2d13e090f3ca1b23254cfe0391f3f3894e,tf_agents/agents/behavioral_cloning/behavioral_cloning_agent.py,BehavioralCloningAgent,_train,#BehavioralCloningAgent#Any#Any#,214

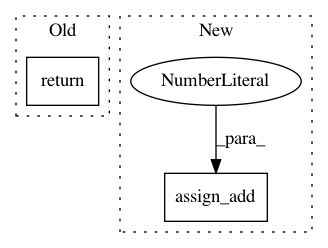

Before Change

variables_to_train=lambda: self._cloning_network.trainable_weights,

)

return loss_info

@eager_utils.future_in_eager_mode

# TODO(b/79688437): Figure out how to enable defun for Eager mode.

# @tfe.defun

After Change

self._apply_loss(aggregated_losses, self._cloning_network.trainable_weights,

tape, self._optimizer)

self.train_step_counter.assign_add(1)

return tf_agent.LossInfo(aggregated_losses.total_loss,

BehavioralCloningLossInfo(per_example_loss))
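
The after-change lines follow a recognizable explicit train-step shape: apply the loss gradients to the trainable weights, increment the step counter, then return a loss-info tuple that carries the total loss and the per-example losses. The following is a minimal, dependency-free sketch of that shape only. It is not the TF-Agents implementation: `TinyCloner`, the scalar weight `w`, the hand-derived gradient, and this `LossInfo` namedtuple are hypothetical stand-ins for the cloning network, the gradient tape, the optimizer, and `tf_agent.LossInfo`.

```python
from collections import namedtuple

# Hypothetical stand-in for a loss-info tuple; not the TF-Agents type.
LossInfo = namedtuple("LossInfo", ["loss", "extra"])


class TinyCloner:
    """Fits y = w * x by gradient descent, one explicit step per call."""

    def __init__(self, learning_rate=0.1):
        self.w = 0.0                  # single trainable weight (assumption)
        self.learning_rate = learning_rate
        self.train_step_counter = 0   # mirrors the counter in the snippet

    def train(self, xs, ys):
        # Per-example squared error, and its mean as the total loss.
        per_example_loss = [(self.w * x - y) ** 2 for x, y in zip(xs, ys)]
        total_loss = sum(per_example_loss) / len(xs)

        # Hand-derived gradient of the mean squared error w.r.t. w
        # (stands in for what a gradient tape would compute).
        grad = sum(2 * (self.w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

        # Apply the update explicitly, then count the step.
        self.w -= self.learning_rate * grad
        self.train_step_counter += 1

        # Return the total loss plus per-example detail.
        return LossInfo(total_loss, per_example_loss)


agent = TinyCloner()
first = agent.train([1.0, 2.0], [2.0, 4.0])
second = agent.train([1.0, 2.0], [2.0, 4.0])
```

Each call to `train` performs exactly one update and one counter increment, so the caller sees the step count and the loss value stay in sync.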

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 2

Instances

Project Name: tensorflow/agents

Commit Name: 2c0f1b2d13e090f3ca1b23254cfe0391f3f3894e

Time: 2020-08-17

Author: oars@google.com

File Name: tf_agents/agents/behavioral_cloning/behavioral_cloning_agent.py

Class Name: BehavioralCloningAgent

Method Name: _train

Project Name: asyml/texar

Commit Name: cf0cb3360a77041187c655891da4ddffbe4b13dd

Time: 2018-11-27

Author: haoranshi97@gmail.com

File Name: texar/core/optimization.py

Class Name: AdamWeightDecayOptimizer

Method Name: apply_gradients

Project Name: IndicoDataSolutions/finetune

Commit Name: af67a635b107852398e0cf4abdb5cde96f33f499

Time: 2018-09-21

Author: benlt@hotmail.co.uk

File Name: finetune/optimizers.py

Class Name:

Method Name: AdamWeightDecay