83f0a576015953b30bf8a05fc0033983e0d07354,official/nlp/bert_models.py,,pretrain_model,#Any#Any#Any#Any#,260

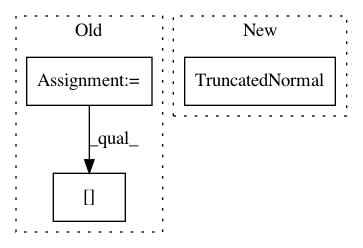

Before Change

masked_lm_ids = tf.keras.layers.Input(
    shape=(max_predictions_per_seq,), name="masked_lm_ids", dtype=tf.int32)

bert_submodel_name = "bert_model"
bert_submodel = modeling.get_bert_model(
    input_word_ids,
    input_mask,
    input_type_ids,
    name=bert_submodel_name,
    config=bert_config)
pooled_output = bert_submodel.outputs[0]
sequence_output = bert_submodel.outputs[1]

pretrain_layer = BertPretrainLayer(
    bert_config,
    bert_submodel.get_layer(bert_submodel_name),

After Change

transformer_encoder = _get_transformer_encoder(bert_config, seq_length)
if initializer is None:
    initializer = tf.keras.initializers.TruncatedNormal(
        stddev=bert_config.initializer_range)
pretrainer_model = bert_pretrainer.BertPretrainer(
    network=transformer_encoder,
    num_classes=2,  # The next sentence prediction label has two classes.
    num_token_predictions=max_predictions_per_seq,

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: tensorflow/models

Commit Name: 83f0a576015953b30bf8a05fc0033983e0d07354

Time: 2019-11-22

Author: chendouble@google.com

File Name: official/nlp/bert_models.py

Class Name:

Method Name: pretrain_model

Project Name: pyprob/pyprob

Commit Name: 0cc04fcc2df0b2005de354a602c9a16821fa4b2f

Time: 2018-04-08

Author: atilimgunes.baydin@gmail.com

File Name: pyprob/nn.py

Class Name: ProposalPoisson

Method Name: forward

Project Name: tensorflow/models

Commit Name: f1d35b4ee525e4a2ef434414268eb050de81da7b

Time: 2019-11-11

Author: hongkuny@google.com

File Name: official/nlp/bert_models.py

Class Name:

Method Name: classifier_model
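
The shape of the "After Change" code for `pretrain_model` can be sketched without TensorFlow. It shows two things: an `initializer` argument that falls back to a truncated normal built from the config when the caller passes `None`, and an encoder handed whole to a pretrainer wrapper as `network`. Everything in this sketch is a hypothetical stand-in, not the tensorflow/models API: `Config`, `Encoder`, `Pretrainer`, `truncated_normal`, and `build_pretrainer` are illustrative names. Passing `initializer` on to the wrapper is also an assumption, since the original snippet is cut off before that point.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Config:
    # Hypothetical stand-in for bert_config; only the field used below.
    initializer_range: float = 0.02


def truncated_normal(stddev: float, seed: int = 0) -> Callable[[], float]:
    """Return a sampler that redraws until the value lies within 2 * stddev."""
    rng = random.Random(seed)

    def sample() -> float:
        while True:
            value = rng.gauss(0.0, stddev)
            if abs(value) <= 2.0 * stddev:
                return value

    return sample


class Encoder:
    """Hypothetical stand-in for the transformer encoder network."""


@dataclass
class Pretrainer:
    """Hypothetical wrapper that receives the encoder as a single object."""
    network: Encoder
    num_classes: int
    num_token_predictions: int
    initializer: Callable[[], float]  # assumed argument, not shown in the source


def build_pretrainer(
    config: Config,
    max_predictions_per_seq: int,
    initializer: Optional[Callable[[], float]] = None,
) -> Pretrainer:
    encoder = Encoder()
    # Resolve the default only when the caller did not supply an initializer.
    if initializer is None:
        initializer = truncated_normal(stddev=config.initializer_range)
    return Pretrainer(
        network=encoder,
        num_classes=2,  # Next sentence prediction has two classes.
        num_token_predictions=max_predictions_per_seq,
        initializer=initializer,
    )
```

Calling `build_pretrainer(Config(), max_predictions_per_seq=20)` uses the config-derived default, while passing an explicit `initializer` bypasses it.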