2d5e7263d01768edb91164b2918707f37b9b8568,tests/layers/test_attention.py,TestAttention,test_sample,#TestAttention#,9

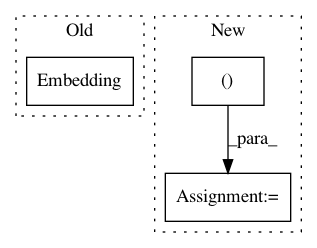

Before Change

    def test_sample(self):
        model = keras.models.Sequential()
        model.add(keras.layers.Embedding(
            input_dim=4,
            output_dim=5,
            mask_zero=True,
            weights=[
                np.asarray([
                    [0.1, 0.2, 0.3, 0.4, 0.5],
                    [0.2, 0.3, 0.4, 0.6, 0.5],
                    [0.4, 0.7, 0.2, 0.6, 0.9],
                    [0.3, 0.5, 0.8, 0.9, 0.1],
                ]),
            ],
            name="Embedding",
        ))
        model.add(Attention(name="Attention"))
        model.compile(
            optimizer="adam",

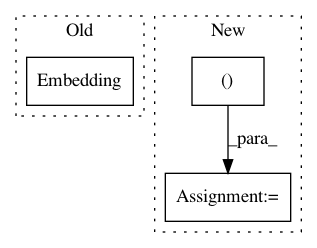

After Change

    def test_sample(self):
        input_layer = keras.layers.Input(
            shape=(5,),
            name="Input",
        )
        embed_layer = keras.layers.Embedding(
            input_dim=4,
            output_dim=5,
            mask_zero=True,
            weights=[
                np.asarray([
                    [0.1, 0.2, 0.3, 0.4, 0.5],
                    [0.2, 0.3, 0.4, 0.6, 0.5],
                    [0.4, 0.7, 0.2, 0.6, 0.9],
                    [0.3, 0.5, 0.8, 0.9, 0.1],
                ]),
            ],
            name="Embedding",
        )(input_layer)
        att_layer = Attention(
            name="Attention",
        )([embed_layer, embed_layer, embed_layer])
        model = keras.models.Model(inputs=input_layer, outputs=att_layer)
        model.compile(
            optimizer="adam",
            loss="mse",
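
The change replaces a Sequential stack, where each layer receives exactly one input, with a functional graph in which the Attention layer is called on a list of three tensors. A rough plain-Python sketch of why that rewiring is needed might look like the following. It is not Keras code: `embed`, `toy_attention`, and `ToySequential` are hypothetical stand-ins, and treating the three list entries as query, key, and value with unmasked scaled dot-product attention is an assumption that the snippet itself does not confirm.

```python
import math

# Embedding table copied from the test above: 4 token ids, 5 features each.
EMBEDDINGS = [
    [0.1, 0.2, 0.3, 0.4, 0.5],
    [0.2, 0.3, 0.4, 0.6, 0.5],
    [0.4, 0.7, 0.2, 0.6, 0.9],
    [0.3, 0.5, 0.8, 0.9, 0.1],
]


def embed(token_ids):
    """Toy stand-in for the Embedding layer: one table row per token id."""
    return [EMBEDDINGS[i] for i in token_ids]


def toy_attention(inputs):
    """Hypothetical stand-in for a layer called on a list of three inputs.

    Assumes the list is [query, key, value] and applies scaled dot-product
    attention without masking; the real Attention layer may behave differently.
    """
    if not (isinstance(inputs, list) and len(inputs) == 3):
        raise ValueError("expected a list of three inputs")
    query, key, value = inputs
    scale = math.sqrt(len(query[0]))
    output = []
    for q in query:
        # Similarity of this query row to every key row.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in key]
        # Numerically stable softmax over the scores.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted average of the value rows.
        output.append([
            sum(w * v[j] for w, v in zip(weights, value))
            for j in range(len(value[0]))
        ])
    return output


class ToySequential:
    """Chains layers so that each one receives the previous single output."""

    def __init__(self):
        self.layers = []

    def add(self, layer):
        self.layers.append(layer)

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


tokens = [1, 2, 3, 0, 0]  # five token ids, matching shape=(5,)

# Functional style: the same embedded sequence is wired in three times.
embedded = embed(tokens)
attended = toy_attention([embedded, embedded, embedded])

# Sequential style: the stack forwards one value, so the three-input call fails.
stack = ToySequential()
stack.add(embed)
stack.add(toy_attention)
try:
    stack(tokens)
    sequential_ok = True
except ValueError:
    sequential_ok = False
```

In this sketch the sequential stack cannot supply the three-element list, while the functional wiring passes the same embedded sequence explicitly for each role, which mirrors the `[embed_layer, embed_layer, embed_layer]` call in the updated test.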

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: CyberZHG/keras-bert

Commit Name: 2d5e7263d01768edb91164b2918707f37b9b8568

Time: 2018-11-01

Author: CyberZHG@gmail.com

File Name: tests/layers/test_attention.py

Class Name: TestAttention

Method Name: test_sample

Project Name: eriklindernoren/PyTorch-GAN

Commit Name: a4697d7e45e66a3264eb56dcf489d67d4df40d23

Time: 2018-04-23

Author: eriklindernoren@gmail.com

File Name: implementations/cgan/cgan.py

Class Name: Discriminator

Method Name: forward

Project Name: philipperemy/keras-tcn

Commit Name: 0cfe82c6beb9a28a5ff7da81b86fa0e93c388f14

Time: 2019-11-20

Author: premy@cogent.co.jp

File Name: tasks/save_reload_model.py

Class Name:

Method Name: