f5e8cbc21c5a78e2c2996e256f0f2bd5b6930f90,src/garage/tf/algos/reps.py,REPS,__init__,#REPS#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,56

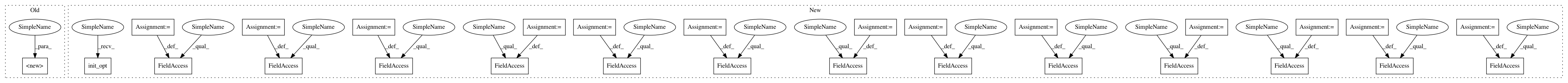

Before Change

```python
        self._l2_reg_dual = float(l2_reg_dual)
        self._l2_reg_loss = float(l2_reg_loss)
        super(REPS, self).__init__(env_spec=env_spec,
                                   policy=policy,
                                   baseline=baseline,
                                   max_path_length=max_path_length,
                                   discount=discount,
                                   gae_lambda=gae_lambda,
                                   center_adv=center_adv,
                                   positive_adv=positive_adv,
                                   fixed_horizon=fixed_horizon)

    def init_opt(self):
        """Initialize the optimization procedure."""
        pol_loss_inputs, pol_opt_inputs, dual_opt_inputs = self._build_inputs()
```
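The "Before Change" shape can be sketched in isolation. In this minimal, hypothetical example (`BaseAlgo` and `Reps` are illustrative stand-ins, not garage classes), the subclass stores its own settings and forwards the shared hyperparameters to a base-class constructor. It assumes the base class calls `init_opt()` at the end of its constructor. The excerpt does not show this, but it would explain why the "After Change" version calls `self.init_opt()` explicitly. Under that assumption, subclass attributes such as `_epsilon` must be set before the `super()` call.

```python
class BaseAlgo:
    """Hypothetical base class that owns the shared hyperparameters."""

    def __init__(self, env_spec, policy, discount=0.99, max_path_length=100):
        self.env_spec = env_spec
        self.policy = policy
        self.discount = discount
        self.max_path_length = max_path_length
        # Assumption: the base class sets up optimization once state exists.
        self.init_opt()

    def init_opt(self):
        raise NotImplementedError


class Reps(BaseAlgo):
    """Hypothetical subclass: keeps its own settings, delegates the rest."""

    def __init__(self, env_spec, policy, discount=0.99, max_path_length=100,
                 epsilon=0.5):
        # Must run before super().__init__, because the base constructor
        # calls init_opt(), which reads this attribute.
        self._epsilon = float(epsilon)
        super().__init__(env_spec=env_spec,
                         policy=policy,
                         discount=discount,
                         max_path_length=max_path_length)

    def init_opt(self):
        # Placeholder for building the dual and policy optimizers.
        self.dual_bound = self._epsilon
```

For example, `Reps(env_spec="spec", policy="pol", epsilon=1)` ends up with `dual_bound == 1.0`. Swapping the two statements in `Reps.__init__` would raise `AttributeError` inside `init_opt`.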

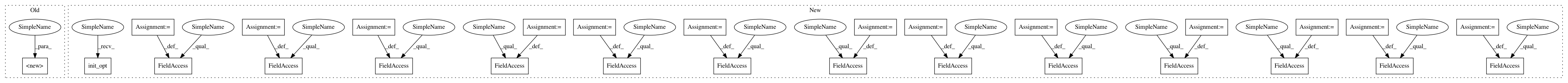

After Change

```python
        optimizer_args = optimizer_args or dict(max_opt_itr=50)
        dual_optimizer_args = dual_optimizer_args or dict(maxiter=50)

        self.policy = policy
        self.max_path_length = max_path_length

        self._env_spec = env_spec
        self._baseline = baseline
        self._discount = discount
        self._gae_lambda = gae_lambda
        self._center_adv = center_adv
        self._positive_adv = positive_adv
        self._fixed_horizon = fixed_horizon
        self._flatten_input = True

        self._name = name
        self._name_scope = tf.name_scope(self._name)
        self._old_policy = policy.clone("old_policy")
        self._feat_diff = None
        self._param_eta = None
        self._param_v = None
        self._f_dual = None
        self._f_dual_grad = None
        self._f_policy_kl = None

        self._optimizer = optimizer(**optimizer_args)
        self._dual_optimizer = dual_optimizer
        self._dual_optimizer_args = dual_optimizer_args
        self._epsilon = float(epsilon)
        self._l2_reg_dual = float(l2_reg_dual)
        self._l2_reg_loss = float(l2_reg_loss)

        self._episode_reward_mean = collections.deque(maxlen=100)

        if policy.vectorized:
            self.sampler_cls = OnPolicyVectorizedSampler
        else:
            self.sampler_cls = BatchSampler

        self.init_opt()

    def init_opt(self):
        """Initialize the optimization procedure."""
        pol_loss_inputs, pol_opt_inputs, dual_opt_inputs = self._build_inputs()
```
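The "After Change" shape can be sketched in isolation. This minimal sketch uses hypothetical stub classes (`StubPolicy`, `StubVectorizedSampler`, `StubBatchSampler`, `StandaloneReps`) in place of the real garage types. The constructor owns every attribute, fills `None` arguments with `or` defaults, picks the sampler class from `policy.vectorized`, keeps a 100-entry reward window in a `collections.deque`, and calls `init_opt()` itself. Unlike the excerpt, it stores the optimizer arguments instead of constructing an optimizer, and `init_opt` is only a placeholder.

```python
import collections


class StubVectorizedSampler:
    """Hypothetical stand-in for a vectorized on-policy sampler."""


class StubBatchSampler:
    """Hypothetical stand-in for a plain batch sampler."""


class StubPolicy:
    """Hypothetical policy exposing only the `vectorized` flag."""

    def __init__(self, vectorized):
        self.vectorized = vectorized


class StandaloneReps:
    """Hypothetical algorithm that owns its state instead of inheriting it."""

    def __init__(self, env_spec, policy, discount=0.99, epsilon=0.5,
                 optimizer_args=None, dual_optimizer_args=None):
        # `None` defaults plus `or` avoid sharing one mutable dict across
        # instances; note that an explicit empty dict is also replaced.
        optimizer_args = optimizer_args or dict(max_opt_itr=50)
        dual_optimizer_args = dual_optimizer_args or dict(maxiter=50)

        self.policy = policy
        self._env_spec = env_spec
        self._discount = discount
        self._epsilon = float(epsilon)
        self._optimizer_args = optimizer_args
        self._dual_optimizer_args = dual_optimizer_args
        # Bounded window: appending beyond 100 items drops the oldest.
        self._episode_reward_mean = collections.deque(maxlen=100)

        # The sampler type is chosen from the policy's capabilities.
        if policy.vectorized:
            self.sampler_cls = StubVectorizedSampler
        else:
            self.sampler_cls = StubBatchSampler

        # With no base class to do it, the constructor calls init_opt itself.
        self.init_opt()

    def init_opt(self):
        """Initialize the optimization procedure (placeholder)."""
        self.dual_bound = self._epsilon
```

The trade-off is explicitness over reuse. Each algorithm now repeats the attribute assignments, but its constructor no longer depends on the ordering rules and hidden `init_opt()` call of a base class.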

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 28

Instances

Project Name: rlworkgroup/garage

Commit Name: f5e8cbc21c5a78e2c2996e256f0f2bd5b6930f90

Time: 2020-06-03

Author: ericyihc@usc.edu

File Name: src/garage/tf/algos/reps.py

Class Name: REPS

Method Name: __init__

Project Name: rlworkgroup/garage

Commit Name: 8fa84b36cd3bce373d3fd09e7030933c01310215

Time: 2020-06-16

Author: 44849486+maliesa96@users.noreply.github.com

File Name: src/garage/tf/algos/ddpg.py

Class Name: DDPG

Method Name: __init__

Project Name: rlworkgroup/garage

Commit Name: 8fa84b36cd3bce373d3fd09e7030933c01310215

Time: 2020-06-16

Author: 44849486+maliesa96@users.noreply.github.com

File Name: src/garage/tf/algos/td3.py

Class Name: TD3

Method Name: __init__