75982d3d634fb358be6c4fea6fd8dd705db13d9e,a00_Bert/train_bert_multi-label.py,,main,#Any#,34

Before Change

loss, per_example_loss, logits, probabilities, model = create_model(bert_config, is_training, input_ids, input_mask,

segment_ids, label_ids, num_labels,use_one_hot_embeddings)

# define train operation

num_train_steps = int(float(num_examples) / float(FLAGS.batch_size * FLAGS.num_epochs));use_tpu=False

num_warmup_steps = int(num_train_steps * 0.1)

train_op = optimization.create_optimizer(loss, FLAGS.learning_rate, num_train_steps, num_warmup_steps, use_tpu)

After Change

# define train operation

# num_train_steps = int(float(num_examples) / float(FLAGS.batch_size * FLAGS.num_epochs)); use_tpu=False; num_warmup_steps = int(num_train_steps * 0.1)

# train_op = optimization.create_optimizer(loss, FLAGS.learning_rate, num_train_steps, num_warmup_steps, use_tpu)

global_step = tf.Variable(0, trainable=False, name="Global_Step")

train_op = tf.contrib.layers.optimize_loss(loss, global_step=global_step, learning_rate=FLAGS.learning_rate,optimizer="Adam", clip_gradients=3.0)

is_training_eval=False

loss_eval, per_example_loss_eval, logits_eval, probabilities_eval, model_eval = create_model(bert_config, is_training_eval, input_ids, input_mask,

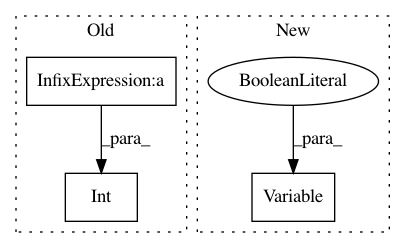

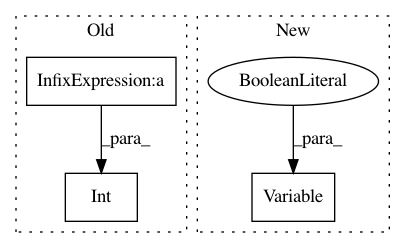
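
Note on the learning-rate behaviour of the two versions. This is a minimal plain-Python sketch, not code from the commit. It assumes the project's `optimization.create_optimizer` follows the reference BERT schedule (linear warmup to the initial rate, then linear decay to zero), and that `optimize_loss` keeps the base rate fixed when no decay function is passed. The helper names `warmup_linear_decay_lr` and `fixed_lr` and the numeric values are hypothetical. Gradient clipping and Adam's per-parameter scaling are left out.

```python
# Hypothetical helpers for illustration only; neither name appears in the commit.

def warmup_linear_decay_lr(step, init_lr, num_train_steps, num_warmup_steps):
    """Base learning rate with linear warmup followed by linear decay to zero.

    Assumed to mirror the schedule used by the Before Change code path.
    """
    if step < num_warmup_steps:
        # Warmup phase: scale the rate linearly from 0 up to init_lr.
        return init_lr * step / num_warmup_steps
    # Decay phase: fall linearly to 0 at num_train_steps, then stay at 0.
    step = min(step, num_train_steps)
    return init_lr * (1.0 - step / num_train_steps)


def fixed_lr(step, init_lr):
    """Base learning rate when no schedule is applied (After Change, as assumed)."""
    return init_lr


if __name__ == "__main__":
    init_lr = 1e-3            # assumed value, not taken from FLAGS
    num_train_steps = 1000    # assumed value
    num_warmup_steps = int(num_train_steps * 0.1)  # same 10% ratio as the diff
    for step in (0, 50, 100, 500, 1000):
        scheduled = warmup_linear_decay_lr(step, init_lr, num_train_steps, num_warmup_steps)
        print(step, scheduled, fixed_lr(step, init_lr))
```

Under these assumptions the After Change version no longer depends on `num_train_steps` or `num_warmup_steps`, which is consistent with those lines being commented out.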

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: brightmart/text_classification

Commit Name: 75982d3d634fb358be6c4fea6fd8dd705db13d9e

Time: 2018-11-21

Author: brightmart@hotmail.com

File Name: a00_Bert/train_bert_multi-label.py

Class Name:

Method Name: main

Project Name: OpenNMT/OpenNMT-py

Commit Name: d5a75ba09ae0595ca284fd806475eb8d3f771387

Time: 2017-01-17

Author: adam.paszke@gmail.com

File Name: word_language_model/generate.py

Class Name:

Method Name: