d264e82050700d9aaed31c11dbd65f9dbd03e4d9,snips_nlu/tokenization.py,,tokenize,#Any#,11

Before Change

def tokenize(string):
    return [Token(m.group(), m.start(), m.end())
            for m in TOKEN_REGEX.finditer(string)]

def tokenize_light(string):

After Change

def tokenize(string):
    return _tokenize(string, [WORD_REGEX, SYMBOL_REGEX])

def _tokenize(string, regexes):
    non_overlapping_tokens = []

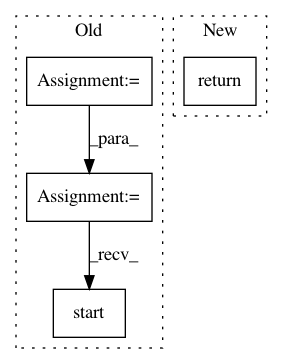

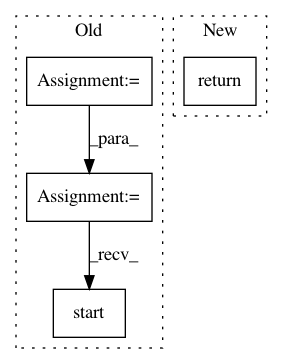

In pattern: SUPERPATTERN

Frequency: 5

Non-data size: 4

Instances

Project Name: snipsco/snips-nlu

Commit Name: d264e82050700d9aaed31c11dbd65f9dbd03e4d9

Time: 2017-04-25

Author: adrien.ball@snips.net

File Name: snips_nlu/tokenization.py

Class Name:

Method Name: tokenize

Project Name: uber/ludwig

Commit Name: 13eb6bf434f384c45ba1d92ad1dcda04fc6f0517

Time: 2020-08-22

Author: piero@uber.com

File Name: tests/integration_tests/test_collect.py

Class Name:

Method Name: _get_layers

Project Name: facebookresearch/pytext

Commit Name: fa0fea934bad76e9906c013e377ce80c77cbd5e4

Time: 2019-01-03

Author: geoffreygoh@fb.com

File Name: pytext/trainers/hogwild_trainer.py

Class Name: HogwildTrainer

Method Name: train

Project Name: streamlit/streamlit

Commit Name: c4d653ee7275f364b82d539bc58a9793248374eb

Time: 2019-06-05

Author: tconkling@gmail.com

File Name: lib/streamlit/ScriptRunner.py

Class Name: ScriptRunner

Method Name: request_rerun

Project Name: nipunsadvilkar/pySBD

Commit Name: 8917b3581159445332cafae96cf411b2cc825bdc

Time: 2020-06-09

Author: nipunsadvilkar@gmail.com

File Name: pysbd/processor.py

Class Name: Processor

Method Name: split_into_segments