4887ef8baecbf5315ec0f235e56a4f93cd05aad7,cleverhans/attacks_tf.py,,spm,#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,1947

Before Change

tf.argmax(preds_adv, axis=-1))))

// Return the adv_x with worst accuracy

adv_xs = tf.stack(adv_xs)

accs = tf.stack(accs)

return tf.gather(adv_xs, tf.argmin(accs))

After Change

return tf.nn.softmax_cross_entropy_with_logits_v2(

labels=y, logits=preds)

all_xents = tf.map_fn(

_compute_xent,

transformed_ims,

parallel_iterations=1) // Must be 1 to avoid keras race conditions

// Return the adv_x with worst accuracy

// all_xents is n_total_samples x batch_size (SB)

all_xents = tf.stack(all_xents) // SB

// We want the worst case sample, with the largest xent_loss

worst_sample_idx = tf.argmax(all_xents, axis=0) // B

batch_size = tf.shape(x)[0]

keys = tf.stack([

tf.range(batch_size, dtype=tf.int32),

tf.cast(worst_sample_idx, tf.int32)

], axis=1)

transformed_ims_bshwc = tf.einsum("sbhwc->bshwc", transformed_ims)

after_lookup = tf.gather_nd(transformed_ims_bshwc, keys) // BHWC

return after_lookup

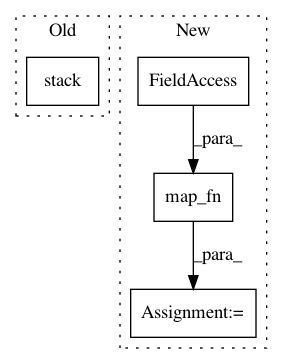

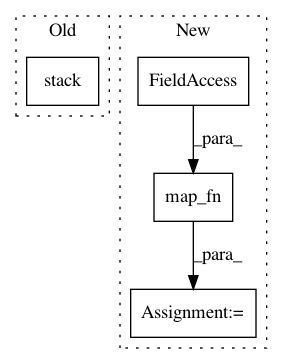

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: tensorflow/cleverhans

Commit Name: 4887ef8baecbf5315ec0f235e56a4f93cd05aad7

Time: 2018-10-04

Author: nottombrown@gmail.com

File Name: cleverhans/attacks_tf.py

Class Name:

Method Name: spm

Project Name: fgnt/nara_wpe

Commit Name: 4580d28f9cc8f846ee6fb42dda6909a98797fcd9

Time: 2018-05-16

Author: heymann@nt.upb.de

File Name: nara_wpe/tf_wpe.py

Class Name:

Method Name: wpe

Project Name: tensorflow/tpu

Commit Name: 838c6ccddbd5af98667d9585ee57e4610b361747

Time: 2018-10-18

Author: shizhiw@google.com

File Name: models/official/retinanet/retinanet_model.py

Class Name:

Method Name: add_metric_fn_inputs