6f6b0b30a04d95a26b297c219454bcd51f4f793e,rl_loop.py,,train,#Any#,71

Before Change

```python
try:
    save_file = os.path.join(fsdb.models_dir(), new_model_name)
    main.train(working_dir, [local_copy], save_file)
except:
    logging.exception("Train error")
finally:
    os.remove(local_copy)
```
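The Before Change wraps training in a bare `except:` with a `finally` cleanup, while the After Change catches `except Exception as e:` instead. A bare `except:` also catches `BaseException` subclasses such as `KeyboardInterrupt` and `SystemExit`, whereas `except Exception` lets them propagate. The sketch below shows that difference in isolation. `interrupted_step`, `run_with_bare_except` and `run_with_exception_clause` are hypothetical helpers, not minigo code, and `cleaned_up = True` merely stands in for `os.remove(local_copy)`.

```python
import logging


def interrupted_step():
    """Hypothetical training step interrupted as if by Ctrl-C."""
    raise KeyboardInterrupt


def run_with_bare_except(step):
    """Mirrors the Before Change shape: bare except plus a finally cleanup."""
    cleaned_up = False
    try:
        step()
    except:  # bare except, as in the Before Change snippet
        # Also catches KeyboardInterrupt/SystemExit, so the interrupt is swallowed.
        logging.exception("Train error")
    finally:
        # Stands in for os.remove(local_copy): runs on success or failure.
        cleaned_up = True
    return cleaned_up


def run_with_exception_clause(step):
    """Same shape, but `except Exception` lets KeyboardInterrupt propagate."""
    try:
        step()
    except Exception:
        logging.exception("Train error")


# The interrupt is logged and swallowed; the function returns normally.
swallowed = run_with_bare_except(interrupted_step)

try:
    run_with_exception_clause(interrupted_step)
    propagated = False
except KeyboardInterrupt:
    # KeyboardInterrupt is not a subclass of Exception, so it escapes.
    propagated = True

print(swallowed, propagated)  # True True
```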

After Change

```python
time.sleep(1*60)
print("Using Golden File:", training_file)
try:
    save_file = os.path.join(fsdb.models_dir(), new_model_name)
    print("Training model")
    dual_net.train(training_file)
    print("Exporting model to ", save_file)
    dual_net.export_model(working_dir, save_file)
except Exception as e:
    import traceback
    logging.error(traceback.format_exc())
    print(traceback.format_exc())
    logging.exception("Train error")
    sys.exit(1)


# train() ends above; validate() follows in rl_loop.py.
def validate(working_dir, model_num=None, validate_name=None):
    """Runs validate on the directories up to the most recent model, or up to
    (but not including) the model specified by `model_num`
    """
```
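The After Change logs the failure and then calls `sys.exit(1)`, so the process reports a non-zero exit status instead of continuing. The sketch below reproduces that idiom on its own, assuming a hypothetical `failing_train` in place of `dual_net.train`/`dual_net.export_model` and an in-memory log handler so the logged text can be inspected. It illustrates the pattern and is not the minigo implementation.

```python
import io
import logging
import sys


def train_or_exit(train_fn, logger):
    """Sketch of the After Change idiom: log the traceback, then exit non-zero."""
    try:
        train_fn()
    except Exception:
        # Logger.exception logs at ERROR level and appends the active traceback.
        logger.exception("Train error")
        sys.exit(1)  # raises SystemExit(1)


def failing_train():
    """Hypothetical stand-in for a training call that fails."""
    raise ValueError("bad golden file")


# Capture log output in memory instead of stderr.
stream = io.StringIO()
logger = logging.getLogger("rl_loop_sketch")
logger.addHandler(logging.StreamHandler(stream))
logger.propagate = False

exit_code = None
try:
    train_or_exit(failing_train, logger)
except SystemExit as exc:
    exit_code = exc.code

log_text = stream.getvalue()
print(exit_code)  # 1
print("ValueError: bad golden file" in log_text)  # True
```

Because `Logger.exception` already appends the traceback to its message, the separate `logging.error(traceback.format_exc())` call in the After Change records the same traceback a second time.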

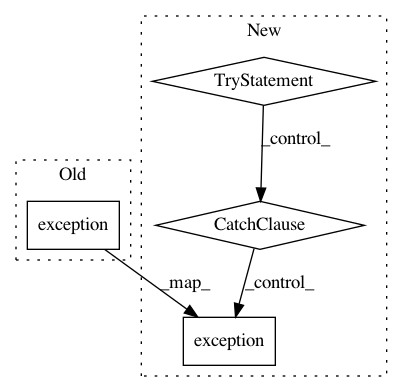

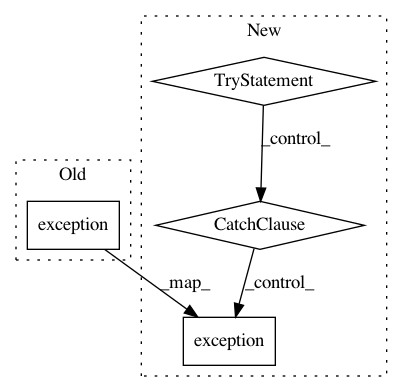

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| tensorflow/minigo | 6f6b0b30a04d95a26b297c219454bcd51f4f793e | 2018-07-15 | andrew.m.jackson@gmail.com | rl_loop.py | | train |
| GoogleCloudPlatform/PerfKitBenchmarker | 4fae5d5226787db843ca6ee7b79c4f6d92f84d1b | 2020-05-15 | ssabhaya@google.com | perfkitbenchmarker/scripts/object_storage_api_test_scripts/s3.py | S3Service | DeleteObjects |
| GoogleCloudPlatform/professional-services | 834df48ab4c91e2a3ee31439259d6968ba7bd68d | 2019-01-28 | pkattamuri@google.com | tools/hive-bigquery/hive_to_bigquery.py | | main |
| GoogleCloudPlatform/professional-services | 238be313a5f9ab1a90106e5330bb960c4c3ff2de | 2019-01-23 | pkattamuri@google.com | tools/hive-bigquery/hive_to_bigquery.py | | main |