1ff8c3da9838d71d3c5fbe91dcef50b160f5660c,cleverhans/future/jax/attacks/fast_gradient_method.py,,fast_gradient_method,#Any#Any#Any#Any#Any#Any#Any#Any#,8

Before Change

if ord == np.inf:
    perturbation = eps * np.sign(grads)
elif ord == 1:
    abs_grads = np.abs(grads)
    sign = np.sign(grads)
    max_abs_grads = np.amax(abs_grads, axis=axis, keepdims=True)
    tied_for_max = np.asarray(np.equal(abs_grads, max_abs_grads), dtype=np.float32)
    num_ties = np.sum(tied_for_max, axis=axis, keepdims=True)
    perturbation = sign * tied_for_max / num_ties
elif ord == 2:
    square = np.maximum(avoid_zero_div, np.sum(np.square(grads), axis=axis, keepdims=True))
    perturbation = grads / np.sqrt(square)
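
To make the removed `ord == 1` branch easier to follow, here is a minimal sketch of its tie-splitting logic in isolation. It assumes plain NumPy (the file path suggests the original `np` may be `jax.numpy`), and it assumes `axis` covers every non-batch dimension, since the snippet does not show where `axis` is defined. The function name `l1_perturbation_sketch` is hypothetical.

```python
import numpy as np


def l1_perturbation_sketch(grads):
    """Spread a unit L1 budget evenly over the largest-magnitude gradient entries.

    Hypothetical helper that mirrors the `ord == 1` branch of the Before snippet.
    """
    # Assumption: axis 0 is the batch axis and all remaining axes are reduced.
    axis = tuple(range(1, grads.ndim))

    abs_grads = np.abs(grads)
    sign = np.sign(grads)

    # Largest absolute gradient per example, kept broadcastable against grads.
    max_abs_grads = np.amax(abs_grads, axis=axis, keepdims=True)

    # 1.0 where an entry ties for the maximum, 0.0 elsewhere.
    tied_for_max = np.asarray(np.equal(abs_grads, max_abs_grads), dtype=np.float32)

    # Number of tied entries per example; at least 1, so the division is safe.
    num_ties = np.sum(tied_for_max, axis=axis, keepdims=True)

    return sign * tied_for_max / num_ties


example = l1_perturbation_sketch(np.array([[1.0, -3.0, 3.0]]))
```

For the input `[[1.0, -3.0, 3.0]]`, the two entries with magnitude 3 tie for the maximum, so each receives half of the budget with its own sign (`-0.5` and `0.5`), and the remaining entry receives `0`.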

After Change

if ord == np.inf:
    perturbation = eps * np.sign(grads)
elif ord == 1:
    raise NotImplementedError("L_1 norm has not been implemented yet.")
elif ord == 2:
    square = np.maximum(avoid_zero_div, np.sum(np.square(grads), axis=axis, keepdims=True))
    perturbation = grads / np.sqrt(square)
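
The After snippet can be exercised on its own with a small wrapper. The sketch below assumes plain NumPy, a hypothetical function name `perturbation_sketch`, a default of `1e-12` for `avoid_zero_div`, and an `axis` spanning all non-batch dimensions; none of these are shown in the record. It copies the branches as they appear above, including the fact that only the `np.inf` branch multiplies by `eps` within this excerpt.

```python
import numpy as np


def perturbation_sketch(grads, eps, ord, avoid_zero_div=1e-12):
    """Norm-dependent perturbation, following the branches of the After snippet.

    Hypothetical wrapper; the default for avoid_zero_div is an assumption.
    """
    # Assumption: axis 0 is the batch axis and all remaining axes are reduced.
    axis = tuple(range(1, grads.ndim))

    if ord == np.inf:
        # L-infinity: move every coordinate by eps in the gradient's direction.
        return eps * np.sign(grads)
    elif ord == 1:
        # The commit replaces the L1 computation with an explicit error.
        raise NotImplementedError("L_1 norm has not been implemented yet.")
    elif ord == 2:
        # L2: divide by the per-example gradient norm, guarded against zero.
        square = np.maximum(
            avoid_zero_div, np.sum(np.square(grads), axis=axis, keepdims=True)
        )
        # As in the excerpt, no eps scaling is applied in this branch.
        return grads / np.sqrt(square)
    # Not part of the excerpt: reject any other norm order in this sketch.
    raise ValueError("ord must be np.inf, 1, or 2 in this sketch.")


grads = np.array([[3.0, -4.0]])
linf_step = perturbation_sketch(grads, eps=0.1, ord=np.inf)
l2_step = perturbation_sketch(grads, eps=0.1, ord=2)
```

With `grads = [[3.0, -4.0]]`, the `np.inf` branch yields `0.1` and `-0.1`, the `ord == 2` branch yields the unit vector `0.6` and `-0.8`, and calling the function with `ord=1` raises `NotImplementedError`.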

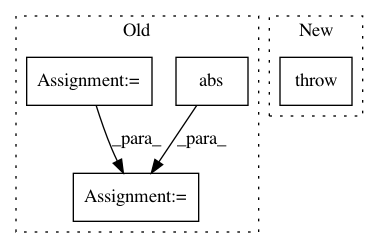

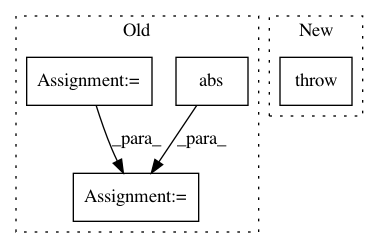

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: tensorflow/cleverhans

Commit Name: 1ff8c3da9838d71d3c5fbe91dcef50b160f5660c

Time: 2019-06-27

Author: papernot@google.com

File Name: cleverhans/future/jax/attacks/fast_gradient_method.py

Class Name:

Method Name: fast_gradient_method

Project Name: NVIDIA/sentiment-discovery

Commit Name: 81658f79c135ce6e04d89a0055d7090d5efea80e

Time: 2018-08-10

Author: raulp@dbcluster.nvidia.com

File Name: fp16/loss_scaler.py

Class Name: DynamicLossScaler

Method Name: _has_inf_or_nan

Project Name: scipy/scipy

Commit Name: 45d980c8d1adf474bdf0872a98e761136acde314

Time: 2015-02-08

Author: mclee@aftercollege.com

File Name: scipy/sparse/linalg/_norm.py

Class Name:

Method Name: norm