2d9caa8a3180a9cbccf9c4bf409e2e7a89bf045f,ml/rl/workflow/dqn_workflow.py,,main,#Any#,145

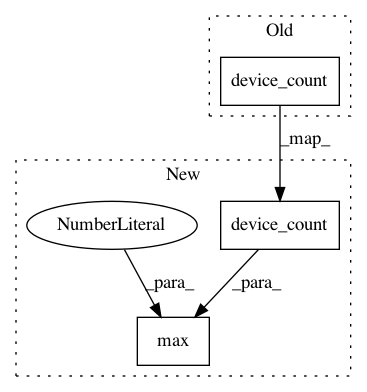

Before Change

def main(params):
    if params["use_all_avail_gpus"]:
        params["num_gpus"] = torch.cuda.device_count()
        multiprocessing.spawn(
            single_process_main, nprocs=params["num_gpus"], args=[params]
        )
    else:

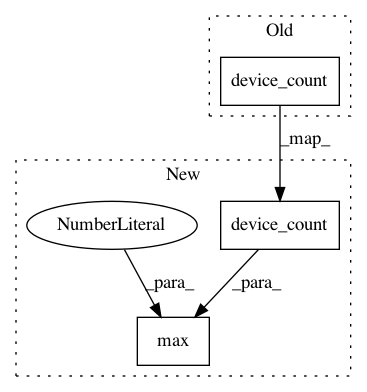

After Change

)

params["use_all_avail_gpus"] = False

    if params["use_all_avail_gpus"]:
        params["num_processes_per_node"] = max(1, torch.cuda.device_count())
        multiprocessing.spawn(
            single_process_main, nprocs=params["num_processes_per_node"], args=[params]
        )
    else:
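
The practical effect of the `max(1, ...)` guard in the After snippet can be shown with a small sketch. It is not code from the commit: `resolve_num_processes` is a hypothetical helper, and `device_count` is a plain integer standing in for `torch.cuda.device_count()` so that the example runs without PyTorch or a GPU.

```python
def resolve_num_processes(params, device_count):
    """Return a copy of params with the process count set as in the After snippet.

    Hypothetical helper for illustration; device_count stands in for
    torch.cuda.device_count().
    """
    resolved = dict(params)
    if resolved["use_all_avail_gpus"]:
        # Same guard as the After snippet: never request fewer than one process,
        # even when no CUDA device is visible.
        resolved["num_processes_per_node"] = max(1, device_count)
    return resolved


cpu_only = resolve_num_processes({"use_all_avail_gpus": True}, device_count=0)
four_gpus = resolve_num_processes({"use_all_avail_gpus": True}, device_count=4)
print(cpu_only["num_processes_per_node"], four_gpus["num_processes_per_node"])  # prints: 1 4
```

With the Before version, a machine with no visible GPU would pass `nprocs=0` to `multiprocessing.spawn`; the After version always asks for at least one worker.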

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 3

Instances

Project Name: facebookresearch/Horizon

Commit Name: 2d9caa8a3180a9cbccf9c4bf409e2e7a89bf045f

Time: 2019-04-11

Author: jjg@fb.com

File Name: ml/rl/workflow/dqn_workflow.py

Class Name:

Method Name: main

Project Name: pytorch/fairseq

Commit Name: 7633129ba8d5f0e28bd6b6d6027b14352482ef31

Time: 2019-01-04

Author: myleott@fb.com

File Name: fairseq/options.py

Class Name:

Method Name: add_distributed_training_args

Project Name: facebookresearch/Horizon

Commit Name: 2d9caa8a3180a9cbccf9c4bf409e2e7a89bf045f

Time: 2019-04-11

Author: jjg@fb.com

File Name: ml/rl/workflow/parametric_dqn_workflow.py

Class Name:

Method Name: main