f1aadee66689491a6993070955abe7987b9818fc,onmt/utils/loss.py,,shards,#Any#Any#Any#,269

Before Change

// Assumed backprop'd

variables = []

for k, (v, v_split) in non_none.items():

    if isinstance(v, torch.Tensor) and state[k].requires_grad:

        variables.extend(zip(torch.split(state[k], shard_size),

                             [v_chunk.grad for v_chunk in v_split]))

inputs, grads = zip(*variables)

torch.autograd.backward(inputs, grads)

After Change

// want a sequence of dictionaries of tensors.

// First, unzip the dictionary into a sequence of keys and a

// sequence of tensor-like sequences.

keys, values = zip(*((k, reversed(v_split))

                     for k, (_, v_split) in non_none.items()))
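
The unzip step described in the comments above can be sketched in plain Python. This is only an illustration under stated assumptions: the toy `non_none` contents and the helper name `iter_shards` are hypothetical, strings stand in for tensor chunks, any per-chunk transformation is left out, and the re-zip loop is one plausible way to get "a sequence of dictionaries", not necessarily what the commit does.

```python
# Hypothetical stand-in for `non_none`: each key maps to a pair of
# (original value, list of per-shard chunks). Strings replace tensors here.
non_none = {
    "output": ("abcdef", ["ab", "cd", "ef"]),
    "target": ("uvwxyz", ["uv", "wx", "yz"]),
}


def iter_shards(non_none):
    """Yield one {key: chunk} dictionary per shard (illustrative helper)."""
    # Unzip the dictionary into a tuple of keys and a tuple of
    # per-key chunk sequences, as in the snippet above.
    keys, values = zip(*((k, v_split) for k, (_, v_split) in non_none.items()))
    # Re-zip by shard: zip(*values) groups the i-th chunk of every key,
    # so each iteration sees one chunk per key.
    for shard_values in zip(*values):
        yield dict(zip(keys, shard_values))


shards = list(iter_shards(non_none))
```

Each yielded dictionary has the same keys as `non_none`, so a caller can treat every shard like a smaller version of the original state.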

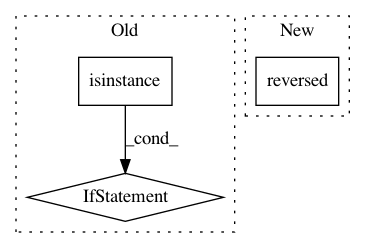

// Now, yield a dictionary for each shard. The keys are always

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances Project Name: OpenNMT/OpenNMT-py

Commit Name: f1aadee66689491a6993070955abe7987b9818fc

Time: 2019-01-23

Author: guillaumekln@users.noreply.github.com

File Name: onmt/utils/loss.py

Class Name:

Method Name: shards

Project Name: keras-team/keras

Commit Name: 65e4f94e45f32d9cbe99337d74ed9c1ebad3412a

Time: 2015-06-03

Author: jason.ramapuram@viasat.com

File Name: keras/layers/core.py

Class Name: AutoEncoder

Method Name: __init__

Project Name: mil-tokyo/webdnn

Commit Name: b697c6f3a5ba11f7507f963596d47731754cb99c

Time: 2017-04-30

Author: y.kikura@gmail.com

File Name: src/graph_builder/frontend/sub_rules/remove_last_softmax.py

Class Name: RemoveLastSoftmax

Method Name: optimize