57544b1ff9f97d4da9f64d25c8ea5a3d8d247ffc,rllib/examples/two_trainer_workflow.py,,,#,91

Before Change

        default_policy=None,
        execution_plan=custom_training_workflow)

    tune.run(
        MyTrainer,
        stop={"training_iteration": args.num_iters},
        config={
            "rollout_fragment_length": 50,
            "num_workers": 0,
            "env": "multi_agent_cartpole",
            "multiagent": {
                "policies": policies,
                "policy_mapping_fn": policy_mapping_fn,
                "policies_to_train": ["dqn_policy", "ppo_policy"],
            },
        })

After Change

    if args.as_test:
        check_learning_achieved(results, args.stop_reward)

    ray.shutdown()
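
The "After Change" fragment uses a `results` variable that the "Before Change" code never assigns, which suggests the change captures the return value of `tune.run(...)` and checks it against a reward threshold when the script runs as a test. The sketch below illustrates that pattern without requiring Ray. Both `fake_run` and this local `check_learning_achieved` are hypothetical stand-ins written for illustration, and the `trials[0].last_result["episode_reward_mean"]` layout is an assumption about what such a check might read, not a statement of the library's API.

```python
from types import SimpleNamespace


def check_learning_achieved(tune_results, min_reward):
    """Hypothetical stand-in: raise if the first trial's final mean reward
    is below `min_reward`; otherwise return that reward."""
    # Assumed result layout: a list of trials, each with a `last_result` dict.
    reward = tune_results.trials[0].last_result["episode_reward_mean"]
    if reward < min_reward:
        raise ValueError(f"`stop-reward` of {min_reward} not reached!")
    return reward


def fake_run(final_reward):
    """Hypothetical stand-in for a training run that returns a results object."""
    trial = SimpleNamespace(last_result={"episode_reward_mean": final_reward})
    return SimpleNamespace(trials=[trial])


# Mirrors the shape of the "After Change" code: keep the results, then
# verify them only when running in test mode.
as_test, stop_reward = True, 150.0
results = fake_run(final_reward=155.0)

if as_test:
    achieved = check_learning_achieved(results, stop_reward)
```

Keeping the check behind a flag lets the same example script serve both as a demo (no assertion) and as a regression test that fails loudly when the reward target is missed.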

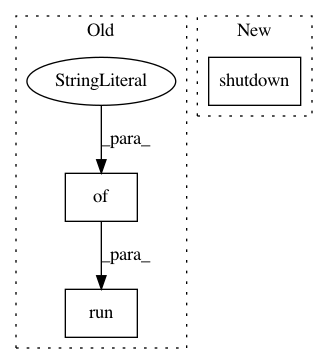

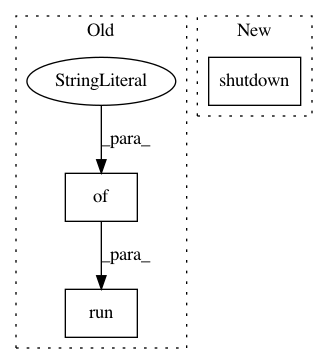

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: ray-project/ray

Commit Name: 57544b1ff9f97d4da9f64d25c8ea5a3d8d247ffc

Time: 2020-05-11

Author: sven@anyscale.io

File Name: rllib/examples/two_trainer_workflow.py

Class Name:

Method Name:

Project Name: ray-project/ray

Commit Name: 57544b1ff9f97d4da9f64d25c8ea5a3d8d247ffc

Time: 2020-05-11

Author: sven@anyscale.io

File Name: rllib/examples/eager_execution.py

Class Name:

Method Name:

Project Name: ray-project/ray

Commit Name: 57544b1ff9f97d4da9f64d25c8ea5a3d8d247ffc

Time: 2020-05-11

Author: sven@anyscale.io

File Name: rllib/examples/custom_env.py

Class Name:

Method Name: