ca907342507c1139696f542de0a3351d7a382eee,reinforcement_learning/actor_critic.py,,finish_episode,#,65

Before Change

        policy_loss -= (log_prob * reward).sum()
        value_loss += F.smooth_l1_loss(value, Variable(torch.Tensor([r])))
    optimizer.zero_grad()
    (policy_loss + value_loss).backward()
    optimizer.step()
    del model.rewards[:]
    del model.saved_actions[:]
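The Before Change code accumulates the policy and value losses into running scalars inside the loop. The After Change code instead collects one loss term per step in a list and reduces the list once. The sketch below checks that the two styles give the same loss value and the same gradients. It assumes current PyTorch (0.4 or later), where each per-step term is a zero-dimensional tensor. It therefore uses `torch.stack` rather than the `torch.cat` of the 2017 code, because `torch.cat` rejects zero-dimensional tensors. The logits and advantage values are made-up toy data.

```python
import torch

# Toy data (made up for illustration): log-probabilities that require grad,
# and per-step advantages treated as constants.
torch.manual_seed(0)
logits = torch.randn(5, requires_grad=True)
log_probs = torch.log_softmax(logits, dim=0)
advantages = torch.tensor([0.5, -1.0, 2.0, 0.0, 1.5])

# "Before" style: accumulate a running scalar loss inside the loop.
policy_loss = 0
for log_prob, adv in zip(log_probs, advantages):
    policy_loss -= log_prob * adv

# "After" style: collect per-step terms, then reduce once.
policy_losses = [-log_prob * adv for log_prob, adv in zip(log_probs, advantages)]
stacked_loss = torch.stack(policy_losses).sum()

# Gradients of both losses with respect to the same leaf tensor.
(grad_before,) = torch.autograd.grad(policy_loss, logits, retain_graph=True)
(grad_after,) = torch.autograd.grad(stacked_loss, logits)

same_loss = torch.allclose(policy_loss, stacked_loss)
same_grad = torch.allclose(grad_before, grad_after)
print(same_loss, same_grad)
```

Both checks should pass, up to floating-point summation order. The list-based form is therefore a restructuring of the computation, not a change to the objective being optimized.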

After Change

def finish_episode():
    R = 0
    saved_actions = model.saved_actions
    policy_losses = []
    value_losses = []
    rewards = []
    for r in model.rewards[::-1]:
        R = r + args.gamma * R
        rewards.insert(0, R)
    rewards = torch.Tensor(rewards)
    rewards = (rewards - rewards.mean()) / (rewards.std() + np.finfo(np.float32).eps)
    for (log_prob, value), r in zip(saved_actions, rewards):
        reward = r - value.data[0, 0]
        policy_losses.append(-log_prob * reward)
        value_losses.append(F.smooth_l1_loss(value, Variable(torch.Tensor([r]))))
    optimizer.zero_grad()
    loss = torch.cat(policy_losses).sum() + torch.cat(value_losses).sum()
    loss.backward()
    optimizer.step()
    del model.rewards[:]
    del model.saved_actions[:]
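The After Change `finish_episode` targets the 2017 PyTorch API. It uses `Variable`, reads the critic's value with `value.data[0, 0]`, and calls `torch.cat` on one-element tensors. It also relies on module-level globals (`model`, `args`, `optimizer`).

Below is a minimal sketch of the same update written for current PyTorch. It makes these substitutions:

* `torch.stack` replaces `torch.cat`.
* `value.item()` replaces `value.data[0, 0]`, so the advantage is still a constant with respect to the critic.
* Plain tensors replace `Variable`.
* `torch.finfo` replaces `np.finfo`.

It also assumes that rewards and saved `(log_prob, value)` pairs are passed in as arguments rather than stored on the model. The names `discounted_returns`, `finish_episode_sketch` and `TinyActorCritic` are hypothetical and are not part of pytorch/examples.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F

EPS = torch.finfo(torch.float32).eps  # plays the role of np.finfo(np.float32).eps


def discounted_returns(rewards, gamma):
    """Compute R_t = r_t + gamma * R_{t+1}, walking the episode backwards."""
    R = 0.0
    returns = []
    for r in reversed(rewards):
        R = r + gamma * R
        returns.insert(0, R)
    return torch.tensor(returns)


def finish_episode_sketch(rewards, saved_actions, gamma, optimizer):
    """Hypothetical helper: one actor-critic update for a finished episode.

    saved_actions holds (log_prob, value) pairs: log_prob is a 0-dim tensor,
    value is a one-element tensor from the critic head.
    """
    returns = discounted_returns(rewards, gamma)
    # Normalize returns; EPS avoids division by zero (needs >= 2 steps for std).
    returns = (returns - returns.mean()) / (returns.std() + EPS)

    policy_losses, value_losses = [], []
    for (log_prob, value), R in zip(saved_actions, returns):
        advantage = R - value.item()  # float subtraction: no gradient into the critic
        policy_losses.append(-log_prob * advantage)
        value_losses.append(F.smooth_l1_loss(value.view(-1), R.view(1)))

    optimizer.zero_grad()
    # torch.stack, not torch.cat: each per-step loss is a 0-dim tensor.
    loss = torch.stack(policy_losses).sum() + torch.stack(value_losses).sum()
    loss.backward()
    optimizer.step()
    return loss.item()


class TinyActorCritic(nn.Module):
    """Hypothetical two-headed network: shared layer, action head, value head."""

    def __init__(self, obs_dim=4, n_actions=2):
        super().__init__()
        self.shared = nn.Linear(obs_dim, 16)
        self.action_head = nn.Linear(16, n_actions)
        self.value_head = nn.Linear(16, 1)

    def forward(self, x):
        h = F.relu(self.shared(x))
        return F.softmax(self.action_head(h), dim=-1), self.value_head(h)


# Usage: a fake 5-step episode with random observations and reward 1.0 per step.
torch.manual_seed(0)
model = TinyActorCritic()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
saved_actions, rewards = [], []
for _ in range(5):
    probs, value = model(torch.randn(4))
    dist = torch.distributions.Categorical(probs)
    action = dist.sample()
    saved_actions.append((dist.log_prob(action), value))
    rewards.append(1.0)

loss = finish_episode_sketch(rewards, saved_actions, gamma=0.99, optimizer=optimizer)
print(math.isfinite(loss))
```

The return normalization uses the sample standard deviation. An episode with a single step would therefore produce NaN, and a real training loop would need to guard against that case.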

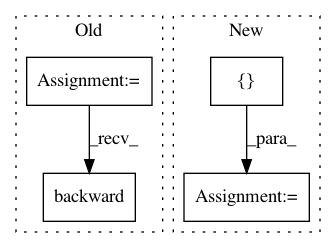

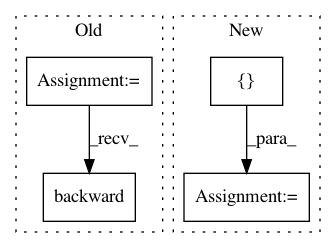

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

Project Name: pytorch/examples

Commit Name: ca907342507c1139696f542de0a3351d7a382eee

Time: 2017-12-04

Author: sgross@fb.com

File Name: reinforcement_learning/actor_critic.py

Class Name:

Method Name: finish_episode

Project Name: chainer/chainerrl

Commit Name: 90a55f5d4953f023b580351d33ca06c4e9d39ffa

Time: 2016-03-17

Author: muupan@gmail.com

File Name: a3c.py

Class Name: A3C

Method Name: act

Project Name: rusty1s/pytorch_geometric

Commit Name: b48c943b9f6248dbdd42d0fce44658b937098372

Time: 2017-10-23

Author: matthias.fey@tu-dortmund.de

File Name: torch_geometric/sparse/sum_test.py

Class Name: SumTest

Method Name: test_autograd

Project Name: HyperGAN/HyperGAN

Commit Name: 691e0b50d0b15665df5ec77eeec7c605c4283e1f

Time: 2020-03-08

Author: mikkel@255bits.com

File Name: hypergan/trainers/alternating_trainer.py

Class Name: AlternatingTrainer

Method Name: _step