88d079cf2cbee4aa793c9ccf09ae0e6db2c393f7,examples/bss_example.py,,,#,57

Before Change

print("Time for BSS: {:.2f} s".format(t_end - t_begin))

## STFT Synthesis

y = pra.transform.synthesis(Y, L, L, zp_front=L//2, zp_back=L//2).T

## Compare SDR and SIR

sdr, sir, sar, perm = bss_eval_sources(ref[:,:y.shape[1]-L//2,0], y[:,L//2:ref.shape[1]+L//2])

After Change

## Prepare one-shot STFT

L = args.block

hop = L // 2

win_a = pra.hann(L)

win_s = pra.transform.compute_synthesis_window(win_a, hop)

## Create a room with sources and mics

# Room dimensions in meters

room_dim = [8, 9]

# source location

source = np.array([1, 4.5])

room = pra.ShoeBox(

    room_dim,

    fs=16000,

    max_order=15,

    absorption=0.35,

    sigma2_awgn=1e-8)

# get signals

signals = [ np.concatenate([wavfile.read(f)[1].astype(np.float32)

    for f in source_files])

for source_files in wav_files ]

delays = [1., 0.]

locations = [[2.5,3], [2.5, 6]]

# add mic and good source to room

# add silent signals to all sources

for sig, d, loc in zip(signals, delays, locations):

    room.add_source(loc, signal=np.zeros_like(sig), delay=d)

# add microphone array

room.add_microphone_array(

    pra.MicrophoneArray(np.c_[[6.5, 4.49], [6.5, 4.51]], fs=room.fs)

)

# compute RIRs

room.compute_rir()

# Record each source separately

separate_recordings = []

for source, signal in zip(room.sources, signals):

    source.signal[:] = signal

    room.simulate()

    separate_recordings.append(room.mic_array.signals)

    source.signal[:] = 0.

separate_recordings = np.array(separate_recordings)

# Mix down the recorded signals

mics_signals = np.sum(separate_recordings, axis=0)

## Monitor Convergence

ref = np.moveaxis(separate_recordings, 1, 2)

SDR, SIR = [], []

def convergence_callback(Y):

    global SDR, SIR

    from mir_eval.separation import bss_eval_sources

    ref = np.moveaxis(separate_recordings, 1, 2)

    y = pra.transform.synthesis(Y, L, hop, win=win_s)

    y = y[L-hop: , :].T

    m = np.minimum(y.shape[1], ref.shape[1])

    sdr, sir, sar, perm = bss_eval_sources(ref[:, :m, 0], y[:, :m])

    SDR.append(sdr)

    SIR.append(sir)

## STFT ANALYSIS

X = pra.transform.analysis(mics_signals.T, L, hop, win=win_a)

t_begin = time.perf_counter()

## START BSS

bss_type = args.algo

if bss_type == "auxiva":

    # Run AuxIVA

    Y = pra.bss.auxiva(X, n_iter=30, proj_back=True,

                       callback=convergence_callback)

elif bss_type == "ilrma":

    # Run ILRMA

    Y = pra.bss.ilrma(X, n_iter=30, n_components=2, proj_back=True,

                      callback=convergence_callback)

elif bss_type == "fastmnmf":

    # Run FastMNMF

    Y = pra.bss.fastmnmf(X, n_iter=100, n_components=8, n_src=2,

                         callback=convergence_callback)

elif bss_type == "sparseauxiva":

    # Estimate set of active frequency bins

    ratio = 0.35

    average = np.abs(np.mean(np.mean(X, axis=2), axis=0))

    k = np.int_(average.shape[0] * ratio)

    S = np.sort(np.argpartition(average, -k)[-k:])

    # Run SparseAuxIva

    Y = pra.bss.sparseauxiva(X, S, n_iter=30, proj_back=True,

                             callback=convergence_callback)

t_end = time.perf_counter()

print("Time for BSS: {:.2f} s".format(t_end - t_begin))

## STFT Synthesis

y = pra.transform.synthesis(Y, L, hop, win=win_s)

## Compare SDR and SIR

y = y[L-hop:, :].T

m = np.minimum(y.shape[1], ref.shape[1])
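
The `sparseauxiva` branch in the listing above estimates a set `S` of active frequency bins before running the algorithm. Below is a minimal NumPy-only sketch of that selection step. It assumes `X` has shape `(n_frames, n_freq, n_channels)`, which is inferred from the axes averaged in the listing. The helper name `select_active_bins` and the toy data are hypothetical and not part of pyroomacoustics.

```python
import numpy as np


def select_active_bins(X, ratio=0.35):
    """Return the sorted indices of the k bins with the largest averaged magnitude.

    Hypothetical helper, not a pyroomacoustics function.
    Assumes X has shape (n_frames, n_freq, n_channels) and that
    ratio is large enough for k to be at least 1.
    """
    # As in the listing: average the complex STFT over channels (axis 2),
    # then over frames (axis 0), and only then take the magnitude.
    average = np.abs(np.mean(np.mean(X, axis=2), axis=0))
    k = int(average.shape[0] * ratio)
    # argpartition moves the k largest entries into the last k slots (unordered),
    # so the result is sorted afterwards to get ascending bin indices.
    return np.sort(np.argpartition(average, -k)[-k:])


# Toy data (made up for illustration): low-level complex noise everywhere,
# plus a constant offset in four chosen bins.
rng = np.random.default_rng(0)
n_frames, n_freq, n_chan = 50, 16, 2
shape = (n_frames, n_freq, n_chan)
X = 0.01 * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))
strong_bins = [2, 5, 9, 14]
X[:, strong_bins, :] += 1.0

S = select_active_bins(X, ratio=0.25)  # k = int(16 * 0.25) = 4
print(S)
```

With this toy input the four boosted bins are the ones selected. Because the magnitude is taken after averaging, bins whose phase varies a lot across frames are averaged toward zero; that mirrors the listing and is not a general recommendation.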

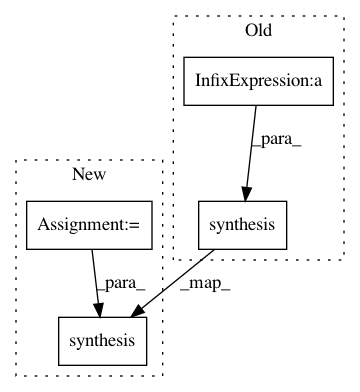

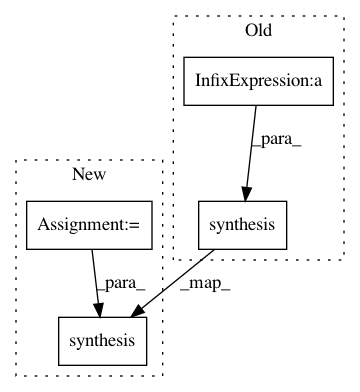

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: LCAV/pyroomacoustics

Commit Name: 88d079cf2cbee4aa793c9ccf09ae0e6db2c393f7

Time: 2019-11-05

Author: fakufaku@gmail.com

File Name: examples/bss_example.py

Class Name:

Method Name:

Project Name: LCAV/pyroomacoustics

Commit Name: 0bdc1ceeea077750a8b28eb8aacd5ed07deead3c

Time: 2019-09-27

Author: fakufaku@gmail.com

File Name: examples/bss_example.py

Class Name:

Method Name:

Project Name: epfl-lts2/pygsp

Commit Name: f259f05cd05fb34d0c2575bc3b2305faecd1dfcd

Time: 2017-08-19

Author: michael.defferrard@epfl.ch

File Name: pygsp/tests/test_filters.py

Class Name: TestCase

Method Name: _test_synthesis