e46c1ded997580101e5a5dd3ef0e6501e82f59af,tensorforce/tests/test_tutorial_code.py,TestTutorialCode,test_blogpost_introduction_runner,#TestTutorialCode#,395

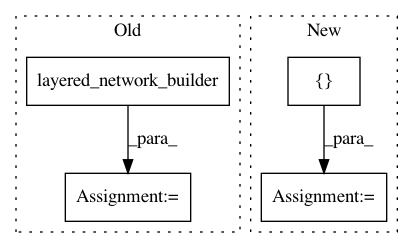

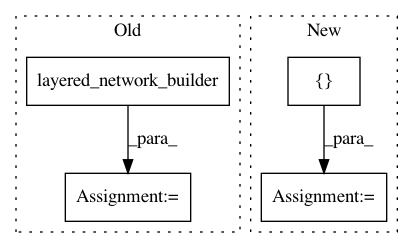

Before Change

        target_update_frequency=20,
        states=environment.states,
        actions=environment.actions,
        network=layered_network_builder(network_config)
    )

    agent = DQNAgent(config=agent_config)
    runner = Runner(agent=agent, environment=environment)

    def episode_finished(runner):
        if runner.episode % 100 == 0:

After Change

    from tensorforce.agents import DQNAgent
    from tensorforce.execution import Runner

    environment = MinimalTest(specification=[("int", ())])

    network_config = [
        dict(type="dense", size=32)
    ]
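
The Before Change fragment cuts off inside `episode_finished`, a callback that checks `runner.episode % 100 == 0`. The sketch below illustrates how such a periodic callback might behave. It is not the code from the commit: `StubRunner`, its `run` signature, the placeholder reward, and the rule that a falsy return value stops the loop are all assumptions made for illustration, and no Tensorforce classes are used.

```python
# Hypothetical stand-in for a training runner; NOT Tensorforce's Runner class.
class StubRunner:
    def __init__(self):
        self.episode = 0
        self.episode_rewards = []

    def run(self, episodes, episode_finished):
        for _ in range(episodes):
            self.episode += 1
            # Placeholder reward; no real environment or agent is involved.
            self.episode_rewards.append(1.0)
            # Assumed convention: a falsy return value stops the loop early.
            if not episode_finished(self):
                break


logged = []


def episode_finished(runner):
    # Report progress every 100 episodes, mirroring the check in the fragment.
    if runner.episode % 100 == 0:
        logged.append(runner.episode)
        print("Finished episode {}".format(runner.episode))
    return True  # keep running


runner = StubRunner()
runner.run(episodes=300, episode_finished=episode_finished)
```

Keeping the reporting logic in a callback leaves the loop itself unaware of how often, or whether, progress is logged.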

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: reinforceio/tensorforce

Commit Name: e46c1ded997580101e5a5dd3ef0e6501e82f59af

Time: 2017-10-16

Author: mi.schaarschmidt@gmail.com

File Name: tensorforce/tests/test_tutorial_code.py

Class Name: TestTutorialCode

Method Name: test_blogpost_introduction_runner

Project Name: reinforceio/tensorforce

Commit Name: bc3343ccf075e2feef0ca832a48ef2cd16335d99

Time: 2017-07-29

Author: mi.schaarschmidt@gmail.com

File Name: tensorforce/core/baselines/mlp.py

Class Name: MLPBaseline

Method Name: create_tf_operations

Project Name: reinforceio/tensorforce

Commit Name: ad1a625cd2b2dd42701435e6174a98c323be5a3e

Time: 2017-10-16

Author: mi.schaarschmidt@gmail.com

File Name: tensorforce/tests/test_reward_estimation.py

Class Name: TestRewardEstimation

Method Name: test_basic