34726d5612322b79b621e52f7f6fe47a6716eb65,fairseq/distributed_utils.py,,distributed_init,#Any#,53

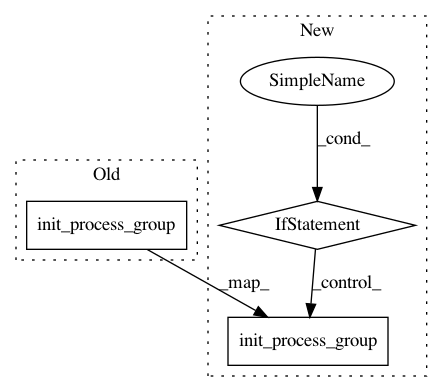

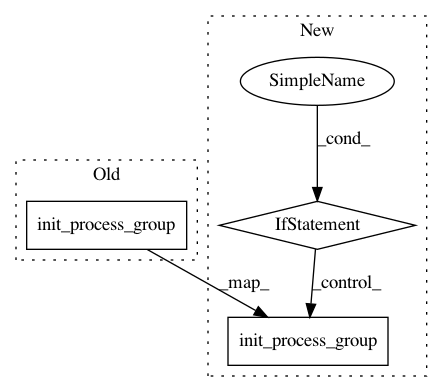

Before Change

print("| distributed init (rank {}): {}".format(
    args.distributed_rank, args.distributed_init_method), flush=True)

dist.init_process_group(
    backend=args.distributed_backend,
    init_method=args.distributed_init_method,
    world_size=args.distributed_world_size,
    rank=args.distributed_rank,
)

suppress_output(is_master(args))

return args.distributed_rank

After Change

if args.distributed_world_size == 1:
    raise ValueError("Cannot initialize distributed with distributed_world_size=1")

if torch.distributed.is_initialized():
    warnings.warn("Distributed is already initialized, cannot initialize twice!")
else:
    print("| distributed init (rank {}): {}".format(
        args.distributed_rank, args.distributed_init_method), flush=True)

    dist.init_process_group(
        backend=args.distributed_backend,
        init_method=args.distributed_init_method,
        world_size=args.distributed_world_size,
        rank=args.distributed_rank,
    )

    suppress_output(is_master(args))

args.distributed_rank = torch.distributed.get_rank()

return args.distributed_rank
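The control flow of the change (reject a world size of 1, skip initialization if a process group already exists, then read the rank back from the backend) can be exercised without starting real processes. The sketch below is an illustration only, not the fairseq code: `FakeDist` is a hypothetical stand-in that mimics just the three `torch.distributed` calls used above, `distributed_init` takes it as an extra `dist` parameter, `demo` and the argument values are made up, and the `print` and `suppress_output` calls are left out.

```python
import warnings
from types import SimpleNamespace


class FakeDist:
    """Hypothetical stand-in for torch.distributed; it only tracks the rank."""

    def __init__(self):
        self._rank = None

    def is_initialized(self):
        return self._rank is not None

    def init_process_group(self, backend, init_method, world_size, rank):
        if self.is_initialized():
            raise RuntimeError("process group already initialized")
        self._rank = rank

    def get_rank(self):
        if not self.is_initialized():
            raise RuntimeError("process group not initialized")
        return self._rank


def distributed_init(args, dist):
    # A single process has no peers, so refuse to set up a group at all.
    if args.distributed_world_size == 1:
        raise ValueError("Cannot initialize distributed with distributed_world_size=1")

    if dist.is_initialized():
        # The group already exists (e.g. set up by a launcher); warn and reuse it.
        warnings.warn("Distributed is already initialized, cannot initialize twice!")
    else:
        dist.init_process_group(
            backend=args.distributed_backend,
            init_method=args.distributed_init_method,
            world_size=args.distributed_world_size,
            rank=args.distributed_rank,
        )

    # Take the rank from the backend rather than trusting the value in args.
    args.distributed_rank = dist.get_rank()
    return args.distributed_rank


def make_args(world_size, rank):
    # Illustrative values only; the init method URL is an assumption.
    return SimpleNamespace(
        distributed_backend="gloo",
        distributed_init_method="tcp://localhost:12345",
        distributed_world_size=world_size,
        distributed_rank=rank,
    )


def demo():
    dist = FakeDist()
    first = distributed_init(make_args(world_size=2, rank=1), dist)
    # The second call passes a stale rank; the guard should warn and keep rank 1.
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")
        second = distributed_init(make_args(world_size=2, rank=0), dist)
    return first, second, len(caught)
```

Calling `demo()` initializes the fake group once and then calls `distributed_init` again with a different rank in `args`. The second call emits a warning instead of re-initializing, and the returned rank comes from the backend, which is the behavior the added `get_rank()` line provides.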

In pattern: SUPERPATTERN

Frequency: 5

Non-data size: 3

Instances

Project Name: elbayadm/attn2d

Commit Name: 34726d5612322b79b621e52f7f6fe47a6716eb65

Time: 2019-05-02

Author: myleott@fb.com

File Name: fairseq/distributed_utils.py

Class Name:

Method Name: distributed_init

Project Name: allenai/allennlp

Commit Name: f27475aaeba87052e277df83beaadf002f4fd9da

Time: 2020-05-21

Author: dirkg@allenai.org

File Name: allennlp/commands/train.py

Class Name:

Method Name: _train_worker

Project Name: dmlc/dgl

Commit Name: cda0abf75a8ea141b435fddcab9a9611c4c4a487

Time: 2020-07-14

Author: zhengda1936@gmail.com

File Name: examples/pytorch/graphsage/experimental/train_dist.py

Class Name:

Method Name: main

Project Name: pytorch/examples

Commit Name: 10a52b8096178817525a1a87a58f78c5f6fb053e

Time: 2020-12-10

Author: zhanyi@microsoft.com

File Name: distributed/ddp/example.py

Class Name:

Method Name: spmd_main