c87a29c49f488ce290c5942d964bde73c3ff2c91,samples/dqn_expreplay.py,,,#,15

Before Change

test_s = Variable(torch.from_numpy(np.array([env.reset()], dtype=np.float32)))

print(model(test_s))

print(action_selector(model(test_s)))

print(loss_fn(model(test_s), Variable(torch.Tensor([[1.0, 0.0, 2.0]]))))

# TODO: implement env pooling

exp_replay = ExperienceReplayBuffer(env, params, buffer_size=100)

After Change

# populate buffer

exp_replay.populate(50)

# sample batch from buffer

batch = exp_replay.sample(50)

# convert experience batch into training samples

pass
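
The populate / sample / convert steps above can be sketched in plain Python. This is a minimal illustration, not the ptan implementation: `SimpleReplayBuffer`, `ToyEnv`, `Experience`, and `batch_to_targets` are hypothetical names, the environment is a stand-in, and the conversion step assumes a one-step DQN target (reward plus discounted maximum next-state Q-value), which the original snippet leaves as `pass`.

```python
import random
from collections import deque, namedtuple

# Hypothetical record layout; the original buffer's element type is not shown.
Experience = namedtuple("Experience", "state action reward done next_state")


class ToyEnv:
    """Stand-in environment: the episode ends after 5 steps, reward is always 1."""

    def reset(self):
        self.t = 0
        return [0.0]

    def step(self, action):
        self.t += 1
        return [float(self.t)], 1.0, self.t >= 5


class SimpleReplayBuffer:
    """Fixed-size buffer filled by a random policy (an assumption for this sketch)."""

    def __init__(self, env, buffer_size, n_actions=3, seed=0):
        self.env = env
        self.buffer = deque(maxlen=buffer_size)  # oldest entries are evicted first
        self.n_actions = n_actions
        self.rng = random.Random(seed)
        self.state = env.reset()

    def populate(self, samples):
        # Step the environment `samples` times and store each transition.
        for _ in range(samples):
            action = self.rng.randrange(self.n_actions)
            next_state, reward, done = self.env.step(action)
            self.buffer.append(
                Experience(self.state, action, reward, done, next_state))
            self.state = self.env.reset() if done else next_state

    def sample(self, batch_size):
        # Uniform sampling without replacement, capped at the buffer length.
        batch_size = min(batch_size, len(self.buffer))
        return self.rng.sample(list(self.buffer), batch_size)


def batch_to_targets(batch, q_fn, gamma=0.99):
    """Turn transitions into (states, target Q-vectors) using a one-step target."""
    states, targets = [], []
    for exp in batch:
        q = list(q_fn(exp.state))
        bootstrap = 0.0 if exp.done else gamma * max(q_fn(exp.next_state))
        q[exp.action] = exp.reward + bootstrap  # only the taken action changes
        states.append(exp.state)
        targets.append(q)
    return states, targets


exp_replay = SimpleReplayBuffer(ToyEnv(), buffer_size=100)
exp_replay.populate(50)
batch = exp_replay.sample(50)
# q_fn stands in for the model; here it returns zeros for three actions.
states, targets = batch_to_targets(batch, q_fn=lambda s: [0.0, 0.0, 0.0])
```

In a real training loop `q_fn` would wrap the network's forward pass, and `states` and `targets` would be stacked into tensors before being passed to the loss function.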

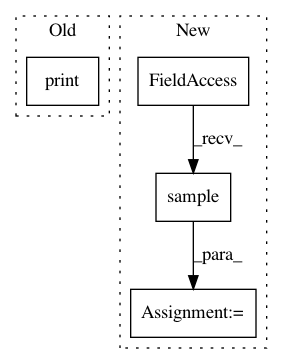

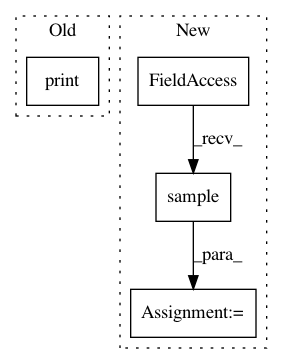

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: Shmuma/ptan

Commit Name: c87a29c49f488ce290c5942d964bde73c3ff2c91

Time: 2017-04-26

Author: maxl@fornova.net

File Name: samples/dqn_expreplay.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: ab5f6d6986fc4f1eebc0b5b3c8972daba029954b

Time: 2018-01-18

Author: max.lapan@gmail.com

File Name: ch13/adhoc/wob_clicks.py

Class Name:

Method Name:

Project Name: Shmuma/ptan

Commit Name: 5e63df5e0b53b583b6865cc099cecd5436563113

Time: 2017-06-22

Author: max.lapan@gmail.com

File Name: samples/reinforce.py

Class Name:

Method Name: