87d1b5356187fb4a35aa11204223ed2c6586268d,perfkitbenchmarker/linux_packages/fio.py,,ParseResults,#Any#Any#Any#Any#Any#,141

Before Change

```python
# Excerpt from the body of ParseResults. samples, job_file, fio_json_result,
# base_metadata, log_file_base and bin_vals are bound earlier in the function
# (parameters or locals, not shown).
timestamp = time.time()
parameter_metadata = ParseJobFile(job_file)
io_modes = DATA_DIRECTION.values()
for (idx, job) in enumerate(fio_json_result["jobs"]):
  job_name = job["jobname"]
  parameters = parameter_metadata[job_name]
  parameters["fio_job"] = job_name
  if base_metadata:
    parameters.update(base_metadata)
  for mode in io_modes:
    if job[mode]["io_bytes"]:
      metric_name = "%s:%s" % (job_name, mode)
      bw_metadata = {
          "bw_min": job[mode]["bw_min"],
          "bw_max": job[mode]["bw_max"],
          "bw_dev": job[mode]["bw_dev"],
          "bw_agg": job[mode]["bw_agg"],
          "bw_mean": job[mode]["bw_mean"]}
      bw_metadata.update(parameters)
      samples.append(
          sample.Sample("%s:bandwidth" % metric_name,
                        job[mode]["bw"],
                        "KB/s", bw_metadata))

      # There is one sample whose metric is "<metric_name>:latency"
      # with all of the latency statistics in its metadata, and then
      # a bunch of samples whose metrics are
      # "<metric_name>:latency:min" through
      # "<metric_name>:latency:p99.99" that hold the individual
      # latency numbers as values. This is for historical reasons.
      clat_section = job[mode]["clat"]
      percentiles = clat_section["percentile"]
      lat_statistics = [
          ("min", clat_section["min"]),
          ("max", clat_section["max"]),
          ("mean", clat_section["mean"]),
          ("stddev", clat_section["stddev"]),
          ("p1", percentiles["1.000000"]),
          ("p5", percentiles["5.000000"]),
          ("p10", percentiles["10.000000"]),
          ("p20", percentiles["20.000000"]),
          ("p30", percentiles["30.000000"]),
          ("p40", percentiles["40.000000"]),
          ("p50", percentiles["50.000000"]),
          ("p60", percentiles["60.000000"]),
          ("p70", percentiles["70.000000"]),
          ("p80", percentiles["80.000000"]),
          ("p90", percentiles["90.000000"]),
          ("p95", percentiles["95.000000"]),
          ("p99", percentiles["99.000000"]),
          ("p99.5", percentiles["99.500000"]),
          ("p99.9", percentiles["99.900000"]),
          ("p99.95", percentiles["99.950000"]),
          ("p99.99", percentiles["99.990000"])]

      lat_metadata = parameters.copy()
      for name, val in lat_statistics:
        lat_metadata[name] = val
      samples.append(
          sample.Sample("%s:latency" % metric_name,
                        job[mode]["clat"]["mean"],
                        "usec", lat_metadata, timestamp))

      for stat_name, stat_val in lat_statistics:
        samples.append(
            sample.Sample("%s:latency:%s" % (metric_name, stat_name),
                          stat_val, "usec", parameters, timestamp))

      samples.append(
          sample.Sample("%s:iops" % metric_name,
                        job[mode]["iops"], "", parameters, timestamp))

  if log_file_base and bin_vals:
    # Parse histograms
    hist_file_path = vm_util.PrependTempDir(
        "%s_clat_hist.%s.log" % (log_file_base, str(idx + 1)))
    samples += _ParseHistogram(
        hist_file_path, bin_vals[idx], job_name, parameters)

return samples
```
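The comment in the excerpt describes two kinds of latency samples: one summary sample carrying every statistic in its metadata, plus one sample per statistic. The following is a minimal, self-contained sketch of that scheme, not PKB's code. `Sample` is a hypothetical namedtuple standing in for `sample.Sample`, `latency_samples` and `PERCENTILES` are illustrative names, and the percentile keys are built with `"%f"` only because the keys in the excerpt (e.g. `"99.500000"`) have that shape.

```python
import collections
import time

# Hypothetical stand-in for PKB's sample.Sample, using the same argument
# order as the excerpt: metric, value, unit, metadata, timestamp.
Sample = collections.namedtuple(
    "Sample", ["metric", "value", "unit", "metadata", "timestamp"])

# Percentile labels used in the excerpt, without the leading "p".
PERCENTILES = ["1", "5", "10", "20", "30", "40", "50", "60", "70", "80",
               "90", "95", "99", "99.5", "99.9", "99.95", "99.99"]


def latency_samples(metric_name, clat_section, parameters, timestamp):
  """Builds one summary latency sample plus one sample per statistic."""
  percentiles = clat_section["percentile"]
  lat_statistics = [(name, clat_section[name])
                    for name in ("min", "max", "mean", "stddev")]
  # "%f" turns "99.5" into "99.500000", the key shape seen in the excerpt.
  lat_statistics += [("p" + p, percentiles["%f" % float(p)])
                     for p in PERCENTILES]
  # Summary sample: all statistics copied into a fresh metadata dict.
  lat_metadata = dict(parameters)
  lat_metadata.update(lat_statistics)
  result = [Sample("%s:latency" % metric_name, clat_section["mean"],
                   "usec", lat_metadata, timestamp)]
  # One sample per statistic, each carrying only the job parameters.
  result += [Sample("%s:latency:%s" % (metric_name, name), value,
                    "usec", parameters, timestamp)
             for name, value in lat_statistics]
  return result


# Usage with made-up numbers.
clat = {"min": 100, "max": 900, "mean": 250.0, "stddev": 40.0,
        "percentile": {"%f" % float(p): 10 * i
                       for i, p in enumerate(PERCENTILES)}}
samples = latency_samples("job1:read", clat, {"fio_job": "job1"}, time.time())
print(len(samples))  # 22
print(samples[-1].metric)  # job1:read:latency:p99.99
```

Generating the percentile list from labels avoids the 17 hand-written entries, at the cost of relying on the `"%f"` key format holding for every percentile fio reports.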

After Change

```python
  # Excerpt from the per-job loop of ParseResults; the rest of the loop body
  # is elided. clat_hist_idx is a running counter that must be bound before
  # the loop (not shown). xrange: this 2017 code targets Python 2.
  if log_file_base and bin_vals:
    # Parse histograms
    aggregates = collections.defaultdict(collections.Counter)
    for _ in xrange(int(job["job options"]["numjobs"])):
      clat_hist_idx += 1
      hist_file_path = vm_util.PrependTempDir(
          "%s_clat_hist.%s.log" % (log_file_base, str(clat_hist_idx)))
      hists = _ParseHistogram(hist_file_path, bin_vals[clat_hist_idx - 1])
      for key in hists:
        aggregates[key].update(hists[key])
    samples += _BuildHistogramSamples(aggregates, job_name, parameters)

return samples
```
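The after-change version replaces the per-job index `idx + 1` with a running counter and sums the histograms of all `numjobs` clones of a job with `collections.defaultdict(collections.Counter)`. The sketch below isolates those two ideas under stated assumptions: histograms are in-memory `{bin: count}` dicts rather than parsed log files, the key `"read"` is made up, and `hist_file_names` and `aggregate_histograms` are hypothetical helpers, not PKB functions. The file numbering mirrors what the after-change code assumes: one histogram log per clone, numbered consecutively across all jobs.

```python
import collections


def hist_file_names(log_file_base, numjobs_per_job):
  """Numbers one histogram log per clone, consecutively across all jobs."""
  names, clat_hist_idx = [], 0
  for numjobs in numjobs_per_job:
    job_files = []
    for _ in range(numjobs):
      clat_hist_idx += 1  # running counter, not the per-job index
      job_files.append("%s_clat_hist.%s.log" % (log_file_base, clat_hist_idx))
    names.append(job_files)
  return names


def aggregate_histograms(per_clone_hists):
  """Sums per-clone histograms into one Counter per key."""
  # A missing key starts as an empty Counter, so no initialization is needed.
  aggregates = collections.defaultdict(collections.Counter)
  for hists in per_clone_hists:
    for key in hists:
      # Counter.update adds counts instead of replacing them.
      aggregates[key].update(hists[key])
  return aggregates


names = hist_file_names("fio", [2, 1])
print(names)
# [['fio_clat_hist.1.log', 'fio_clat_hist.2.log'], ['fio_clat_hist.3.log']]
aggs = aggregate_histograms([{"read": {100: 3, 200: 1}},
                             {"read": {100: 2, 400: 5}}])
print(dict(aggs["read"]))  # {100: 5, 200: 1, 400: 5}
```

Using `Counter.update` rather than `dict.update` is what makes the merge additive: bin `100` ends up with 5 samples instead of the last clone's 2.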

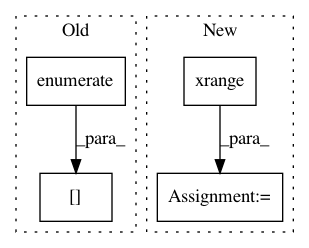

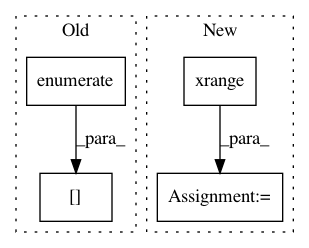

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: GoogleCloudPlatform/PerfKitBenchmarker

Commit Name: 87d1b5356187fb4a35aa11204223ed2c6586268d

Time: 2017-10-04

Author: dlott@users.noreply.github.com

File Name: perfkitbenchmarker/linux_packages/fio.py

Class Name:

Method Name: ParseResults

Project Name: UFAL-DSG/tgen

Commit Name: bebaba130ab074963772235c9b775ad1a7654f6a

Time: 2014-08-26

Author: odusek@ufal.mff.cuni.cz

File Name: tgen/features.py

Class Name:

Method Name: presence

Project Name: AIRLab-POLIMI/mushroom

Commit Name: 2f14c7ca7ccecc9f8a7d1c9cd86b045c6527891c

Time: 2017-06-27

Author: carlo.deramo@gmail.com

File Name: PyPi/approximators/tabular.py

Class Name: Tabular

Method Name: fit