16a31e2c9fedc654e9117b42b8144adf1d0e4900,examples/reinforcement_learning/tutorial_A3C.py,,,#,259

Before Change

import matplotlib.pyplot as plt
plt.plot(GLOBAL_RUNNING_R)
plt.xlabel("episode")
plt.ylabel("global running reward")
plt.savefig("a3c.png")
plt.show()
GLOBAL_AC.save_ckpt()

After Change

GLOBAL_AC.save()
plt.plot(GLOBAL_RUNNING_R)
if not os.path.exists("image"):
    os.makedirs("image")
plt.savefig(os.path.join("image", "a3c.png"))

if args.test:
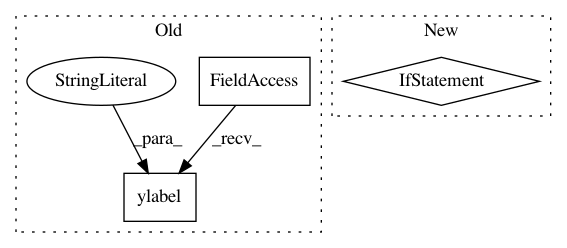

    # ============================= EVALUATION =============================

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances:

Project Name: tensorlayer/tensorlayer

Commit Name: 16a31e2c9fedc654e9117b42b8144adf1d0e4900

Time: 2020-02-03

Author: 34995488+Tokarev-TT-33@users.noreply.github.com

File Name: examples/reinforcement_learning/tutorial_A3C.py

Class Name:

Method Name:

Project Name: scikit-optimize/scikit-optimize

Commit Name: 4a8d1ffcf12287d632fb25ef484344d68d987818

Time: 2016-07-20

Author: g.louppe@gmail.com

File Name: skopt/plots.py

Class Name:

Method Name: plot_convergence

Project Name: neurodsp-tools/neurodsp

Commit Name: 5196910e726c04648f5cffae5f50ecd3171539ce

Time: 2019-03-17

Author: tdonoghue@ucsd.edu

File Name: neurodsp/plts/filt.py

Class Name:

Method Name: plot_frequency_response
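The After Change code in tutorial_A3C.py creates the `image` directory before calling `plt.savefig`. The sketch below wraps that idiom in a small helper, assuming a headless run with Matplotlib's Agg backend; `save_reward_curve` and its parameters are hypothetical names, not part of the tutorial. It swaps the `os.path.exists` check for `os.makedirs(..., exist_ok=True)`, which also avoids a race if something else creates the directory between the check and the call.

```python
import os
import tempfile

import matplotlib

matplotlib.use("Agg")  # non-interactive backend: write files, never open a window
import matplotlib.pyplot as plt


def save_reward_curve(rewards, out_dir="image", filename="a3c.png"):
    """Plot `rewards` and save the figure as out_dir/filename.

    Hypothetical helper for illustration; not part of the tensorlayer tutorial.
    """
    # Create the directory (and any missing parents); exist_ok=True means an
    # existing directory is not an error, so no separate os.path.exists check.
    os.makedirs(out_dir, exist_ok=True)
    fig, ax = plt.subplots()
    ax.plot(rewards)
    path = os.path.join(out_dir, filename)
    fig.savefig(path)
    plt.close(fig)  # release the figure instead of calling plt.show()
    return path


if __name__ == "__main__":
    # Write into a temporary directory so the demo leaves nothing behind.
    with tempfile.TemporaryDirectory() as tmp:
        out = save_reward_curve([0.0, 1.5, 3.2, 4.0], out_dir=os.path.join(tmp, "image"))
        print(os.path.isfile(out))  # True
```

Calling the helper a second time with the same `out_dir` succeeds without error, which is the case the `exists` check in the tutorial guards against.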