9381164a1f996f4a5533f4834db8dd708a84c6f8,rnns/benchmarks/lstm_variants/lstm.py,LSTM,__init__,#LSTM#Any#Any#Any#Any#Any#,113

Before Change

```python
self.bias = bias
self.dropout = dropout
self.i2h = nn.Linear(input_size, 4 * hidden_size, bias=bias)
self.h2h = nn.Linear(hidden_size, 4 * hidden_size, bias=bias)
self.reset_parameters()
assert(dropout_method.lower() in ["pytorch", "gal", "moon", "semeniuta"])
self.dropout_method = dropout_method
```
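In the Before Change code, `i2h` and `h2h` each emit `4 * hidden_size` values: the usual fused layout in which one projection produces the pre-activations of all four LSTM gates. The dependency-free sketch below illustrates one cell step under that layout. It assumes the gate order input, forget, candidate, output, because the excerpt does not show how `forward` splits the gates. The helper names `linear`, `sigmoid`, and `lstm_step` are hypothetical, and the weight initialisation mirrors the `reset_parameters` shown under After Change.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row per output unit


def linear(weight: Matrix, bias: Vector, x: Vector) -> Vector:
    # y = W x + b for a single example (what nn.Linear computes per batch row)
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: Vector, h: Vector, c: Vector,
              w_ih: Matrix, b_ih: Vector,
              w_hh: Matrix, b_hh: Vector) -> Tuple[Vector, Vector]:
    n = len(h)  # hidden_size
    # Fused pre-activations i2h(x) + h2h(h): 4 * hidden_size values in total.
    gates = [a + b for a, b in zip(linear(w_ih, b_ih, x), linear(w_hh, b_hh, h))]
    # Assumed gate order (input, forget, candidate, output); not shown in the source.
    i = [sigmoid(z) for z in gates[0:n]]
    f = [sigmoid(z) for z in gates[n:2 * n]]
    g = [math.tanh(z) for z in gates[2 * n:3 * n]]
    o = [sigmoid(z) for z in gates[3 * n:4 * n]]
    c_next = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_next = [ok * math.tanh(ck) for ok, ck in zip(o, c_next)]
    return h_next, c_next


# Usage: input_size=3, hidden_size=2, weights drawn from U(-std, std), std = 1/sqrt(hidden_size).
rng = random.Random(0)
input_size, hidden_size = 3, 2
std = 1.0 / math.sqrt(hidden_size)
w_ih = [[rng.uniform(-std, std) for _ in range(input_size)] for _ in range(4 * hidden_size)]
w_hh = [[rng.uniform(-std, std) for _ in range(hidden_size)] for _ in range(4 * hidden_size)]
b_ih = [rng.uniform(-std, std) for _ in range(4 * hidden_size)]
b_hh = [rng.uniform(-std, std) for _ in range(4 * hidden_size)]

h1, c1 = lstm_step([1.0, 0.5, -0.5], [0.0] * hidden_size, [0.0] * hidden_size,
                   w_ih, b_ih, w_hh, b_hh)
```

Fusing the four gates into two matrix products is what lets the original code use just two `nn.Linear` layers instead of eight.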

After Change

```python
keep = 1.0 - self.dropout
self.mask = V(th.bernoulli(T(1, self.hidden_size).fill_(keep)))

def reset_parameters(self):
    std = 1.0 / math.sqrt(self.hidden_size)
    for w in self.parameters():
        w.data.uniform_(-std, std)

def forward(self, x, hidden):
    do_dropout = self.training and self.dropout > 0.0
    h, c = hidden
    h = h.view(h.size(1), -1)
```
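The After Change code draws a `(1, hidden_size)` mask whose entries are 1 with probability `keep = 1 - dropout`, and `reset_parameters` fills every parameter from U(-1/sqrt(hidden_size), 1/sqrt(hidden_size)). The stdlib-only sketch below reproduces both computations. The helper names `sample_mask`, `uniform_init`, and `apply_mask` are hypothetical. In particular, `apply_mask` is an assumed usage: the excerpt does not show where the mask is applied. Storing one mask on the module and reusing it across time steps would match the "gal" (variational) option listed in the Before Change assertion, but that connection is also an assumption.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def sample_mask(hidden_size: int, dropout: float, rng: random.Random) -> Matrix:
    # Shape (1, hidden_size), like th.bernoulli(T(1, hidden_size).fill_(keep)).
    keep = 1.0 - dropout
    # Each unit is kept (1.0) with probability `keep`, dropped (0.0) otherwise.
    return [[1.0 if rng.random() < keep else 0.0 for _ in range(hidden_size)]]


def uniform_init(rows: int, cols: int, hidden_size: int, rng: random.Random) -> Matrix:
    # Same range as reset_parameters: U(-std, std) with std = 1 / sqrt(hidden_size).
    std = 1.0 / math.sqrt(hidden_size)
    return [[rng.uniform(-std, std) for _ in range(cols)] for _ in range(rows)]


def apply_mask(h: List[float], mask: Matrix) -> List[float]:
    # Hypothetical usage: zero the dropped hidden units of a single example.
    return [hk * mk for hk, mk in zip(h, mask[0])]


rng = random.Random(42)
hidden_size = 4
mask = sample_mask(hidden_size, dropout=0.25, rng=rng)
weights = uniform_init(4 * hidden_size, hidden_size, hidden_size, rng)  # h2h weight shape
h_dropped = apply_mask([0.5, -0.2, 0.1, 0.9], mask)
```

In current PyTorch, an equivalent mask can be drawn with `torch.bernoulli(torch.full((1, hidden_size), keep))`. `V` and `T` in the snippet look like aliases for `torch.autograd.Variable` and a tensor constructor, but their imports are not shown. The excerpt also does not show whether kept units are rescaled by `1 / keep`.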

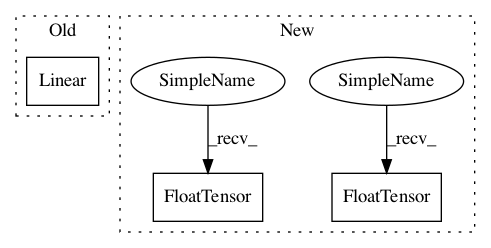

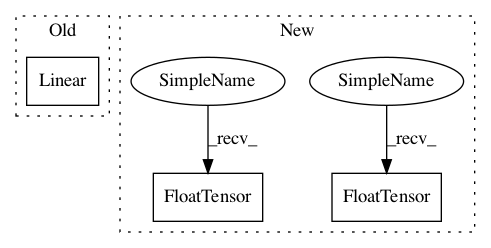

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: pytorch/benchmark

Commit Name: 9381164a1f996f4a5533f4834db8dd708a84c6f8

Time: 2018-05-30

Author: zou3519@gmail.com

File Name: rnns/benchmarks/lstm_variants/lstm.py

Class Name: LSTM

Method Name: __init__

Project Name: jadore801120/attention-is-all-you-need-pytorch

Commit Name: 144902aa6fc57cfa294db487f796dad600210d5e

Time: 2017-06-22

Author: jadore801120@gmail.com

File Name: transformer/SubLayers.py

Class Name: MultiHeadAttention

Method Name: __init__

Project Name: allenai/allennlp

Commit Name: a8f7adae8546cfac4473bd514b0070367d725f2e

Time: 2018-05-13

Author: pradeep.dasigi@gmail.com

File Name: allennlp/models/semantic_parsing/wikitables/wikitables_decoder_step.py

Class Name: WikiTablesDecoderStep

Method Name: __init__
