46350367a2cda5204cb37f2289021d14064d2d7d,tf_agents/agents/ddpg/examples/train_eval_mujoco.py,,train_eval,#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,63

Before Change

target_update_period=target_update_period,

actor_optimizer=tf.train.AdamOptimizer(

learning_rate=actor_learning_rate),

critic_optimizer=tf.train.AdamOptimizer(

learning_rate=critic_learning_rate),

train_batch_size=batch_size,

dqda_clipping=dqda_clipping,

td_errors_loss_fn=td_errors_loss_fn,

After Change

critic_net=critic_net,

actor_optimizer=tf.train.AdamOptimizer(

learning_rate=actor_learning_rate),

critic_optimizer=tf.train.AdamOptimizer(

learning_rate=critic_learning_rate),

ou_stddev=ou_stddev,

ou_damping=ou_damping,

target_update_tau=target_update_tau,

target_update_period=target_update_period,

dqda_clipping=dqda_clipping,

td_errors_loss_fn=td_errors_loss_fn,

gamma=gamma,

reward_scale_factor=reward_scale_factor,

gradient_clipping=gradient_clipping,

debug_summaries=debug_summaries,

summarize_grads_and_vars=summarize_grads_and_vars)

replay_buffer = batched_replay_buffer.BatchedReplayBuffer(

tf_agent.collect_data_spec(),

batch_size=tf_env.batch_size,

max_length=replay_buffer_capacity)

eval_py_policy = py_tf_policy.PyTFPolicy(tf_agent.policy())

train_metrics = [

tf_metrics.NumberOfEpisodes(),

tf_metrics.EnvironmentSteps(),

tf_metrics.AverageReturnMetric(),

tf_metrics.AverageEpisodeLengthMetric(),

]

global_step = tf.train.get_or_create_global_step()

collect_policy = tf_agent.collect_policy()

initial_collect_op = dynamic_step_driver.DynamicStepDriver(

tf_env,

collect_policy,

observers=[replay_buffer.add_batch],

num_steps=initial_collect_steps).run()

collect_op = dynamic_step_driver.DynamicStepDriver(

tf_env,

collect_policy,

observers=[replay_buffer.add_batch] + train_metrics,

num_steps=collect_steps_per_iteration).run()

# Dataset generates trajectories with shape [Bx2x...]

dataset = replay_buffer.as_dataset(

num_parallel_calls=3,

sample_batch_size=batch_size,

num_steps=2,

time_stacked=True).prefetch(3)

iterator = dataset.make_initializable_iterator()

trajectories, unused_ids, unused_probs = iterator.get_next()

train_op = tf_agent.train(

experience=trajectories, train_step_counter=global_step)

train_checkpointer = common_utils.Checkpointer(

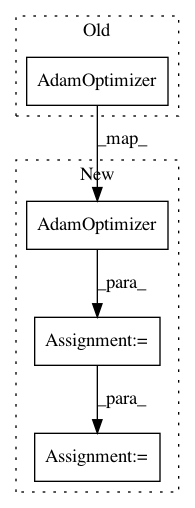

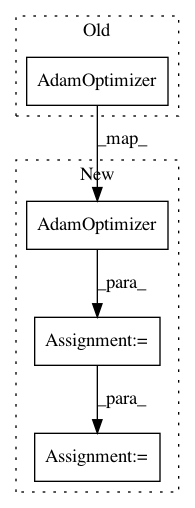
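The After Change snippet passes `target_update_tau` and `target_update_period` to the agent. The record does not show how the agent uses them. As a minimal sketch, assuming they follow the usual DDPG convention (every `period` training steps, target weights move a fraction `tau` toward the online weights), the idea could look like this. Plain Python lists stand in for network variables, and `soft_update` and `PeriodicTargetUpdater` are hypothetical names for illustration, not TF-Agents API.

```python
def soft_update(source, target, tau):
    """Return target weights moved a fraction `tau` toward the source weights."""
    if not 0.0 <= tau <= 1.0:
        raise ValueError("tau must be in [0, 1]")
    # Element-wise blend: tau=0 keeps the target, tau=1 copies the source.
    return [(1.0 - tau) * t + tau * s for s, t in zip(source, target)]


class PeriodicTargetUpdater:
    """Hypothetical helper: applies soft_update once every `period` calls."""

    def __init__(self, tau, period):
        self.tau = tau
        self.period = period
        self.calls = 0

    def step(self, source, target):
        self.calls += 1
        if self.calls % self.period == 0:
            return soft_update(source, target, self.tau)
        # Off-period calls leave the target weights unchanged.
        return list(target)


updater = PeriodicTargetUpdater(tau=0.5, period=2)
source = [1.0, 2.0]
target = [0.0, 0.0]
target = updater.step(source, target)  # call 1: skipped, period not reached
target = updater.step(source, target)  # call 2: blended halfway toward source
print(target)
```

A small `tau` with a period of 1 and a large `tau` with a long period both slow down how quickly the target tracks the online weights. Which combination the example script uses depends on the argument values, which are not part of this record.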

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

Project Name: tensorflow/agents

Commit Name: 46350367a2cda5204cb37f2289021d14064d2d7d

Time: 2018-11-12

Author: kbanoop@google.com

File Name: tf_agents/agents/ddpg/examples/train_eval_mujoco.py

Class Name:

Method Name: train_eval

Project Name: p2irc/deepplantphenomics

Commit Name: c4225216a131206747cdf5ca05cb1d4ef6fa3ac9

Time: 2018-05-22

Author: nicoreekohiggs@gmail.com

File Name: deepplantphenomics/deepplantpheno.py

Class Name: DPPModel

Method Name: __assemble_graph

Project Name: freelunchtheorem/Conditional_Density_Estimation

Commit Name: 3a743eaf8272183950436bee35019b169e87e84b

Time: 2019-05-02

Author: simonboehm@mailbox.org

File Name: cde/density_estimator/NF.py

Class Name: NormalizingFlowEstimator

Method Name: _build_model

Project Name: HyperGAN/HyperGAN

Commit Name: 9d6d46dd83f16ea0df9e084f970cda1ce9132757

Time: 2016-10-22

Author: martyn@255bits.com

File Name: lib/trainers/slowdown_trainer.py

Class Name:

Method Name: initialize