875a33806acea37f602d0ad20fb77cd42432bbb6,scripts/tokenize/tokenize_pad.py,,,#,6

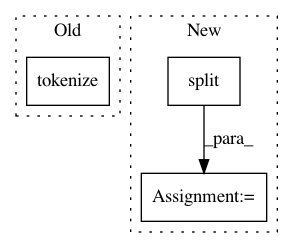

Before Change

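```python
# rcParams and Wordlist come from lingpy (the import line is not shown)
rcParams["debug"] = True  # turn on lingpy's debug setting
wl = Wordlist("pad_data_qlc.qlc")  # load the PAD data stored in QLC format
wl.tokenize("pad_orthography_profile")  # tokenize the entries with the orthography profile
wl.output("qlc", filename="tokenized-pad_data")  # write the tokenized wordlist back out as QLC
```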

After Change

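```python
# infile (an open tab-separated file) and t (a tokenizer object) are
# defined elsewhere in the script; they are not shown here.
print("ID" + "\t" + "ORIGINAL" + "\t" + "RULES")  # header row
for line in infile:
    line = line.strip()
    tokens = line.split("\t")
    id = tokens[0]
    counterpart = tokens[2]  # third column holds the form to tokenize
    grapheme_clusters = t.grapheme_clusters(counterpart)
    rules = t.rules(grapheme_clusters)
    print(id + "\t" + counterpart + "\t" + rules)
```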
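The After Change loop depends on a tokenizer `t` and an open file `infile` that the snippet does not show. Below is a rough, self-contained sketch of the same flow under stated assumptions. The helpers `grapheme_clusters`, `apply_rules` and `tokenize_table` are hypothetical stand-ins and do not reproduce lingpy's implementation:

- `grapheme_clusters` only attaches Unicode combining marks to the preceding base character. This is a simplification of full extended grapheme clusters.
- `apply_rules` is a plain segment-to-segment lookup table. It is not an orthography-profile rule set.

```python
import io
import unicodedata


def grapheme_clusters(text):
    """Hypothetical stand-in: attach each combining mark to the preceding
    character and return the clusters separated by spaces."""
    clusters = []
    for ch in text:
        if clusters and unicodedata.combining(ch):
            clusters[-1] += ch  # combining mark joins the previous cluster
        else:
            clusters.append(ch)  # any other character starts a new cluster
    return " ".join(clusters)


def apply_rules(segmented, rules):
    """Hypothetical stand-in: replace whole space-separated segments
    using a simple lookup table; unknown segments pass through."""
    return " ".join(rules.get(seg, seg) for seg in segmented.split(" "))


def tokenize_table(infile, rules):
    """Mirror the After Change loop: take the ID (first column) and the
    counterpart (third column) of each tab-separated line and build rows."""
    rows = ["ID\tORIGINAL\tRULES"]
    for line in infile:
        line = line.rstrip("\r\n")
        tokens = line.split("\t")
        if len(tokens) < 3:
            continue  # skip blank or malformed lines instead of raising
        ident, counterpart = tokens[0], tokens[2]
        clusters = grapheme_clusters(counterpart)
        rows.append(ident + "\t" + counterpart + "\t" + apply_rules(clusters, rules))
    return rows


if __name__ == "__main__":
    data = io.StringIO("1\tHAND\tha\u0303nd\n2\tFOOT\tfut\n")
    for row in tokenize_table(data, {"u": "\u028a"}):
        print(row)
```

Returning the rows instead of printing them inside the loop keeps the logic testable. The sketch also skips lines with fewer than three columns, whereas the original would raise an `IndexError` on such lines.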

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: lingpy/lingpy

Commit Name: 875a33806acea37f602d0ad20fb77cd42432bbb6

Time: 2013-11-08

Author: bambooforest@gmail.com

File Name: scripts/tokenize/tokenize_pad.py

Class Name:

Method Name:

Project Name: gooofy/zamia-speech

Commit Name: 9f376975884e7a0d7a553dcdfa1ab54b66ddbb1f

Time: 2018-12-10

Author: guenter@zamia.org

File Name: speech_editor.py

Class Name:

Method Name:

Project Name: PyThaiNLP/pythainlp

Commit Name: 7adc2ea7ec11cf4376551a9395bccf20d9013f20

Time: 2019-09-01

Author: supaseth@gmail.com

File Name: pythainlp/tokenize/__init__.py

Class Name:

Method Name: sent_tokenize