73844a5b82ca5a3dd9837a3fbed434b06af360bd,thinc/layers/lstm.py,,forward,#Any#Any#Any#,83

Before Change

```python
def forward(
    model: Model[RNNState, RNNState], prevstate_inputs: RNNState, is_train: bool
) -> Tuple[RNNState, Callable]:
    (cell_tm1, hidden_tm1), inputs = prevstate_inputs
    weights = model.layers[0]
    W = weights.get_param("W")
    b = weights.get_param("b")
    nI = inputs.shape[1]
    hiddens, cells, gates = model.ops.lstm(W, b, cell_tm1, hidden_tm1, inputs)
    # ... (remainder of forward() not shown in this excerpt)
```
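In the Before Change code, `model.ops.lstm` takes the weights, the previous cell and hidden state, and the inputs for one step, and returns hiddens, cells and gates. To make that single step concrete, here is a minimal NumPy sketch of a standard LSTM step. It is not thinc's implementation: the weight layout `(4 * nH, nH + nI)`, the gate order (input, forget, output, candidate) and the name `lstm_step` are assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(W, b, cell_tm1, hidden_tm1, inputs):
    """One LSTM time step for a batch (hypothetical layout, not thinc's).

    Assumed shapes:
      W: (4 * nH, nH + nI), b: (4 * nH,)
      cell_tm1, hidden_tm1: (nB, nH); inputs: (nB, nI)
    Assumed gate order: input, forget, output, candidate.
    """
    nH = hidden_tm1.shape[1]
    # Concatenate the previous hidden state with the current input and project.
    x = np.hstack([hidden_tm1, inputs])
    acts = x @ W.T + b  # (nB, 4 * nH)
    i = sigmoid(acts[:, 0 * nH:1 * nH])
    f = sigmoid(acts[:, 1 * nH:2 * nH])
    o = sigmoid(acts[:, 2 * nH:3 * nH])
    c_hat = np.tanh(acts[:, 3 * nH:4 * nH])
    # New cell: keep part of the old cell, add part of the candidate.
    cell = f * cell_tm1 + i * c_hat
    hidden = o * np.tanh(cell)
    gates = np.hstack([i, f, o, c_hat])
    return hidden, cell, gates
```

With all-zero weights and bias every gate outputs 0.5 and the candidate is 0, so the new cell is half the previous cell; this is a handy sanity check for the sketch, not a statement about thinc's numerics.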

After Change

```python
    # ... (earlier lines of forward() not shown in this excerpt)
    hiddens += h
    cells += c
    Y, cells, gates = model.ops.recurrent_lstm(W, b, hiddens, cells, X)
    Yp = Padded(Y, Xp.size_at_t, Xp.lengths, Xp.indices)

    def backprop(dYp: Padded) -> Padded:
        raise NotImplementedError
```
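The After Change code replaces the per-step call with `model.ops.recurrent_lstm` over a whole `Padded` batch and builds the output from `Y` plus the input's `size_at_t`, `lengths` and `indices`. The sketch below shows one way a recurrence over such a padded batch can work, assuming the sequences are sorted longest-first so that the rows still active at time `t` are exactly the first `size_at_t[t]` rows. `PaddedBatch` and `run_recurrent` are hypothetical stand-ins, not thinc's `Padded` or `recurrent_lstm`, and the broadcast of an initial state `h0`/`c0` is only an assumed reading of the `hiddens += h` and `cells += c` lines.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class PaddedBatch:
    """Hypothetical stand-in for a padded batch (not thinc's class).

    data: (nT, nB, nI) array, sequences sorted by length, longest first.
    size_at_t: number of sequences still active at each time step.
    lengths: length of each sequence, in sorted order.
    indices: original position of each sorted sequence.
    """
    data: np.ndarray
    size_at_t: np.ndarray
    lengths: np.ndarray
    indices: np.ndarray


def run_recurrent(step, h0, c0, Xp: PaddedBatch) -> PaddedBatch:
    """Apply step(hidden, cell, x) -> (hidden, cell) over time.

    Only the active rows are updated at each t; padded positions in the
    output stay zero.
    """
    nT, nB, _ = Xp.data.shape
    nH = h0.shape[-1]
    # Broadcast the (nH,) initial state to every sequence in the batch.
    hidden = np.zeros((nB, nH)) + h0
    cell = np.zeros((nB, nH)) + c0
    Y = np.zeros((nT, nB, nH))
    for t in range(nT):
        n = int(Xp.size_at_t[t])  # active sequences occupy the first n rows
        hidden[:n], cell[:n] = step(hidden[:n], cell[:n], Xp.data[t, :n])
        Y[t, :n] = hidden[:n]
    # Reuse the input's bookkeeping arrays, as the After Change code does.
    return PaddedBatch(Y, Xp.size_at_t, Xp.lengths, Xp.indices)
```

Sorting longest-first is what makes slicing `[:n]` sufficient: a sequence that has ended never sits between two active ones, so each step is a single contiguous batched operation.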

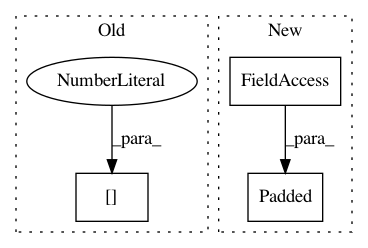

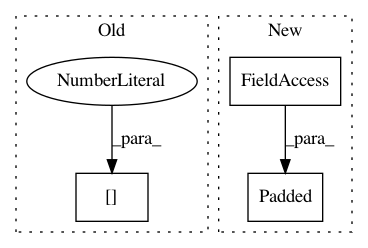

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: explosion/thinc

Commit Name: 73844a5b82ca5a3dd9837a3fbed434b06af360bd

Time: 2020-01-18

Author: honnibal+gh@gmail.com

File Name: thinc/layers/lstm.py

Class Name:

Method Name: forward

Project Name: explosion/thinc

Commit Name: f18edb70716b0262c211f8a8e0f6db531c1b8d55

Time: 2020-01-05

Author: honnibal+gh@gmail.com

File Name: thinc/layers/residual.py

Class Name:

Method Name: forward

Project Name: explosion/thinc

Commit Name: 504166f623fee9ac5004369489c39838c875eab9

Time: 2020-01-04

Author: honnibal+gh@gmail.com

File Name: thinc/layers/bidirectional.py

Class Name:

Method Name: _concatenate