31804a4b46fca8a11e23e22b7d82d10e6cabf582,gpytorch/lazy/cached_cg_lazy_tensor.py,CachedCGLazyTensor,__init__,#CachedCGLazyTensor#Any#Any#Any#,19

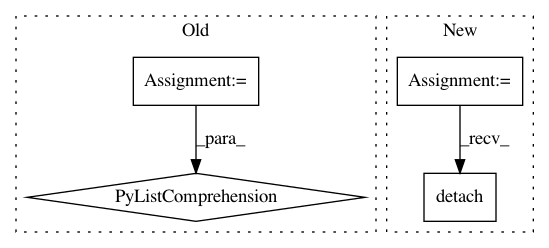

Before Change

# This will make it faster when we reconstruct the LazyTensor inside functions
with torch.no_grad():
    if solves is None:
        solves = [
            base_lazy_tensor._solve(eager_rhs, base_lazy_tensor._preconditioner()[0])
            for eager_rhs in eager_rhss
        ]
super(CachedCGLazyTensor, self).__init__(
    base_lazy_tensor, eager_rhss=eager_rhss, solves=solves,
)

After Change

        all_solves = base_lazy_tensor._solve(torch.cat(eager_rhss, -1), base_lazy_tensor._preconditioner()[0])
        solves = []
        for eager_rhs in eager_rhss:
            solve = all_solves[..., :eager_rhs.size(-1)]
            all_solves = all_solves[..., eager_rhs.size(-1):]
            solves.append(solve.detach())  # The detach is necessary here for some reason?
super(CachedCGLazyTensor, self).__init__(
    base_lazy_tensor, eager_rhss=eager_rhss, solves=solves,
)

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4
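The After Change code replaces one `_solve` call per right-hand side with a single call on `torch.cat(eager_rhss, -1)`, then slices the result back into per-right-hand-side pieces. Below is a minimal sketch of that concatenate-solve-split idea, assuming NumPy as a stand-in: `np.linalg.solve` (a dense direct solve) replaces gpytorch's `base_lazy_tensor._solve`, no preconditioner is used, and `batched_solve` and `looped_solve` are hypothetical helper names. It checks that the batched result matches solving each right-hand side separately, as the Before Change code does.

```python
import numpy as np


def batched_solve(matrix, rhss):
    """Solve matrix @ x = rhs for every rhs in `rhss` with one solve call.

    Mirrors the After Change pattern: concatenate the right-hand sides along
    the last (column) axis, solve once, then slice the result back apart.
    """
    all_solves = np.linalg.solve(matrix, np.concatenate(rhss, axis=-1))
    solves = []
    for rhs in rhss:
        width = rhs.shape[-1]
        solves.append(all_solves[..., :width])  # columns belonging to this rhs
        all_solves = all_solves[..., width:]    # drop them before the next rhs
    return solves


def looped_solve(matrix, rhss):
    """The Before Change pattern: one solve call per right-hand side."""
    return [np.linalg.solve(matrix, rhs) for rhs in rhss]


rng = np.random.default_rng(0)
a = rng.standard_normal((5, 5))
spd = a @ a.T + 5 * np.eye(5)  # symmetric positive definite, like a kernel matrix
rhss = [rng.standard_normal((5, 1)), rng.standard_normal((5, 3))]

batched = batched_solve(spd, rhss)
looped = looped_solve(spd, rhss)
for b, l in zip(batched, looped):
    print(b.shape, np.allclose(b, l))  # (5, 1) True, then (5, 3) True
```

With a direct solver, batching lets one LU factorization serve every column. For an iterative solver such as the conjugate-gradients routine the class name suggests, the plausible gain is running all columns through one set of iterations instead of one set per right-hand side; the commit itself does not state the motivation.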

Instances:

Project Name: cornellius-gp/gpytorch

Commit Name: 31804a4b46fca8a11e23e22b7d82d10e6cabf582

Time: 2019-01-02

Author: gpleiss@gmail.com

File Name: gpytorch/lazy/cached_cg_lazy_tensor.py

Class Name: CachedCGLazyTensor

Method Name: __init__

Project Name: tyarkoni/pliers

Commit Name: 9f53f2e790fba4a4411224e6255748a45099083a

Time: 2021-01-05

Author: rbrrcc@gmail.com

File Name: pliers/extractors/text.py

Class Name: BertSequenceEncodingExtractor

Method Name: _postprocess

Project Name: OpenNMT/OpenNMT-py

Commit Name: b40c5085bfd8f46a7bfca10b73f91b55a353c918

Time: 2019-01-29

Author: benzurdopeters@gmail.com

File Name: onmt/decoders/decoder.py

Class Name: RNNDecoderBase

Method Name: detach_state
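In the gpytorch instance, the After Change code calls `solve.detach()`, and its comment asks why that is needed. The sketch below does not answer that question for gpytorch; it only shows, under plain PyTorch with a hypothetical toy computation, what `Tensor.detach()` and `torch.no_grad()` each do to a tensor's autograd tracking, which is the behavior that comment is about.

```python
import torch

# A leaf tensor that autograd tracks.
x = torch.randn(3, requires_grad=True)

# A normal computation is recorded in the autograd graph.
y = x * 2
print(y.requires_grad, y.grad_fn is not None)  # True True

# .detach() returns a tensor with the same values but no grad_fn,
# so holding on to it does not keep y's autograd history alive.
cached = y.detach()
print(cached.requires_grad, cached.grad_fn is None)  # False True
print(torch.equal(cached, y))  # True

# Under torch.no_grad(), results are never recorded in the first place.
with torch.no_grad():
    z = x * 2
print(z.requires_grad)  # False
```

Both mechanisms appear in the gpytorch fragment: the Before Change code wraps the solve in `torch.no_grad()`, and the After Change code adds an explicit `detach()` on each slice of `all_solves`.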