783e415c29c4a4124ea426c824864a32b0f8ee71,src/garage/tf/policies/gaussian_mlp_policy.py,GaussianMLPPolicy,get_action,#GaussianMLPPolicy#Any#,210

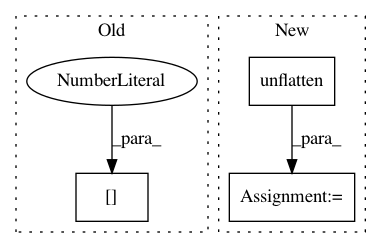

Before Change

@overrides
def get_action(self, observation):
    flat_obs = self.observation_space.flatten(observation)
    mean, log_std = [x[0] for x in self._f_dist([flat_obs])]
    rnd = np.random.normal(size=mean.shape)
    action = rnd * np.exp(log_std) + mean
    return action, dict(mean=mean, log_std=log_std)
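In the "before" version the batch dimension is stripped first (`x[0]`), and a reparameterized Gaussian sample `mean + exp(log_std) * eps` is drawn on the unbatched vectors. The sketch below reproduces that flow outside the class. `f_dist` is a hypothetical stand-in for the compiled `self._f_dist`, assumed to return batched `(mean, log_std)` arrays of shape `(batch, action_dim)`; `ACTION_DIM` and the seeded `rng` are also assumptions added for illustration (the original uses the global `np.random.normal`).

```python
import numpy as np

ACTION_DIM = 2  # hypothetical action dimensionality


def f_dist(batch_obs):
    """Hypothetical stand-in for `self._f_dist`.

    Returns batched (mean, log_std) arrays of shape (batch, ACTION_DIM).
    """
    batch = len(batch_obs)
    mean = np.zeros((batch, ACTION_DIM))
    log_std = np.full((batch, ACTION_DIM), np.log(0.5))
    return mean, log_std


def get_action_before(flat_obs, rng):
    # Strip the batch dimension first: x[0] picks the single row.
    mean, log_std = [x[0] for x in f_dist([flat_obs])]
    # Reparameterized Gaussian sample: mean + std * standard normal noise.
    rnd = rng.normal(size=mean.shape)
    action = rnd * np.exp(log_std) + mean
    return action, dict(mean=mean, log_std=log_std)


action, info = get_action_before(np.zeros(3), np.random.default_rng(0))
print(action.shape)  # (2,)
```

Because the row is selected before sampling, the returned action is always a flat 1-D vector, regardless of the shape the action space expects.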

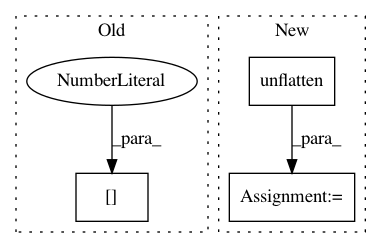

After Change

mean, log_std = self._f_dist([flat_obs])
rnd = np.random.normal(size=mean.shape)
sample = rnd * np.exp(log_std) + mean
sample = self.action_space.unflatten(sample[0])
mean = self.action_space.unflatten(mean[0])
log_std = self.action_space.unflatten(log_std[0])
return sample, dict(mean=mean, log_std=log_std)
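In the "after" version sampling happens on the batched arrays, and only then is row 0 selected and passed through `action_space.unflatten`, so the sample, mean and log-std all come back in the action space's own shape. The sketch below illustrates this under stated assumptions: `BoxSpace` is a hypothetical minimal stand-in whose `unflatten` is assumed to behave like a reshape to the space's shape, and `f_dist` is again a hypothetical stand-in for `self._f_dist`.

```python
import numpy as np


class BoxSpace:
    """Hypothetical minimal stand-in for a Box action space.

    `unflatten` is assumed to reshape a flat vector to the space's shape.
    """

    def __init__(self, shape):
        self.shape = tuple(shape)

    def unflatten(self, x):
        return np.asarray(x).reshape(self.shape)


def f_dist(batch_obs):
    # Hypothetical policy head: batched (mean, log_std) of shape (batch, 4).
    batch = len(batch_obs)
    return np.ones((batch, 4)), np.zeros((batch, 4))


def get_action_after(flat_obs, action_space, rng):
    # Keep the batch dimension while sampling...
    mean, log_std = f_dist([flat_obs])
    rnd = rng.normal(size=mean.shape)
    sample = rnd * np.exp(log_std) + mean
    # ...then take row 0 and map each flat vector back into the action space.
    sample = action_space.unflatten(sample[0])
    mean = action_space.unflatten(mean[0])
    log_std = action_space.unflatten(log_std[0])
    return sample, dict(mean=mean, log_std=log_std)


space = BoxSpace((2, 2))
sample, info = get_action_after(np.zeros(3), space, np.random.default_rng(0))
print(sample.shape)  # (2, 2)
```

For a 1-D action space the reshape is a no-op, so the two versions return arrays of the same shape; the change only matters when the action space has a multi-dimensional shape.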

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| rlworkgroup/garage | 783e415c29c4a4124ea426c824864a32b0f8ee71 | 2019-09-05 | hegde.nishanth@gmail.com | src/garage/tf/policies/gaussian_mlp_policy.py | GaussianMLPPolicy | get_action |
| rlworkgroup/garage | 6c6331df007fb155331c2fac0107b412ab62d943 | 2019-09-21 | ahtsans@gmail.com | src/garage/tf/policies/continuous_mlp_policy.py | ContinuousMLPPolicy | get_action |
| tensorflow/agents | 150442111a9db973dcce7d20fb0cf386cebf60b0 | 2019-12-11 | ebrevdo@google.com | tf_agents/agents/ddpg/actor_network.py | ActorNetwork | call |