ff5da2b2bfd03234519927b966923e8baecf6616,slm_lab/agent/net/convnet.py,ConvNet,training_step,#ConvNet#Any#Any#,123

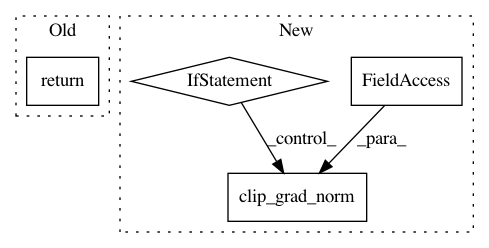

Before Change

        """
        Takes a single training step: one forwards and one backwards pass
        """
        return super(ConvNet, self).training_step(x, y)

    def eval(self, x):
        """
        Completes one feedforward step, ensuring net is set to evaluation model

After Change

        out = self(x)
        loss = self.loss_fn(out, y)
        loss.backward()
        if self.clamp_grad:
            torch.nn.utils.clip_grad_norm(
                self.conv_model.parameters(), self.clamp_grad_val)
            torch.nn.utils.clip_grad_norm(
                self.dense_model.parameters(), self.clamp_grad_val)
        self.optim.step()
        return loss

    def wrap_eval(self, x):

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: kengz/SLM-Lab

Commit Name: ff5da2b2bfd03234519927b966923e8baecf6616

Time: 2018-01-02

Author: lgraesser@users.noreply.github.com

File Name: slm_lab/agent/net/convnet.py

Class Name: ConvNet

Method Name: training_step

Project Name: OpenNMT/OpenNMT-py

Commit Name: 57dac10ec6b131842667bf58746168d9e99de9b3

Time: 2017-03-14

Author: bryan.mccann.is@gmail.com

File Name: onmt/Optim.py

Class Name: Optim

Method Name: step

Project Name: pytorch/examples

Commit Name: f5f63fb9c06cd626ff64a31b976e148c92ff99d1

Time: 2017-03-14

Author: bryan.mccann.is@gmail.com

File Name: OpenNMT/onmt/Optim.py

Class Name: Optim

Method Name: step
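The "After Change" snippet clips the gradient norm of `conv_model` and `dense_model` in two separate calls, so each parameter group is bounded by `clamp_grad_val` on its own rather than jointly. The following is a minimal pure-Python sketch of that behavior, not the library's implementation: the helper below only imitates norm clipping as it is commonly described (rescale when the L2 norm exceeds the limit), and the `eps` value, the list-of-lists gradient layout, and the sample numbers are all assumptions made for illustration.

```python
import math


def clip_grad_norm(grads, max_norm, eps=1e-6):
    """Rescale `grads` in place so their joint L2 norm is at most `max_norm`.

    `grads` is a list of lists of floats, one inner list per parameter
    (a hypothetical stand-in for parameter gradients).
    Returns the norm measured before any rescaling.
    """
    total_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    clip_coef = max_norm / (total_norm + eps)
    if clip_coef < 1.0:  # only shrink, never enlarge
        for grad in grads:
            for i in range(len(grad)):
                grad[i] *= clip_coef
    return total_norm


# Hypothetical gradients for the two parameter groups.
conv_grads = [[3.0, 4.0]]    # L2 norm 5.0, above the limit
dense_grads = [[0.3, 0.4]]   # L2 norm 0.5, below the limit

# One call per group, as in the snippet: each group is clipped independently.
conv_norm = clip_grad_norm(conv_grads, max_norm=1.0)
dense_norm = clip_grad_norm(dense_grads, max_norm=1.0)
```

With these sample values, only the convolutional group is rescaled; the dense group is already under the limit and is left unchanged. A single call over both groups together would instead measure one combined norm and scale every gradient by the same factor.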