b5f41f828b0ec9b67fa60aceb0778073d1b368b2,fairseq/distributed_utils.py,,distributed_init,#Any#,71

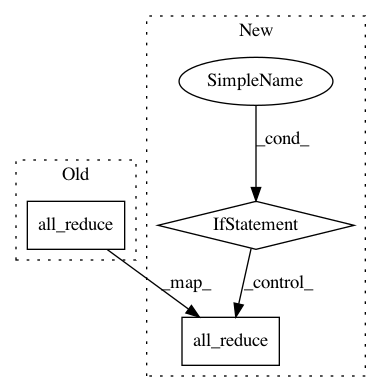

Before Change

```python
socket.gethostname(), args.distributed_rank), flush=True)

# perform a dummy all-reduce to initialize the NCCL communicator
dist.all_reduce(torch.zeros(1).cuda())

suppress_output(is_master(args))

args.distributed_rank = torch.distributed.get_rank()
```

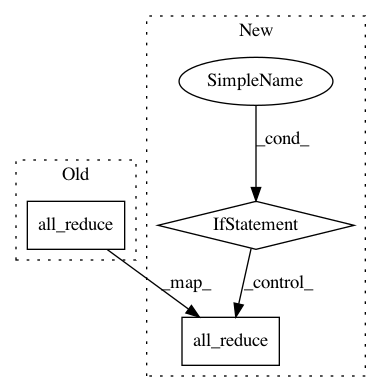

After Change

```python
socket.gethostname(), args.distributed_rank), flush=True)

# perform a dummy all-reduce to initialize the NCCL communicator
if torch.cuda.is_available():
    dist.all_reduce(torch.zeros(1).cuda())
else:
    dist.all_reduce(torch.zeros(1))

suppress_output(is_master(args))

args.distributed_rank = torch.distributed.get_rank()

return args.distributed_rank
```
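The change makes the warm-up collective device-agnostic. The NCCL backend only reduces CUDA tensors, so on a machine without a GPU (typically running the gloo backend) the dummy tensor has to stay on the CPU. A minimal sketch that factors this choice into a helper follows. It is an illustration, not fairseq's code: the names `warmup_device` and `warmup_collective` are hypothetical, PyTorch is assumed to be installed, and the process group is assumed to be initialized already.

```python
# Sketch only: function names are hypothetical and PyTorch is assumed to be installed.


def warmup_device(cuda_available: bool) -> str:
    """Return the device for the dummy tensor.

    NCCL only reduces CUDA tensors, so use the GPU when one is present;
    otherwise fall back to the CPU (e.g. for the gloo backend).
    """
    return "cuda" if cuda_available else "cpu"


def warmup_collective():
    """Run a throwaway all-reduce, mirroring the guarded call in distributed_init.

    Assumes torch.distributed is already initialized. Every rank contributes
    zeros, so the summed result is still zero and no real state changes.
    """
    # Imported lazily so warmup_device() stays usable without PyTorch.
    import torch
    import torch.distributed as dist

    device = torch.device(warmup_device(torch.cuda.is_available()))
    dummy = torch.zeros(1, device=device)
    dist.all_reduce(dummy)  # in-place sum across all ranks
    return dummy
```

For a single-process local run (an assumed setup), set `MASTER_ADDR` and `MASTER_PORT`, call `dist.init_process_group(backend="gloo", rank=0, world_size=1)`, then `warmup_collective()`. The returned tensor is still zero, since summing zeros changes nothing; the call exists only for its side effect on the communicator.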

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 3

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| pytorch/fairseq | b5f41f828b0ec9b67fa60aceb0778073d1b368b2 | 2019-10-15 | naysing@fb.com | fairseq/distributed_utils.py | | distributed_init |
| mapillary/inplace_abn | 36b0375e54cfec84a906236a9f2c6fafaed97cf0 | 2018-11-26 | samuel@mapillary.com | train_imagenet.py | | validate |
| mapillary/inplace_abn | 36b0375e54cfec84a906236a9f2c6fafaed97cf0 | 2018-11-26 | samuel@mapillary.com | train_imagenet.py | | train |