b5486aaea994960688152e91fbab7699dc04e8c1,onmt/modules/GlobalAttention.py,GlobalAttention,score,#GlobalAttention#Any#Any#,69

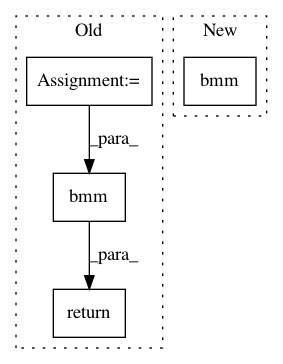

Before Change

    if self.attn_type in ["general", "dot"]:
        if self.attn_type == "general":
            h_t = self.linear_in(h_t)
        return torch.bmm(h_s, h_t.unsqueeze(2)).squeeze(2)
    else:
        # MLP
        # batch x 1 x dim
        wq = self.linear_query(h_t).unsqueeze(1)

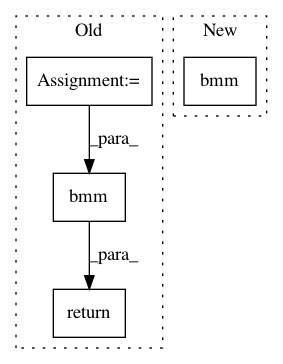

After Change

            h_t = h_t_.view(tgt_batch, tgt_len, tgt_dim)
        h_s_ = h_s.transpose(1, 2)
        # (batch, t_len, d) x (batch, d, s_len) --> (batch, t_len, s_len)
        return torch.bmm(h_t, h_s_)
    else:
        wq = self.linear_query(h_t.view(-1, dim))
        wq = wq.view(tgt_batch, tgt_len, 1, dim)
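
The shape change between the two snippets can be checked in isolation. The following is a minimal sketch, not the project's code: the tensor sizes, the helper names `score_single_step` and `score_all_steps`, and the bias-free `linear` layer are assumptions made for illustration. It shows that scoring all target steps with one `torch.bmm` gives the same numbers as calling the single-step form once per step, and that the flatten, project, reshape sequence used in the "general" and MLP branches keeps the `(batch, t_len, dim)` layout.

```python
import torch

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
batch, s_len, t_len, dim = 2, 5, 3, 4
h_s = torch.randn(batch, s_len, dim)  # source states
h_t = torch.randn(batch, t_len, dim)  # target states for every step


def score_single_step(h_s, h_t_step):
    """Single-step form: h_t_step is (batch, dim), result is (batch, s_len)."""
    return torch.bmm(h_s, h_t_step.unsqueeze(2)).squeeze(2)


def score_all_steps(h_s, h_t):
    """Batched form: (batch, t_len, d) x (batch, d, s_len) -> (batch, t_len, s_len)."""
    return torch.bmm(h_t, h_s.transpose(1, 2))


batched = score_all_steps(h_s, h_t)

# Reference: run the single-step form once per target position and stack.
looped = torch.stack(
    [score_single_step(h_s, h_t[:, t]) for t in range(t_len)], dim=1
)

# Flatten -> linear -> reshape. The layer here is a stand-in, not the
# module's own linear_in or linear_query.
linear = torch.nn.Linear(dim, dim, bias=False)
projected = linear(h_t.view(-1, dim)).view(batch, t_len, dim)

print(batched.shape)  # torch.Size([2, 3, 5])
```

Each slice `batched[:, t]` corresponds to what the single-step form returns for target position `t`, so a caller that previously looped over decoder steps can score them in one call.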

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: b5486aaea994960688152e91fbab7699dc04e8c1

Time: 2017-08-14

Author: taolei@csail.mit.edu

File Name: onmt/modules/GlobalAttention.py

Class Name: GlobalAttention

Method Name: score

Project Name: catalyst-team/catalyst

Commit Name: 8218e76ef1d7978fefceec1d04ce8aed71c2d0d1

Time: 2020-12-30

Author: dimdoroshenko@gmail.com

File Name: catalyst/contrib/nn/modules/arcface.py

Class Name: SubCenterArcFace

Method Name: forward