9c79067ac1768b004ce7a20938d6984a3153f7f5,hypergan/train_hooks/competitive_train_hook.py,CompetitiveTrainHook,gradients,#CompetitiveTrainHook#Any#Any#,108

Before Change

nsteps = self.config.nsteps or 5

d_loss, g_loss = self.gan.loss.sample

d_grads = self.step(0, nsteps, d_grads, d_grads, g_grads, d_loss, g_loss, self.gan.d_vars(), self.gan.g_vars())

g_grads = self.step(0, nsteps, g_grads, g_grads, d_grads, g_loss, d_loss, self.gan.g_vars(), self.gan.d_vars())

return [d_grads, g_grads]

def hvp1(self, grads, x_params, y_params, vs):

After Change

lr = self.config.learn_rate or 1e-4

d_grads = self.step(0, nsteps, d_grads, d_grads, g_grads, d_loss, g_loss, d_params, g_params)

g_grads = self.step(0, nsteps, g_grads, g_grads, d_grads, g_loss, d_loss, g_params, d_params)

if self.config.final_hvp:

    hvp = self.hvp_function()

    d_grads2 = hvp(g_loss, g_params, d_params, [lr * _g for _g in g_grads])

    g_grads2 = hvp(d_loss, d_params, g_params, [lr * _d for _d in d_grads])

    d_grads = normalize(d_grads2, d_grads, self.config.normalize)

    g_grads = normalize(g_grads2, g_grads, self.config.normalize)

return [d_grads, g_grads]

def hvp_function(self):

hvp = self.hvp
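
The `final_hvp` branch above passes a loss, two parameter groups, and a list of scaled gradients to `hvp`. The excerpt does not show what `hvp` computes. A plausible reading, inferred only from the argument shapes, is a mixed second-derivative product: differentiate the loss with respect to the first group, contract with the vector, then differentiate with respect to the second group. The sketch below illustrates that idea with central finite differences in plain Python. It is not the HyperGAN implementation, and `grad_y`, `mixed_hvp`, and `bilinear` are hypothetical names introduced for illustration.

```python
def grad_y(f, x, y, eps=1e-5):
    """Central-difference gradient of the scalar f(x, y) with respect to y."""
    grad = []
    for i in range(len(y)):
        y_plus, y_minus = list(y), list(y)
        y_plus[i] += eps
        y_minus[i] -= eps
        grad.append((f(x, y_plus) - f(x, y_minus)) / (2 * eps))
    return grad


def mixed_hvp(f, x, y, v, eps=1e-4):
    """Approximate d/dy of (grad_x f . v) without forming the full Hessian.

    v has the shape of x; the result has the shape of y.
    (Assumed semantics; the original hvp is not shown in the excerpt.)
    """
    x_plus = [xi + eps * vi for xi, vi in zip(x, v)]
    x_minus = [xi - eps * vi for xi, vi in zip(x, v)]
    g_plus = grad_y(f, x_plus, y)
    g_minus = grad_y(f, x_minus, y)
    return [(a - b) / (2 * eps) for a, b in zip(g_plus, g_minus)]


# Toy bilinear game f(x, y) = x^T A y, for which the mixed product is A^T v.
A = [[1.0, 2.0], [3.0, 4.0]]


def bilinear(x, y):
    return sum(x[i] * A[i][j] * y[j] for i in range(2) for j in range(2))


result = mixed_hvp(bilinear, [0.5, -1.0], [2.0, 0.25], [1.0, -1.0])
# result is close to A^T v = [-2.0, -2.0]
```

In a TensorFlow graph the same quantity would normally come from automatic differentiation rather than finite differences; the numerical version is used here only to keep the example dependency-free.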

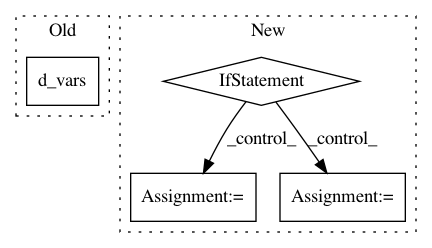

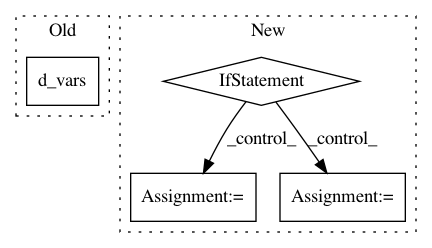

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: HyperGAN/HyperGAN

Commit Name: 9c79067ac1768b004ce7a20938d6984a3153f7f5

Time: 2019-11-19

Author: mikkel@255bits.com

File Name: hypergan/train_hooks/competitive_train_hook.py

Class Name: CompetitiveTrainHook

Method Name: gradients

Project Name: HyperGAN/HyperGAN

Commit Name: a87c284f457e5e9110bfd09f50a53b7466f86867

Time: 2019-01-13

Author: mikkel@255bits.com

File Name: hypergan/train_hooks/gradient_penalty_train_hook.py

Class Name: GradientPenaltyTrainHook

Method Name: __init__

Project Name: HyperGAN/HyperGAN

Commit Name: 40db75c985819b8ad8ccd941729ec0aa90898990

Time: 2018-10-26

Author: martyn@255bits.com

File Name: hypergan/ops/tensorflow/gradient_descent_mirror.py

Class Name: GradientDescentMirrorOptimizer

Method Name: _apply_dense