b1b61d3f90cf795c7b48b6d109db7b7b96fa21ff,src/gluonnlp/model/attention_cell.py,MultiHeadAttentionCell,__init__,#MultiHeadAttentionCell#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,206

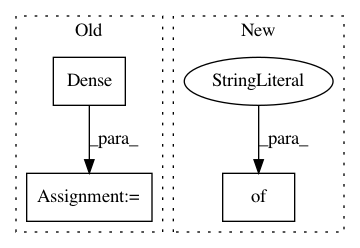

Before Change

```python
        self.proj_key = nn.Dense(units=self._key_units, use_bias=self._use_bias,
                                 flatten=False, weight_initializer=weight_initializer,
                                 bias_initializer=bias_initializer, prefix="key_")
        self.proj_value = nn.Dense(units=self._value_units, use_bias=self._use_bias,
                                   flatten=False, weight_initializer=weight_initializer,
                                   bias_initializer=bias_initializer, prefix="value_")

    def __call__(self, query, key, value=None, mask=None):
        """Compute the attention.
```
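Both projections in the Before Change excerpt are `nn.Dense` layers built with `flatten=False`. In MXNet Gluon, `flatten=False` keeps every leading axis and applies the affine map only to the last axis, so a `(batch, length, in_units)` input becomes `(batch, length, units)`. The pure-Python sketch below imitates that behaviour for a single layer. `dense_last_axis` is a hypothetical helper, not part of Gluon or GluonNLP, and storing the weight as `(units, in_units)` is an assumption of the sketch.

```python
from typing import List

Vector = List[float]


def dense_last_axis(x: List[List[Vector]], weight: List[Vector],
                    bias: Vector) -> List[List[Vector]]:
    """Apply y = W v + b to every position vector v, keeping batch/length axes.

    Hypothetical helper imitating a Dense layer with flatten=False.
    The weight is assumed to be laid out as (units, in_units).
    """
    in_units = len(weight[0])
    out = []
    for seq in x:                      # batch axis is preserved
        out_seq = []
        for vec in seq:                # length axis is preserved
            if len(vec) != in_units:
                raise ValueError("expected %d input features, got %d"
                                 % (in_units, len(vec)))
            # One output feature per weight row: dot product plus bias.
            out_seq.append([sum(w * v for w, v in zip(row, vec)) + b
                            for row, b in zip(weight, bias)])
        out.append(out_seq)
    return out


# Usage: batch=1, length=2, in_units=2 projected to units=3.
x = [[[1.0, 2.0], [3.0, 4.0]]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.0, 0.0, 0.5]
print(dense_last_axis(x, weight, bias))  # [[[1.0, 2.0, 3.5], [3.0, 4.0, 7.5]]]
```

Only the last axis changes size (2 to 3). The batch and length axes pass through untouched, which is why the same projection can be applied to sequences of any length.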

After Change

```python
        self._base_cell = base_cell
        self._num_heads = num_heads
        self._use_bias = use_bias
        units = {"query": query_units, "key": key_units, "value": value_units}
        for name, unit in units.items():
            if unit % self._num_heads != 0:
                raise ValueError(
                    "In MultiHeadAttetion, the {name}_units should be divided exactly"
```

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3
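The After Change `__init__` rejects any `query_units`, `key_units`, or `value_units` that is not an exact multiple of `num_heads`. In multi-head attention each projected vector is divided among the heads, so every head needs an equally sized slice. Below is a minimal pure-Python sketch of that check and of the split. It assumes heads take contiguous slices of the feature axis, which the excerpt does not show. `per_head_units` and `split_heads` are hypothetical names, and the error message is shortened from the original.

```python
from typing import Dict, List


def per_head_units(num_heads: int, query_units: int, key_units: int,
                   value_units: int) -> Dict[str, int]:
    """Mirror the divisibility check and return each head's slice width."""
    units = {"query": query_units, "key": key_units, "value": value_units}
    for name, unit in units.items():
        if unit % num_heads != 0:
            raise ValueError(
                "{name}_units={unit} must be divisible by num_heads={n}".format(
                    name=name, unit=unit, n=num_heads))
    # Every head gets an equal share of each projection's features.
    return {name: unit // num_heads for name, unit in units.items()}


def split_heads(vec: List[float], num_heads: int) -> List[List[float]]:
    """Cut one projected feature vector into num_heads contiguous, equal slices."""
    if len(vec) % num_heads != 0:
        raise ValueError("vector length must be divisible by num_heads")
    size = len(vec) // num_heads
    return [vec[h * size:(h + 1) * size] for h in range(num_heads)]


print(per_head_units(8, 512, 512, 256))  # {'query': 64, 'key': 64, 'value': 32}
print(split_heads([0.0, 1.0, 2.0, 3.0, 4.0, 5.0], 3))  # [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
```

With `num_heads=3`, a `key_units` of 10 raises `ValueError`, because 10 features cannot be shared equally among three heads.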

Instances:

Project Name: dmlc/gluon-nlp

Commit Name: b1b61d3f90cf795c7b48b6d109db7b7b96fa21ff

Time: 2019-08-04

Author: lausen@amazon.com

File Name: src/gluonnlp/model/attention_cell.py

Class Name: MultiHeadAttentionCell

Method Name: __init__

Project Name: philipperemy/keras-tcn

Commit Name: 0cfe82c6beb9a28a5ff7da81b86fa0e93c388f14

Time: 2019-11-20

Author: premy@cogent.co.jp

File Name: tasks/save_reload_model.py

Class Name:

Method Name:

Project Name: keras-team/keras

Commit Name: bf4dab3501c62836f94ea17d2f0e198348f5293d

Time: 2016-03-31

Author: francois.chollet@gmail.com

File Name: tests/keras/layers/test_core.py

Class Name:

Method Name: test_dense