0d17019d224c7db47c2370088e35986e2e8c69af,layers/common_layers.py,GravesAttention,forward,#GravesAttention#Any#Any#Any#Any#,407

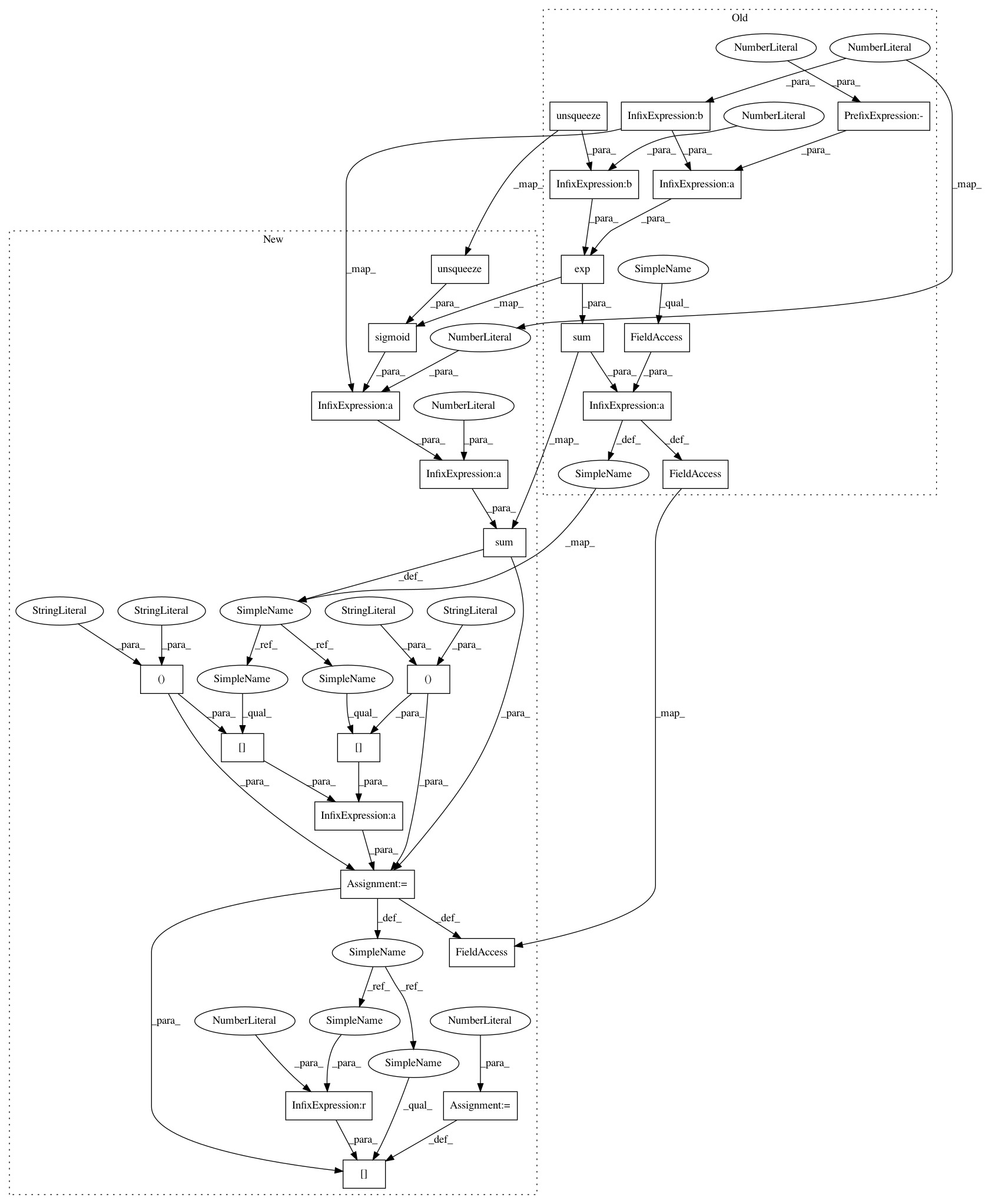

Before Change

```python
j = self.J[:inputs.size(1)]

# attention weights
phi_t = g_t.unsqueeze(-1) * torch.exp(-0.5 * (mu_t.unsqueeze(-1) - j)**2 / (sig_t.unsqueeze(-1)**2))
alpha_t = self.COEF * torch.sum(phi_t, 1)

# apply masking
if mask is not None:
    alpha_t.data.masked_fill_(~mask, self._mask_value)

context = torch.bmm(alpha_t.unsqueeze(1), inputs).squeeze(1)
self.attention_weights = alpha_t
self.mu_prev = mu_t
return context
```
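The "Before Change" step evaluates a K-component Gaussian mixture at each encoder position and sums over components. A minimal pure-Python sketch for a single batch element follows. It assumes `self.J` holds evenly spaced positions (taken here as 0, 1, ..., N-1) and treats `coef` as a stand-in for `self.COEF`, whose value the excerpt does not show; both function names are hypothetical. `context_vector` is the per-example analogue of the `torch.bmm` call, a weighted sum of encoder rows.

```python
import math
from typing import List, Sequence


def gaussian_mixture_weights(g: Sequence[float], mu: Sequence[float],
                             sig: Sequence[float], num_inputs: int,
                             coef: float = 1.0) -> List[float]:
    """alpha[j] = coef * sum_k g[k] * exp(-0.5 * (mu[k] - j)**2 / sig[k]**2).

    Hypothetical helper for one batch element; positions are assumed to be 0..N-1
    and `coef` stands in for self.COEF.
    """
    return [
        coef * sum(g_k * math.exp(-0.5 * (mu_k - j) ** 2 / s_k ** 2)
                   for g_k, mu_k, s_k in zip(g, mu, sig))
        for j in range(num_inputs)
    ]


def context_vector(alpha: Sequence[float],
                   inputs: Sequence[Sequence[float]]) -> List[float]:
    """Attention-weighted sum of encoder rows (per-example analogue of torch.bmm)."""
    dim = len(inputs[0])
    return [sum(a * row[d] for a, row in zip(alpha, inputs)) for d in range(dim)]
```

Because each term is an unnormalized density value, these weights need not sum to 1: for one unit-width component far from the sequence edges (`g=[1.0], mu=[50.0], sig=[1.0], num_inputs=101`), the sum is close to sqrt(2*pi), about 2.507.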

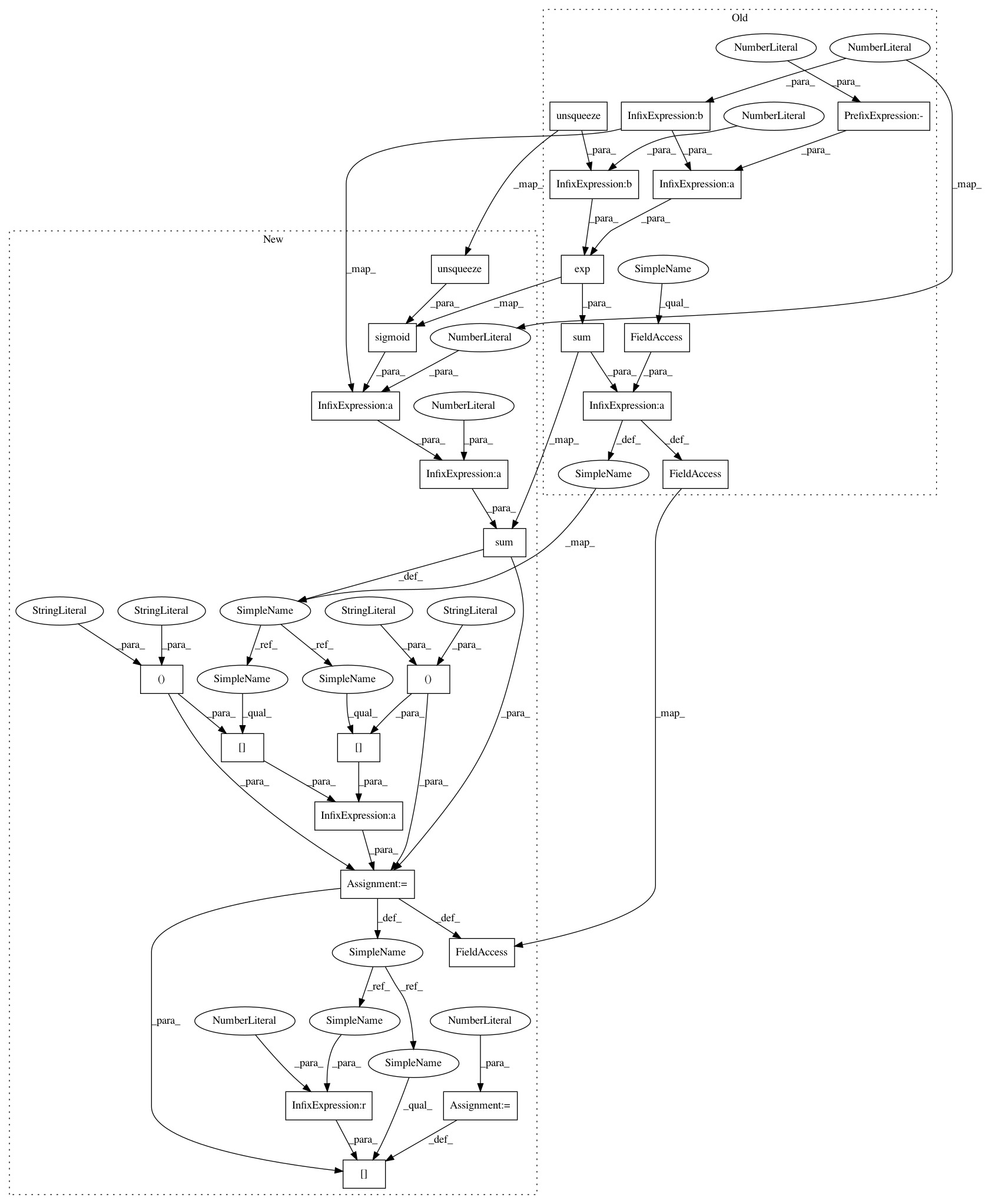

After Change

```python
j = self.J[:inputs.size(1)+1]

# attention weights
phi_t = g_t.unsqueeze(-1) * (1 / (1 + torch.sigmoid((mu_t.unsqueeze(-1) - j) / sig_t.unsqueeze(-1))))

# discretize attention weights
alpha_t = torch.sum(phi_t, 1)
alpha_t = alpha_t[:, 1:] - alpha_t[:, :-1]
alpha_t[alpha_t == 0] = 1e-8

# apply masking
if mask is not None:
    alpha_t.data.masked_fill_(~mask, self._mask_value)

context = torch.bmm(alpha_t.unsqueeze(1), inputs).squeeze(1)
self.attention_weights = alpha_t
self.mu_prev = mu_t
return context
```
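The "After Change" version treats each encoder position as a bin: it evaluates a sigmoid-based cumulative function at N + 1 boundaries (hence `inputs.size(1)+1` when slicing `self.J`), takes adjacent differences to get per-bin mass, and replaces exact zeros with `1e-8`. The sketch below illustrates that discretization for one batch element, assuming integer boundaries 0, 1, ..., N; the function names are hypothetical. It uses the standard logistic CDF, sigmoid((b - mu) / s). The committed line instead applies `torch.sigmoid` inside `1 / (1 + ...)` where the standard form would use `exp`, so its per-boundary values lie in (0.5, 1); the sketch is not a reproduction of that exact expression.

```python
import math
from typing import List, Sequence


def logistic_cdf(x: float) -> float:
    """Numerically stable standard logistic CDF (the sigmoid function)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def discretized_mixture_weights(g: Sequence[float], mu: Sequence[float],
                                sig: Sequence[float], num_inputs: int,
                                floor: float = 1e-8) -> List[float]:
    """Per-bin mass of a K-component logistic mixture over N encoder positions.

    Hypothetical helper: bin boundaries are assumed to be 0, 1, ..., N.
    """
    # N bins need N + 1 boundaries (cf. the "+1" when slicing self.J).
    cdf = [
        sum(g_k * logistic_cdf((b - mu_k) / s_k) for g_k, mu_k, s_k in zip(g, mu, sig))
        for b in range(num_inputs + 1)
    ]
    # Adjacent differences give the mass falling in bin [j, j + 1).
    alpha = [hi - lo for lo, hi in zip(cdf[:-1], cdf[1:])]
    # Replace exact zeros with a small floor, mirroring `alpha_t[alpha_t == 0] = 1e-8`.
    return [a if a != 0 else floor for a in alpha]
```

Because the differences telescope, the weights (before the zero floor is applied) sum to the cumulative value at the last boundary minus that at the first, so with non-negative mixture weights `g` that sum to 1 the total cannot exceed 1.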

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 25

Instances

Project Name: mozilla/TTS

Commit Name: 0d17019d224c7db47c2370088e35986e2e8c69af

Time: 2020-02-19

Author: root@sp-mlc3-5423-0.mlc

File Name: layers/common_layers.py

Class Name: GravesAttention

Method Name: forward

Project Name: mozilla/TTS

Commit Name: 7a616aa9ef85fa4833eff6b78fd8e155a152a002

Time: 2020-01-27

Author: root@sp-mlc3-5423-0.mlc

File Name: layers/common_layers.py

Class Name: GravesAttention

Method Name: forward

Project Name: mozilla/TTS

Commit Name: 72817438db4d805754d19dea818e6b4eb0ce425d

Time: 2020-02-19

Author: root@sp-mlc3-5423-0.mlc

File Name: layers/common_layers.py

Class Name: GravesAttention

Method Name: forward