8bafae2ee7044529543768eec63d8460d894f5c6,fairseq/multiprocessing_trainer.py,MultiprocessingTrainer,_async_train_step,#MultiprocessingTrainer#Any#Any#Any#,154

Before Change

loss = 0
if self._sample is not None:
    self._sample = self.criterion.prepare(self.model, self._sample)
    net_output = self.model(**self._sample["net_input"])
    loss_ = self.criterion(net_output, self._sample)
    if grad_denom is not None:
        loss_ /= grad_denom
    loss_.backward()
    loss = loss_.data[0]

# flatten grads into a contiguous block of memory
if self.flat_grads is None:

After Change

loss_dict = {}
if self._sample is not None:
    loss_dict = self.criterion(self.model, self._sample, grad_denom)
    loss_dict["loss"].backward()
    loss = loss_dict["loss"].data[0]

# flatten grads into a contiguous block of memory

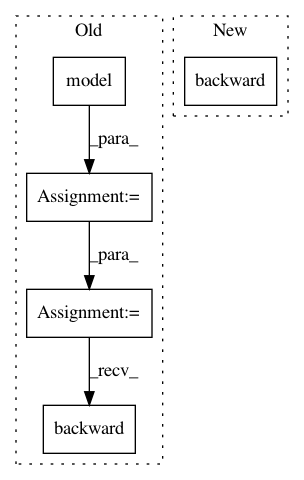

if self.flat_grads is None:

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances:

Project Name: pytorch/fairseq

Commit Name: 8bafae2ee7044529543768eec63d8460d894f5c6

Time: 2017-10-19

Author: myleott@fb.com

File Name: fairseq/multiprocessing_trainer.py

Class Name: MultiprocessingTrainer

Method Name: _async_train_step

Project Name: rusty1s/pytorch_geometric

Commit Name: 430beec651fe14e4f0f032cbc27e18a9ca415d90

Time: 2018-03-06

Author: matthias.fey@tu-dortmund.de

File Name: examples/cora.py

Class Name:

Method Name: train
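The change moves the forward pass and the optional `grad_denom` normalization out of the training step and into the criterion, which now receives the model itself and returns a dict whose `"loss"` entry the caller backpropagates. The sketch below contrasts the two call styles in plain Python so it runs without PyTorch. It is an illustration under stated assumptions, not fairseq's actual criterion API: `ToyModel`, `OldCriterion`, `NewCriterion`, `old_step`, `new_step`, the squared-error loss and the `"sample_size"` key are all hypothetical, the `criterion.prepare` call from the Before snippet is omitted, and losses are plain floats, so there is no `.backward()` step.

```python
from typing import Dict, List, Optional


class ToyModel:
    """Hypothetical stand-in for the model: predicts w * x for each input."""

    def __init__(self, w: float) -> None:
        self.w = w

    def __call__(self, xs: List[float]) -> List[float]:
        return [self.w * x for x in xs]


class OldCriterion:
    """Before-style (hypothetical): scores a net_output the caller computed."""

    def __call__(self, net_output: List[float], sample: Dict) -> float:
        return sum((p - t) ** 2 for p, t in zip(net_output, sample["target"]))


class NewCriterion:
    """After-style (hypothetical): runs the forward pass, applies grad_denom,
    and returns a dict so extra statistics can travel alongside "loss"."""

    def __call__(self, model: ToyModel, sample: Dict,
                 grad_denom: Optional[float] = None) -> Dict[str, float]:
        net_output = model(**sample["net_input"])
        loss = sum((p - t) ** 2 for p, t in zip(net_output, sample["target"]))
        if grad_denom is not None:
            loss /= grad_denom
        return {"loss": loss, "sample_size": len(sample["target"])}


def old_step(model: ToyModel, criterion: OldCriterion,
             sample: Optional[Dict], grad_denom: Optional[float] = None) -> float:
    # The training step does the forward pass and normalization itself.
    loss = 0.0
    if sample is not None:
        net_output = model(**sample["net_input"])
        loss_ = criterion(net_output, sample)
        if grad_denom is not None:
            loss_ /= grad_denom
        loss = loss_
    return loss


def new_step(model: ToyModel, criterion: NewCriterion,
             sample: Optional[Dict],
             grad_denom: Optional[float] = None) -> Dict[str, float]:
    # The training step only delegates; the criterion owns the rest.
    loss_dict: Dict[str, float] = {}
    if sample is not None:
        loss_dict = criterion(model, sample, grad_denom)
    return loss_dict
```

With `ToyModel(2.0)` and a sample whose inputs are `[1.0, 2.0]` and targets `[1.0, 3.0]`, both styles yield a normalized loss of `1.0` for `grad_denom=2.0`; the new style additionally returns `"sample_size"` in the same dict, which is one motivation for returning a dict rather than a bare scalar.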