eee9f1bbdaa88fa4fde7c3c8096a656804baf9e1,sumy/nlp/tokenizers.py,Tokenizer,to_words,#Tokenizer#Any#,34

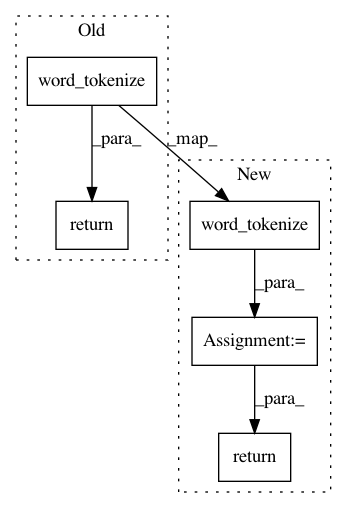

Before Change

return tuple(map(unicode.strip, sentences))

def to_words(self, sentence):

return nltk.word_tokenize(to_unicode(sentence))

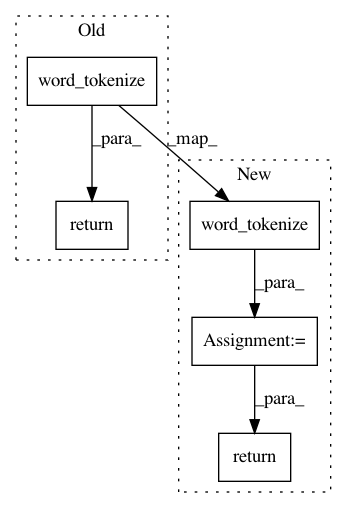

After Change

return tuple(map(unicode.strip, sentences))

def to_words(self, sentence):

words = nltk.word_tokenize(to_unicode(sentence))

return tuple(filter(self._is_word, words))

def _is_word(self, word):

return bool(Tokenizer._WORD_PATTERN.search(word))

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: miso-belica/sumy

Commit Name: eee9f1bbdaa88fa4fde7c3c8096a656804baf9e1

Time: 2013-05-21

Author: miso.belica@gmail.com

File Name: sumy/nlp/tokenizers.py

Class Name: Tokenizer

Method Name: to_words

Project Name: inspirehep/magpie

Commit Name: 57356fdfbb6f608da7662191e4634121cc31d117

Time: 2016-01-12

Author: jan.stypka@cern.ch

File Name: magpie/base/document.py

Class Name: Document

Method Name: get_all_words

Project Name: allenai/allennlp

Commit Name: 2c4a6e537126f4123de7c97f30587310d3712c06

Time: 2017-09-13

Author: mattg@allenai.org

File Name: allennlp/data/tokenizers/word_splitter.py

Class Name: NltkWordSplitter

Method Name: split_words