dedc529d98ff3a6c82ffdf3baddb9a9edf569dc4,ch06/01_dqn_pong.py,,,#,207

Before Change

writer = SummaryWriter(comment="-pong")

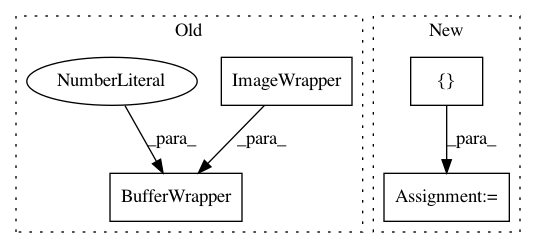

env = BufferWrapper(ImageWrapper(gym.make("PongNoFrameskip-v4")), n_steps=4)

test_env = BufferWrapper(ImageWrapper( gym.make("PongNoFrameskip-v4")), n_steps=4)

// test_env = gym.wrappers.Monitor(test_env, "records", force=True)

net = DQN(env.observation_space.shape, env.action_space.n)

tgt_net = TargetNet(net, cuda=args.cuda)After Change

net.cuda()

print("Populate buffer with %d steps" % REPLAY_START_SIZE)

episode_rewards = []

for _ in range(REPLAY_START_SIZE):

rw = agent.play_step(None, epsilon=1.0)

if rw is not None:

episode_rewards.append(rw)In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: dedc529d98ff3a6c82ffdf3baddb9a9edf569dc4

Time: 2017-10-19

Author: max.lapan@gmail.com

File Name: ch06/01_dqn_pong.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: a0113631d7e9d6ec65531457ce03e06c902a4d0b

Time: 2017-10-17

Author: max.lapan@gmail.com

File Name: ch06/01_dqn_pong.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 3bb0fd78af4f9dbddf7c01dde71844a283099650

Time: 2017-10-16

Author: max.lapan@gmail.com

File Name: ch06/01_dqn_pong.py

Class Name:

Method Name: