f2754e0656d8cebe1f785f6af100e4ade241a7f8,thinc/model.py,Model,finish_update,#Model#Any#,371

Before Change

    # Kind of ugly to use the _mem.weights -- would make more sense
    # to call node.finish_update. Maybe we could pass in a set
    # of visited?
    optimizer(node._mem.weights, node._mem.gradient, key=node.id)
    seen.add(node.id)
    for shim in node.shims:
        shim.finish_update(optimizer)

After Change

    for node in self.walk():
        for name in node.param_names:
            if node.has_grad(name):
                param = node.get_param(name)
                grad = node.get_grad(name)
                param, grad = optimizer(param, grad, key=(node.id, name))
                node.set_param(name, param)
                node.set_grad(name, grad)
        for shim in node.shims:
            shim.finish_update(optimizer)
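The per-parameter update in the After Change snippet can be tried in isolation with a small sketch. `ToyNode` and `ToySGD` below are hypothetical stand-ins written for this illustration, not thinc's actual `Model` or optimizer classes; they only mimic the method names that appear in the snippet. The toy optimizer's choice to return a zeroed gradient is an assumption, and shims are left out.

```python
from typing import Dict, List, Tuple


class ToyNode:
    """Hypothetical node exposing the accessors used in the After Change code."""

    def __init__(self, node_id: int, params: Dict[str, List[float]]):
        self.id = node_id
        self._params = {name: list(values) for name, values in params.items()}
        self._grads: Dict[str, List[float]] = {}

    @property
    def param_names(self) -> Tuple[str, ...]:
        return tuple(self._params)

    def has_grad(self, name: str) -> bool:
        return name in self._grads

    def get_param(self, name: str) -> List[float]:
        return self._params[name]

    def get_grad(self, name: str) -> List[float]:
        return self._grads[name]

    def set_param(self, name: str, value: List[float]) -> None:
        self._params[name] = value

    def set_grad(self, name: str, value: List[float]) -> None:
        self._grads[name] = value


class ToySGD:
    """Hypothetical plain-SGD optimizer that records the keys it is called with."""

    def __init__(self, learn_rate: float = 0.1):
        self.learn_rate = learn_rate
        self.seen_keys: list = []

    def __call__(self, param, grad, *, key):
        self.seen_keys.append(key)
        new_param = [p - self.learn_rate * g for p, g in zip(param, grad)]
        # Assumption of this sketch: hand back a zeroed gradient after the step.
        return new_param, [0.0 for _ in grad]


def finish_update(nodes, optimizer) -> None:
    # Same loop shape as the After Change code: one optimizer call per
    # (node, parameter name) pair, skipping parameters without a gradient.
    for node in nodes:
        for name in node.param_names:
            if node.has_grad(name):
                param, grad = optimizer(
                    node.get_param(name), node.get_grad(name), key=(node.id, name)
                )
                node.set_param(name, param)
                node.set_grad(name, grad)


node = ToyNode(0, {"W": [1.0, 2.0], "b": [0.5]})
node.set_grad("W", [1.0, 1.0])  # "b" gets no gradient, so it is skipped
opt = ToySGD(learn_rate=0.5)
finish_update([node], opt)
```

Keying each call by `(node.id, name)` rather than by `node.id` alone gives the optimizer a distinct key per parameter, which matters for optimizers that keep per-key state such as momentum buffers.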

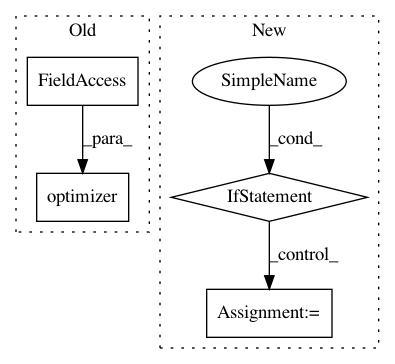

@contextlib.contextmanager

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: explosion/thinc

Commit Name: f2754e0656d8cebe1f785f6af100e4ade241a7f8

Time: 2020-01-14

Author: honnibal+gh@gmail.com

File Name: thinc/model.py

Class Name: Model

Method Name: finish_update

Project Name: tensorflow/lattice

Commit Name: 2fd542242f2dcf1820b616461d2801102c36957e

Time: 2018-07-30

Author: no-reply@google.com

File Name: tensorflow_lattice/python/estimators/calibrated.py

Class Name:

Method Name: _get_optimizer

Project Name: ncullen93/torchsample

Commit Name: 7b460340070c810de6d0f6288f6c8900c93e6cfa

Time: 2017-04-22

Author: ncullen@Nicks-MacBook-Pro.local

File Name: torchsample/modules/super_module.py

Class Name: SuperModule

Method Name: set_optimizer