aab3902d4a7d55f5a86058854adc36b8a12c873f,catalyst/dl/callbacks/base.py,OptimizerCallback,on_batch_end,#OptimizerCallback#Any#,202

Before Change

scaled_loss.backward()
master_params = list(optimizer.param_groups[0]["params"])
model_params = list(
    filter(lambda p: p.requires_grad, model.parameters())
)
copy_grads(source=model_params, target=master_params)
for param in master_params:
    param.grad.data.mul_(1. / self.fp16_grad_scale)
self.grad_step(

After Change

def on_batch_end(self, state):

loss = state.get_key(key="loss", inner_key=self.loss_key)

if isinstance(loss, dict):

loss = list(loss.values())

if isinstance(loss, list):

loss = torch.mean(torch.stack(loss))

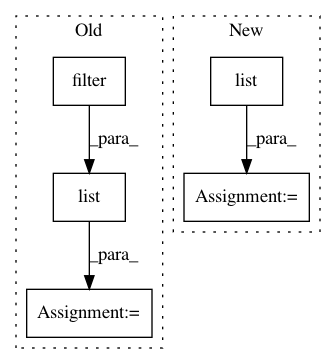

if self.prefix is not None:In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: Scitator/catalyst

Commit Name: aab3902d4a7d55f5a86058854adc36b8a12c873f

Time: 2019-05-20

Author: ekhvedchenya@gmail.com

File Name: catalyst/dl/callbacks/base.py

Class Name: OptimizerCallback

Method Name: on_batch_end

Project Name: deepchem/deepchem

Commit Name: b558d6e423f8ba97141f0c865c54e11b6a40b65e

Time: 2021-01-29

Author: m.grabski@sygnaturediscovery.com

File Name: deepchem/feat/tests/test_molgan_featurizer.py

Class Name: TestMolganFeaturizer

Method Name: test_featurizer_smiles