e1861863b2248e4d7d73c12a152e8c035301095d,pytext/torchscript/module.py,ScriptPyTextTwoTowerEmbeddingModule,make_batch,#ScriptPyTextTwoTowerEmbeddingModule#Any#Any#,752

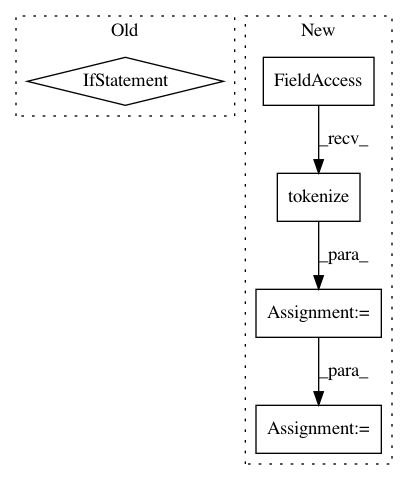

Before Change

# The next lines perform the following operation, which is not supported by TorchScript:
# sorted_mega_batch = sorted(mega_batch, key=lambda element: len(element[argno]))
mega_batch_class_list = [
    PytextTwoTowerEmbeddingModuleBatchSort(x, argno) for x in mega_batch
]
sorted_mega_batch_class_list = sorted(mega_batch_class_list)
sorted_mega_batch = [x.be() for x in sorted_mega_batch_class_list]

After Change

# The next lines sort all cross-request batch elements by the token length of right_.
# Note that a cross-request batch element can in turn be a client batch.
mega_batch_key_list = [
    (max_tokens(self.right_tensorizer.tokenize(x[0], x[2])), n)
    for (n, x) in enumerate(mega_batch)
]
sorted_mega_batch_key_list = sorted(mega_batch_key_list)
sorted_mega_batch = [mega_batch[n] for (key, n) in sorted_mega_batch_key_list]

# TBD: allow model server to specify batch size in goals dictionary
max_bs: int = 10
len_mb = len(mega_batch)

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: facebookresearch/pytext

Commit Name: e1861863b2248e4d7d73c12a152e8c035301095d

Time: 2020-10-30

Author: mikekg@fb.com

File Name: pytext/torchscript/module.py

Class Name: ScriptPyTextTwoTowerEmbeddingModule

Method Name: make_batch

Project Name: facebookresearch/pytext

Commit Name: e1861863b2248e4d7d73c12a152e8c035301095d

Time: 2020-10-30

Author: mikekg@fb.com

File Name: pytext/torchscript/module.py

Class Name: ScriptPyTextEmbeddingModule

Method Name: make_batch

Project Name: sobhe/hazm

Commit Name: 39897f7d90cecfac7cb91d23a56cd693429e31f3

Time: 2017-09-29

Author: az.nourian@gmail.com

File Name: hazm/Normalizer.py

Class Name: Normalizer

Method Name: normalize
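
The After Change snippet replaces a `sorted(..., key=lambda ...)` call with a sort over `(key, index)` tuples. A minimal sketch of that pattern in plain Python follows. The function name `sort_by_length_stable` and the use of `len` as the sort key are assumptions made for illustration; the commit itself derives the key from `max_tokens(self.right_tensorizer.tokenize(...))`, and this sketch has not been checked under `torch.jit.script`.

```python
from typing import List, Tuple


def sort_by_length_stable(batch: List[List[str]]) -> List[List[str]]:
    """Sort batch elements by length without passing key= to sorted().

    Hypothetical helper: len(x) stands in for the token-length key
    that the commit computes with its tensorizer.
    """
    # Decorate: pair each element's sort key with its original position.
    keyed: List[Tuple[int, int]] = [(len(x), n) for n, x in enumerate(batch)]
    # Sort: tuples compare by key first, then by index, so ties keep their
    # input order and the batch elements themselves are never compared.
    keyed_sorted = sorted(keyed)
    # Undecorate: use the stored index to fetch elements in sorted order.
    return [batch[n] for (_key, n) in keyed_sorted]


batch = [["a", "b", "c"], ["d"], ["e", "f"], ["g"]]
result = sort_by_length_stable(batch)
print(result)
```

Because the index is the second tuple component, elements with equal keys come out in their original order, which matches what `sorted(batch, key=len)` would give in ordinary Python.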