bb1b1c6e27b273a66135d02a04232835d5bfc3ca,allennlp/data/tokenizers/pretrained_transformer_tokenizer.py,PretrainedTransformerTokenizer,tokenize,#PretrainedTransformerTokenizer#Any#,46

Before Change

def tokenize(self, text: str) -> List[Token]:
    # TODO(mattg): track character offsets. Might be too challenging to do it here, given that
    # ``transformers`` is dealing with the whitespace...
    token_strings = self._start_tokens + self._tokenizer.tokenize(text) + self._end_tokens
    return [Token(t) for t in token_strings]
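The minimal sketch below shows what the pre-change `tokenize` produces. It is not AllenNLP code. `Token` is a hypothetical text-only stand-in for AllenNLP's `Token`, and `_StubWordpieceTokenizer` is a hypothetical whitespace splitter standing in for the `transformers` tokenizer. `[CLS]`/`[SEP]` are assumed BERT-style start and end markers. Only the concatenation line mirrors the snippet above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Token:
    # Hypothetical stand-in for AllenNLP's Token: stores the text only, no character offsets.
    text: str


class _StubWordpieceTokenizer:
    # Hypothetical stand-in for a `transformers` tokenizer: lowercases and splits on whitespace.
    def tokenize(self, text: str) -> List[str]:
        return text.lower().split()


class SketchTokenizer:
    def __init__(self, start_tokens: List[str], end_tokens: List[str]) -> None:
        self._tokenizer = _StubWordpieceTokenizer()
        self._start_tokens = start_tokens
        self._end_tokens = end_tokens

    def tokenize(self, text: str) -> List[Token]:
        # Same shape as the pre-change method: start markers + subword strings + end markers.
        # Like the original, no character offsets are tracked.
        token_strings = self._start_tokens + self._tokenizer.tokenize(text) + self._end_tokens
        return [Token(t) for t in token_strings]


tokenizer = SketchTokenizer(start_tokens=["[CLS]"], end_tokens=["[SEP]"])
print([t.text for t in tokenizer.tokenize("Hello world")])
# ['[CLS]', 'hello', 'world', '[SEP]']
```

Because the markers are plain list concatenation, every call wraps exactly one sequence. There is no slot for a separator between two sentences.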

def _guess_start_and_end_token_defaults(model_name: str) -> Tuple[List[str], List[str]]:
    if "bert" in model_name:

After Change

This method only handles a single sentence (or sequence) of text.

Refer to the ``tokenize_sentence_pair`` method if you have a sentence pair.

return self._tokenize(text)
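After the change, the public `tokenize` is a thin wrapper around a private `_tokenize`, and sentence pairs are routed to `tokenize_sentence_pair`. The snippet does not show either helper's body, so the sketch below is an assumption, not the commit's implementation. `_tokenize` here simply reproduces the old single-sequence wrapping. `tokenize_sentence_pair` uses the BERT layout `[CLS] A [SEP] B [SEP]`. The class and its stub tokenizer are hypothetical, and plain strings are returned instead of `Token` objects to keep the example short.

```python
from typing import List


class _StubSubwordTokenizer:
    # Hypothetical stand-in for a `transformers` tokenizer: lowercases and splits on whitespace.
    def tokenize(self, text: str) -> List[str]:
        return text.lower().split()


class SketchPairAwareTokenizer:
    # Hypothetical class; assumes BERT-style markers for both single and paired input.
    def __init__(self) -> None:
        self._tokenizer = _StubSubwordTokenizer()
        self._start_tokens = ["[CLS]"]
        self._separator_tokens = ["[SEP]"]
        self._end_tokens = ["[SEP]"]

    def tokenize(self, text: str) -> List[str]:
        """Handles a single sentence (or sequence); use tokenize_sentence_pair for pairs."""
        return self._tokenize(text)

    def _tokenize(self, text: str) -> List[str]:
        # Assumed body: the same start + subwords + end wrapping as before the change.
        return self._start_tokens + self._tokenizer.tokenize(text) + self._end_tokens

    def tokenize_sentence_pair(self, sentence_1: str, sentence_2: str) -> List[str]:
        # Assumed BERT pair layout: [CLS] A [SEP] B [SEP].
        return (
            self._start_tokens
            + self._tokenizer.tokenize(sentence_1)
            + self._separator_tokens
            + self._tokenizer.tokenize(sentence_2)
            + self._end_tokens
        )


tok = SketchPairAwareTokenizer()
print(tok.tokenize("Hi there"))  # ['[CLS]', 'hi', 'there', '[SEP]']
print(tok.tokenize_sentence_pair("Hi", "Bye"))  # ['[CLS]', 'hi', '[SEP]', 'bye', '[SEP]']
```

Keeping `tokenize` as a one-line delegate leaves existing single-sequence call sites unchanged. Pair handling gets its own entry point rather than an extra flag on `tokenize`.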

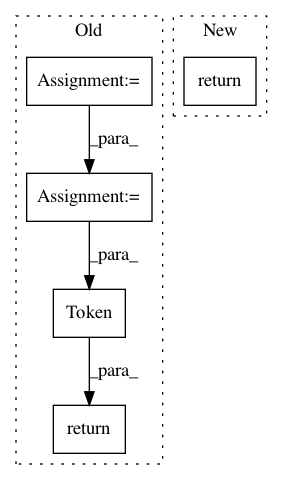

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: allenai/allennlp

Commit Name: bb1b1c6e27b273a66135d02a04232835d5bfc3ca

Time: 2019-11-19

Author: max.del.edu@gmail.com

File Name: allennlp/data/tokenizers/pretrained_transformer_tokenizer.py

Class Name: PretrainedTransformerTokenizer

Method Name: tokenize

Project Name: snipsco/snips-nlu

Commit Name: d264e82050700d9aaed31c11dbd65f9dbd03e4d9

Time: 2017-04-25

Author: adrien.ball@snips.net

File Name: snips_nlu/tokenization.py

Class Name:

Method Name: tokenize

Project Name: snipsco/snips-nlu

Commit Name: 756b25abd8925069f7bc06cf5f91b9662737fde0

Time: 2018-10-02

Author: adrien.ball@snips.ai

File Name: snips_nlu/slot_filler/feature_factory.py

Class Name: CustomEntityMatchFactory

Method Name: _transform