4ec6447563c382045014a28d1fb44707f21022b4,horovod/tensorflow/gradient_aggregation_eager.py,LocalGradientAggregationHelperEager,compute_gradients,#LocalGradientAggregationHelperEager#Any#,45

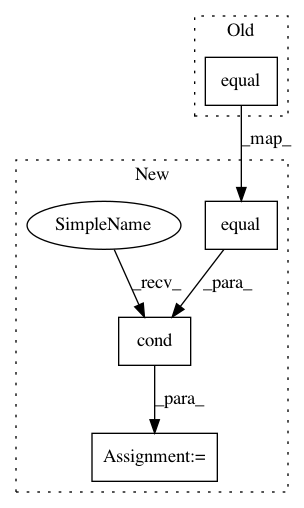

Before Change

# Increment counter.
self.counter.assign_add(1)

if tf.equal(self.counter, self.backward_passes_per_step):
    # Performs allreduce. If `average_aggregated_gradients` is
    # set to True, divides the result by `backward_passes_per_step`.
    resulting_grads = self._allreduce_helper(resulting_grads)

After Change

def _do_nothing(aggregated_gradients):
    return aggregated_gradients

resulting_grads = tf.cond(
    pred=tf.equal(self.counter, self.backward_passes_per_step),
    true_fn=lambda: _all_reduce_and_clear_aggregated_variables(resulting_grads),
    false_fn=lambda: _do_nothing(resulting_grads),
)

return resulting_grads

def _allreduce_helper(self, grads):

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances:

Project Name: horovod/horovod
Commit Name: 4ec6447563c382045014a28d1fb44707f21022b4
Time: 2020-10-21
Author: aaron@determined.ai
File Name: horovod/tensorflow/gradient_aggregation_eager.py
Class Name: LocalGradientAggregationHelperEager
Method Name: compute_gradients

Project Name: galeone/dynamic-training-bench
Commit Name: 030f2785177566814a69e392337b13f48833a187
Time: 2016-10-27
Author: nessuno@nerdz.eu
File Name: train_decaying_keep_prob.py
Class Name:
Method Name: keep_prob_decay

Project Name: OpenNMT/OpenNMT-tf
Commit Name: 9149264ad72e24003b76abd1995fe9719e36f4cc
Time: 2019-02-15
Author: guillaume.klein@systrangroup.com
File Name: opennmt/layers/transformer.py
Class Name: MultiHeadAttention
Method Name: call
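In the horovod/horovod instance, the after-change code replaces a Python `if` on the tensor `tf.equal(self.counter, self.backward_passes_per_step)` with `tf.cond`, passing both branches as zero-argument lambdas. The sketch below models that control flow in plain Python so it can run without TensorFlow. It is an illustration under stated assumptions, not Horovod's implementation: `cond` is a hypothetical stand-in for `tf.cond`, `LocalAggregatorSketch` and `_allreduce_and_clear` are hypothetical names, gradients are lists of floats, the allreduce is assumed to run on a single worker (so it leaves values unchanged), and non-final passes are assumed to return the locally aggregated gradients.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def cond(pred: bool, true_fn: Callable[[], T], false_fn: Callable[[], T]) -> T:
    """Hypothetical pure-Python stand-in for tf.cond.

    Both branches are zero-argument callables; only the one selected by
    `pred` is invoked.
    """
    return true_fn() if pred else false_fn()


class LocalAggregatorSketch:
    """Hypothetical, simplified model of counter-based local gradient aggregation.

    Assumptions: gradients are lists of floats, there is a single worker (so
    the allreduce itself leaves values unchanged), and local state is reset
    after each allreduce.
    """

    def __init__(self, backward_passes_per_step: int,
                 average_aggregated_gradients: bool = True) -> None:
        self.backward_passes_per_step = backward_passes_per_step
        self.average_aggregated_gradients = average_aggregated_gradients
        self.counter = 0
        self.aggregated: List[float] = []

    def _allreduce_and_clear(self, grads: List[float]) -> List[float]:
        # Single-worker "allreduce": copy the gradients unchanged.
        reduced = list(grads)
        if self.average_aggregated_gradients:
            # Divide by backward_passes_per_step, as the original comment describes.
            reduced = [g / self.backward_passes_per_step for g in reduced]
        # Clear local state so the next aggregation window starts from zero.
        self.counter = 0
        self.aggregated = []
        return reduced

    def compute_gradients(self, grads: List[float]) -> List[float]:
        # Accumulate this backward pass into the local buffer.
        if not self.aggregated:
            self.aggregated = [0.0] * len(grads)
        self.aggregated = [a + g for a, g in zip(self.aggregated, grads)]

        # Increment counter.
        self.counter += 1

        resulting_grads = self.aggregated
        # Analogue of the after-change tf.cond: reduce and clear only on the
        # last backward pass, otherwise return the local aggregate as-is.
        return cond(
            pred=self.counter == self.backward_passes_per_step,
            true_fn=lambda: self._allreduce_and_clear(resulting_grads),
            false_fn=lambda: resulting_grads,
        )
```

With `backward_passes_per_step=2`, feeding `[1.0, 2.0]` and then `[3.0, 4.0]` returns the running sum after the first pass and the averaged sum `[2.0, 3.0]` after the second, at which point the counter and buffer are cleared. Wrapping each branch in a callable defers its evaluation, so the reduce-and-clear side effects happen only when the selected branch is the true one.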