2d21747abdbf1968a9d565a4090d5d6297ade71a,encoding/functions/syncbn.py,_sum_square,forward,#Any#Any#,25

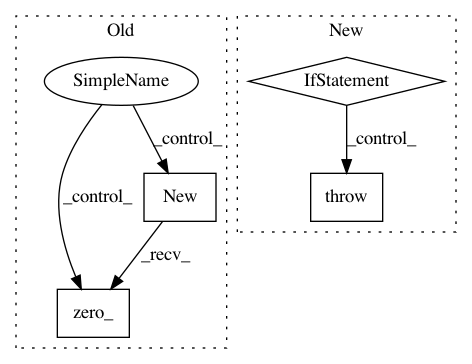

Before Change

        ctx.save_for_backward(input)
        C = input.size(1)
        with torch.cuda.device_of(input):
            xsum = input.new().resize_(C).zero_()
            xsquare = input.new().resize_(C).zero_()
        if isinstance(input, torch.cuda.FloatTensor):
            with torch.cuda.device_of(input):
                encoding_lib.Encoding_Float_sum_square_Forward(

After Change

    @staticmethod
    def forward(ctx, input):
        ctx.save_for_backward(input)
        if input.is_cuda:
            xsum, xsqusum = lib.gpu.sumsquare_forward(input)
        else:
            raise NotImplemented
        return xsum, xsqusum

    @staticmethod
    def backward(ctx, gradSum, gradSquare):

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4
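
A note on the non-CUDA branch of the After Change snippet: it uses `raise NotImplemented`. In Python 3, `NotImplemented` is a constant rather than an exception class, so that statement raises a `TypeError` instead of the `NotImplementedError` that was probably intended. The sketch below illustrates the difference in isolation. The helper names (`raise_constant`, `raise_exception_class`, `error_type`) are hypothetical and are not part of either project.

```python
def raise_constant():
    # Mirrors the `else` branch in the snippet: NotImplemented is a
    # constant, not an exception class, so Python 3 rejects it.
    raise NotImplemented


def raise_exception_class():
    # The exception class usually meant for "this path is not supported".
    raise NotImplementedError("CPU path is not implemented")


def error_type(fn):
    # Call fn and report the name of the exception type it raised.
    try:
        fn()
    except Exception as exc:
        return type(exc).__name__
    return None


print(error_type(raise_constant))
print(error_type(raise_exception_class))
```

Either form stops execution on CPU tensors, but only `NotImplementedError` yields an error message that describes the actual problem.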

Instances

Project Name: zhanghang1989/PyTorch-Encoding

Commit Name: 2d21747abdbf1968a9d565a4090d5d6297ade71a

Time: 2018-06-04

Author: zhang.hang@rutgers.edu

File Name: encoding/functions/syncbn.py

Class Name: _sum_square

Method Name: forward

Project Name: zhanghang1989/PyTorch-Encoding

Commit Name: 2d21747abdbf1968a9d565a4090d5d6297ade71a

Time: 2018-06-04

Author: zhang.hang@rutgers.edu

File Name: encoding/functions/syncbn.py

Class Name: _sum_square

Method Name: backward

Project Name: kymatio/kymatio

Commit Name: d51512dcf397a6b6a70fe46885f1b5acd45205b3

Time: 2018-11-21

Author: edouard.oyallon@centralesupelec.fr

File Name: scattering/scattering2d/utils.py

Class Name: Modulus

Method Name: __call__