4c3f59b70e98acf5bca2c1c26ebee5a018be0de4,torch/quantization/fx/quantization_patterns.py,Add,convert,#Add#Any#Any#Any#Any#Any#,92

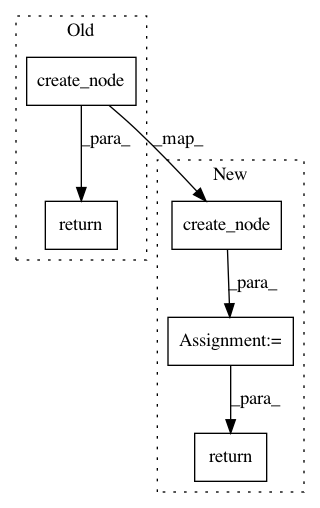

Before Change

    else:
        op = torch.ops.quantized.add
        kwargs = {**self.add_node.kwargs, "scale": scale, "zero_point": zero_point}
        return quantizer.quantized_graph.create_node(
            "call_function", op, load_arg(quantized=True)(self.add_node.args), kwargs)

    # TODO: merge with Add
    @register_quant_pattern(operator.mul)
    @register_quant_pattern(torch.mul)

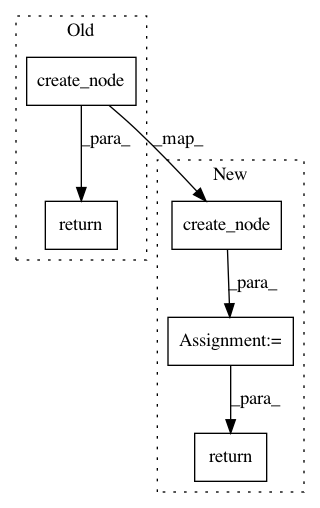

After Change

        op = torch.ops.quantized.add
        kwargs = {**self.add_node.kwargs}
        add_args = (*load_arg(quantized=True)(self.add_node.args), scale_arg, zero_point_arg)
        op = quantizer.quantized_graph.create_node(
            "call_function", op, add_args, kwargs)
        return op

    # TODO: merge with Add
    @register_quant_pattern(operator.mul)
    @register_quant_pattern(torch.mul)
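
The change moves `scale` and `zero_point` out of the keyword arguments and appends them to the positional argument tuple. The sketch below isolates just that argument-building step in plain Python. It is an illustration under assumptions, not the PyTorch implementation: `build_call_before`, `build_call_after`, and `toy_quantized_add` are hypothetical names, and plain numbers stand in for the graph nodes and for `scale_arg` / `zero_point_arg`, whose construction the excerpt does not show.

```python
# Hypothetical stand-ins for illustration only; none of these are torch.fx APIs.

def build_call_before(node_args, node_kwargs, scale, zero_point):
    """Old style: scale and zero_point are merged into the kwargs dict."""
    kwargs = {**node_kwargs, "scale": scale, "zero_point": zero_point}
    return tuple(node_args), kwargs


def build_call_after(node_args, node_kwargs, scale_arg, zero_point_arg):
    """New style: scale and zero_point are appended as positional args."""
    kwargs = {**node_kwargs}
    add_args = (*node_args, scale_arg, zero_point_arg)
    return add_args, kwargs


def toy_quantized_add(a, b, scale, zero_point):
    """Toy target op (assumption): add two reals, then quantize the sum."""
    return round((a + b) / scale) + zero_point


# Both conventions bind the same four parameters of the target op.
before_args, before_kwargs = build_call_before((1.0, 2.0), {}, 0.5, 3)
after_args, after_kwargs = build_call_after((1.0, 2.0), {}, 0.5, 3)

result_before = toy_quantized_add(*before_args, **before_kwargs)
result_after = toy_quantized_add(*after_args, **after_kwargs)
```

In this toy setting both conventions produce the same call; the difference is only where the two quantization parameters sit in the node's argument list.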

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 5

Instances

Project Name: pytorch/pytorch

Commit Name: 4c3f59b70e98acf5bca2c1c26ebee5a018be0de4

Time: 2021-01-28

Author: supriyar@fb.com

File Name: torch/quantization/fx/quantization_patterns.py

Class Name: Add

Method Name: convert

Project Name: pytorch/pytorch

Commit Name: 916af892b3e7bfa43cab877ee3f2fd7a50f576a8

Time: 2021-01-28

Author: supriyar@fb.com

File Name: torch/quantization/fx/quantization_patterns.py

Class Name: LinearReLUQuantizeHandler

Method Name: convert

Project Name: pytorch/pytorch

Commit Name: 916af892b3e7bfa43cab877ee3f2fd7a50f576a8

Time: 2021-01-28

Author: supriyar@fb.com

File Name: torch/quantization/fx/quantization_patterns.py

Class Name: ConvRelu

Method Name: convert

Project Name: pytorch/pytorch

Commit Name: 0818dbf49db7df046ddcf6e4335feebefb34d3ad

Time: 2021-02-27

Author: jerryzh@fb.com

File Name: torch/quantization/fx/quantization_patterns.py

Class Name: Mul

Method Name: convert