a19f102c65b943a668616df5f3b46cfb4376e04c,allennlp/modules/augmented_lstm.py,AugmentedLstm,forward,#AugmentedLstm#Any#Any#,69

Before Change

```python
output_accumulator = Variable(torch.zeros([batch_size, total_timesteps, self.hidden_size]))

if initial_state is None:
    full_batch_previous_memory = Variable(torch.zeros([batch_size, self.hidden_size]))
    full_batch_previous_state = Variable(torch.zeros([batch_size, self.hidden_size]))
else:
    full_batch_previous_state = initial_state[0].squeeze(0)
    full_batch_previous_memory = initial_state[1].squeeze(0)
```

After Change

```python
output_accumulator = Variable(sequence_tensor.data.new()
                              .resize_(batch_size, total_timesteps, self.hidden_size).fill_(0))

if initial_state is None:
    full_batch_previous_memory = Variable(sequence_tensor.data.new()
                                          .resize_(batch_size, self.hidden_size).fill_(0))
    full_batch_previous_state = Variable(sequence_tensor.data.new()
                                         .resize_(batch_size, self.hidden_size).fill_(0))
else:
```

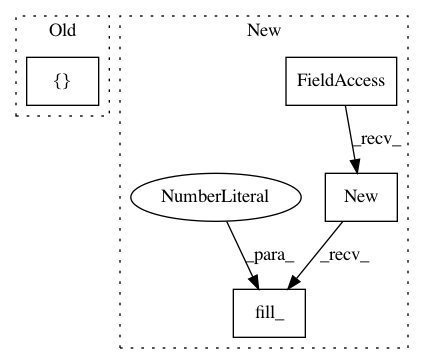

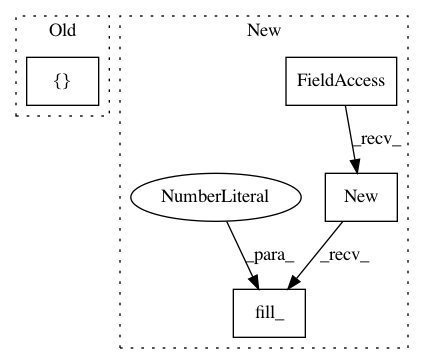

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4
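In this change, the zero buffers are allocated through `sequence_tensor.data.new()` instead of `torch.zeros`. As a result, they take on the input's tensor type (for example, a CUDA float tensor when the input is on the GPU). By contrast, `torch.zeros` by default yields a CPU float tensor. Below is a minimal sketch of the same idea in current PyTorch. It assumes PyTorch 0.4 or later, where `Variable` is merged into `Tensor` and `Tensor.new_zeros` is available. The helper name `init_lstm_buffers` and its parameters are hypothetical and only mirror the variable names in the snippet.

```python
import torch


def init_lstm_buffers(sequence_tensor, total_timesteps, hidden_size):
    """Hypothetical helper: zero buffers matching sequence_tensor's dtype and device."""
    batch_size = sequence_tensor.size(0)
    # new_zeros inherits dtype and device from sequence_tensor, so the same
    # code works whether the input lives on the CPU or on a GPU.
    output_accumulator = sequence_tensor.new_zeros((batch_size, total_timesteps, hidden_size))
    previous_memory = sequence_tensor.new_zeros((batch_size, hidden_size))
    previous_state = sequence_tensor.new_zeros((batch_size, hidden_size))
    return output_accumulator, previous_memory, previous_state


# Example input: 2 sequences, 5 timesteps, 3 input features, stored as float64.
inputs = torch.randn(2, 5, 3, dtype=torch.float64)
acc, mem, state = init_lstm_buffers(inputs, total_timesteps=5, hidden_size=4)
print(acc.shape, acc.dtype, acc.device)  # torch.Size([2, 5, 4]) torch.float64 cpu
```

`new_zeros` arrived in PyTorch 0.4, after these 2017 commits. Before that release, the `.new().resize_(...).fill_(0)` chain was a common way to get the same type-following behaviour.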

Instances

Project Name: allenai/allennlp

Commit Name: a19f102c65b943a668616df5f3b46cfb4376e04c

Time: 2017-08-07

Author: markn@allenai.org

File Name: allennlp/modules/augmented_lstm.py

Class Name: AugmentedLstm

Method Name: forward

Project Name: rusty1s/pytorch_geometric

Commit Name: 85299abb1ccec56c914337d45f461d2f68e24c2b

Time: 2017-11-18

Author: matthias.fey@tu-dortmund.de

File Name: examples/cora.py

Class Name:

Method Name:

Project Name: allenai/allennlp

Commit Name: a2878a883280dc0525b57ae800d7b3c719f6046c

Time: 2017-10-21

Author: mattg@allenai.org

File Name: allennlp/models/encoder_decoders/simple_seq2seq.py

Class Name: SimpleSeq2Seq

Method Name: forward