50b8edd467b79d7f649e5e1a6c583a4cd8db17ec,framework/Optimizers/GradientBasedOptimizer.py,GradientBasedOptimizer,evaluateGradient,#GradientBasedOptimizer#Any#Any#,181

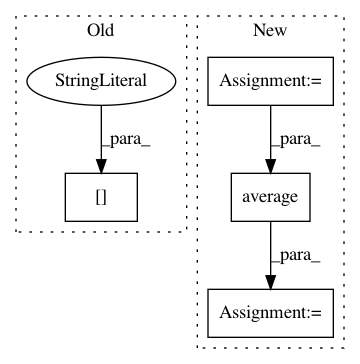

Before Change

```python
opt = optVarsValues[i*2]  # the latest opt point
pert = optVarsValues[i*2 + 1]  # the perturbed point
# calculate grad(F) wrt each input variable
lossDiff = pert["output"] - opt["output"]
# cover "max" problems
# TODO it would be good to cover this in the base class somehow, but in the previous implementation this
# sign flipping was only called when evaluating the gradient.
if self.optType == "max":
```

After Change

```python
gradArray[var] = np.zeros(2)  # why are we initializing to this?
# Evaluate gradient at each point
# first, get average opt point
optOutAvg = np.average(list(optVarsValues[i*2]["output"] for i in range(self.gradDict["numIterForAve"])))
# then, evaluate gradients
for i in range(self.gradDict["numIterForAve"]):
    opt = optVarsValues[i*2]  # the latest opt point
    pert = optVarsValues[i*2 + 1]  # the perturbed point
```

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: idaholab/raven

Commit Name: 50b8edd467b79d7f649e5e1a6c583a4cd8db17ec

Time: 2017-07-16

Author: paul.talbot@inl.gov

File Name: framework/Optimizers/GradientBasedOptimizer.py

Class Name: GradientBasedOptimizer

Method Name: evaluateGradient

Project Name: etal/cnvkit

Commit Name: b1d2e8e320c357fc131a52b0d298ef7b3835fbeb

Time: 2015-05-24

Author: eric.talevich@gmail.com

File Name: cnvlib/core.py

Class Name:

Method Name: get_relative_chrx_cvg

Project Name: ilastik/ilastik

Commit Name: 589442365d133fb993b4ea14b45a9123c6973e46

Time: 2012-09-13

Author: christoph.straehle@iwr.uni-heidelberg.de

File Name: lazyflow/operators/obsolete/classifierOperators.py

Class Name: OpPredictRandomForest

Method Name: execute
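In the RAVEN instance, the after-change fragment first averages the optimum-point outputs over `numIterForAve` samples and then loops over the interleaved optimum/perturbed pairs, whereas the before-change fragment differences each perturbed point against its own paired optimum. The sketch below contrasts the two under stated assumptions; it is not RAVEN's implementation. The helper name `loss_differences` and its parameters are hypothetical; points are assumed to be dicts with an `"output"` entry interleaved as `[opt_0, pert_0, opt_1, pert_1, ...]` (mirroring the `optVarsValues[i*2]` / `optVarsValues[i*2 + 1]` indexing); the averaged value is assumed to replace the paired optimum in the difference (the after-change excerpt stops before that line); and the "max" sign flip is assumed to be a plain negation.

```python
import numpy as np


def loss_differences(opt_vars_values, num_iter_for_ave, opt_type="min", use_average=True):
    """Hypothetical helper: per-sample loss differences for a forward-difference gradient.

    Assumes opt_vars_values interleaves [opt_0, pert_0, opt_1, pert_1, ...],
    each point being a dict with an "output" entry.
    """
    # Average the objective over every sampled copy of the optimum point.
    opt_out_avg = np.average([opt_vars_values[i * 2]["output"] for i in range(num_iter_for_ave)])
    diffs = []
    for i in range(num_iter_for_ave):
        opt = opt_vars_values[i * 2]       # the latest opt point for sample i
        pert = opt_vars_values[i * 2 + 1]  # the matching perturbed point
        # Assumption: the averaged optimum replaces the paired one (after change);
        # use_average=False reproduces the paired difference (before change).
        reference = opt_out_avg if use_average else opt["output"]
        loss_diff = pert["output"] - reference
        # Assumption: "max" problems are handled by negating the difference,
        # so that maximizing F becomes minimizing -F.
        if opt_type == "max":
            loss_diff = -loss_diff
        diffs.append(loss_diff)
    return np.array(diffs)


if __name__ == "__main__":
    points = [{"output": 1.0}, {"output": 3.0}, {"output": 2.0}, {"output": 5.0}]
    print(loss_differences(points, 2))                     # averaged reference
    print(loss_differences(points, 2, use_average=False))  # paired reference
```

With optimum outputs 1.0 and 2.0 and perturbed outputs 3.0 and 5.0, the averaged optimum is 1.5, so the averaged differences are 1.5 and 3.5, while the paired differences are 2.0 and 3.0. Using one averaged reference means noise in any single optimum evaluation no longer enters only its own pair's difference.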