2784339dddeecd94dbab074140ba8e478b42f653,opennmt/decoders/self_attention_decoder.py,SelfAttentionDecoder,_run,#SelfAttentionDecoder#Any#Any#Any#Any#Any#Any#Any#,87

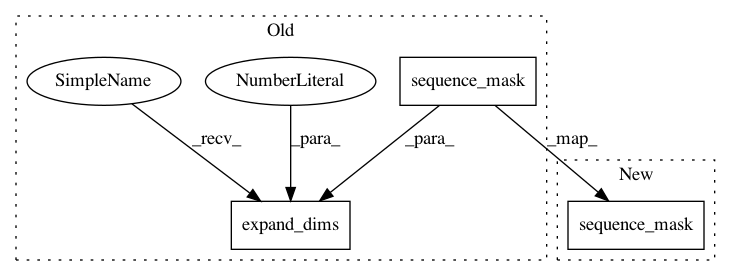

Before Change

memory_sequence_length = (memory_sequence_length,)
memory_mask = []
for mem, mem_length in zip(memory, memory_sequence_length):
    mem_mask = tf.sequence_mask(mem_length, maxlen=tf.shape(mem)[1], dtype=tf.float32)
    mem_mask = tf.expand_dims(mem_mask, 1)
    memory_mask.append(mem_mask)

# Run each layer.
new_cache = []

After Change

if not isinstance(memory_sequence_length, (list, tuple)):
    memory_sequence_length = (memory_sequence_length,)
memory_mask = [
    tf.sequence_mask(mem_length, maxlen=tf.shape(mem)[1])
    for mem, mem_length in zip(memory, memory_sequence_length)]

# Run each layer.
new_cache = []

In pattern: SUPERPATTERN

Frequency: 5

Non-data size: 3
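
The difference between the "Before Change" and "After Change" snippets can be illustrated with a minimal pure-Python sketch. It is not the OpenNMT-tf implementation: `sequence_mask` is a hypothetical stand-in that mimics the documented behavior of `tf.sequence_mask` on nested lists, `build_memory_masks` is an illustrative name, and `array("i", ...)` is only used to model a lengths tensor (something that is neither a list nor a tuple).

```python
from array import array


def sequence_mask(lengths, maxlen):
    # Hypothetical stand-in for tf.sequence_mask:
    # row i is True at every position j < lengths[i].
    return [[j < length for j in range(maxlen)] for length in lengths]


def build_memory_masks(memory, memory_sequence_length):
    # A single lengths vector (modelled here as array("i"), standing in
    # for a tensor) is wrapped in a tuple so that one memory and several
    # memories go through the same code path.
    if not isinstance(memory_sequence_length, (list, tuple)):
        memory_sequence_length = (memory_sequence_length,)
    # One boolean mask per memory, built in a single comprehension.
    # len(mem[0]) plays the role of tf.shape(mem)[1] (the time dimension).
    return [
        sequence_mask(mem_length, maxlen=len(mem[0]))
        for mem, mem_length in zip(memory, memory_sequence_length)]


# One memory with batch size 2 and 4 time steps; the values are placeholders.
memory = ([[0.0] * 4, [0.0] * 4],)
masks = build_memory_masks(memory, array("i", [2, 4]))
print(masks[0])  # [[True, True, False, False], [True, True, True, True]]
```

Compared with the "Before Change" loop, the masks stay boolean (no `dtype=tf.float32`) and no extra axis is added with `tf.expand_dims`. The excerpt does not show where the cast or broadcast happens afterwards; the attention-related test methods listed for the same commit suggest that this handling moved into the attention code, but that is an inference from the instance list.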

Instances

Project Name: OpenNMT/OpenNMT-tf

Commit Name: 2784339dddeecd94dbab074140ba8e478b42f653

Time: 2019-08-29

Author: guillaume.klein@systrangroup.com

File Name: opennmt/decoders/self_attention_decoder.py

Class Name: SelfAttentionDecoder

Method Name: _run

Project Name: OpenNMT/OpenNMT-tf

Commit Name: 2784339dddeecd94dbab074140ba8e478b42f653

Time: 2019-08-29

Author: guillaume.klein@systrangroup.com

File Name: opennmt/tests/transformer_test.py

Class Name: TransformerTest

Method Name: testMultiHeadAttentionMask

Project Name: OpenNMT/OpenNMT-tf

Commit Name: 2784339dddeecd94dbab074140ba8e478b42f653

Time: 2019-08-29

Author: guillaume.klein@systrangroup.com

File Name: opennmt/tests/transformer_test.py

Class Name: TransformerTest

Method Name: testMultiHeadSelfAttention

Project Name: OpenNMT/OpenNMT-tf

Commit Name: 2784339dddeecd94dbab074140ba8e478b42f653

Time: 2019-08-29

Author: guillaume.klein@systrangroup.com

File Name: opennmt/tests/transformer_test.py

Class Name: TransformerTest

Method Name: testMultiHeadAttentionWithCache

Project Name: OpenNMT/OpenNMT-tf

Commit Name: 2784339dddeecd94dbab074140ba8e478b42f653

Time: 2019-08-29

Author: guillaume.klein@systrangroup.com

File Name: opennmt/tests/transformer_test.py

Class Name: TransformerTest

Method Name: testMultiHeadAttention