47966c5e49342ef9ff53c6db75a4905ffe864e4d,pyannote/audio/models.py,TripletLossSequenceEmbedding,_embedding,#TripletLossSequenceEmbedding#Any#,134

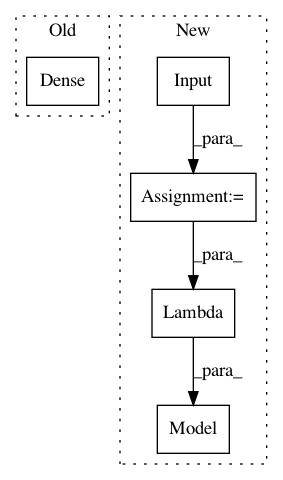

Before Change

    model.add(layer)

    # stack final dense layer
    layer = Dense(self.output_dim, activation="tanh")
    model.add(layer)

    # stack L2 normalization layer
    model.add(Lambda(lambda x: K.l2_normalize(x, axis=-1)))

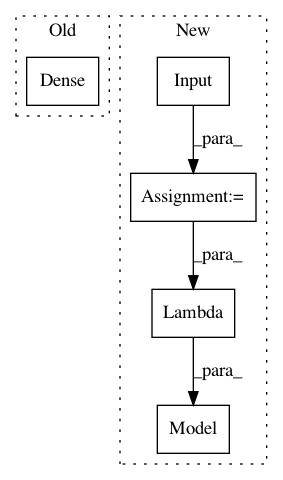

After Change

    def _embedding(self, input_shape):

        inputs = Input(shape=input_shape)
        x = inputs

        # stack LSTM layers
        n_lstm = len(self.lstm)
        for i, output_dim in enumerate(self.lstm):
            return_sequences = i+1 < n_lstm
            if i:
                x = LSTM(output_dim=output_dim,
                         return_sequences=return_sequences,
                         activation="tanh")(x)
            else:
                x = LSTM(input_shape=input_shape,
                         output_dim=output_dim,
                         return_sequences=return_sequences,
                         activation="tanh")(x)

        # stack dense layers
        for i, output_dim in enumerate(self.dense):
            x = Dense(output_dim, activation="tanh")(x)

        # stack final dense layer
        x = Dense(self.output_dim, activation="tanh")(x)

        # stack L2 normalization layer
        embeddings = Lambda(lambda x: K.l2_normalize(x, axis=-1))(x)

        return Model(input=inputs, output=embeddings)

    def _triplet_loss(self, inputs):
        p = K.sum(K.square(inputs[0] - inputs[1]), axis=-1, keepdims=True)
        n = K.sum(K.square(inputs[0] - inputs[2]), axis=-1, keepdims=True)
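
The two quantities computed at the start of `_triplet_loss` are squared Euclidean distances between L2-normalized embeddings. The following is a minimal pure-Python sketch of that arithmetic, not the project's implementation. It assumes that `inputs` holds the anchor, positive, and negative embeddings in that order (the excerpt does not state this), and the helper names `l2_normalize` and `squared_distance` are hypothetical. The Keras version also keeps a trailing axis of size 1 (`keepdims=True`), whereas this sketch returns plain scalars.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length (same idea as K.l2_normalize)."""
    norm = math.sqrt(sum(v * v for v in vector))
    # Assumption for this sketch: leave an all-zero vector unchanged.
    return [v / norm for v in vector] if norm > 0 else list(vector)


def squared_distance(a, b):
    """Sum of squared element-wise differences, like K.sum(K.square(a - b))."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Hypothetical two-dimensional embeddings, normalized as in `_embedding`.
anchor = l2_normalize([3.0, 4.0])
positive = l2_normalize([4.0, 3.0])
negative = l2_normalize([-3.0, -4.0])

p = squared_distance(anchor, positive)  # anchor vs. positive
n = squared_distance(anchor, negative)  # anchor vs. negative
```

Because every embedding has unit length after normalization, both distances fall between 0 and 4, which keeps `p` and `n` on a fixed scale regardless of the network's raw outputs.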

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: pyannote/pyannote-audio

Commit Name: 47966c5e49342ef9ff53c6db75a4905ffe864e4d

Time: 2016-06-21

Author: bredin@limsi.fr

File Name: pyannote/audio/models.py

Class Name: TripletLossSequenceEmbedding

Method Name: _embedding

Project Name: nl8590687/ASRT_SpeechRecognition

Commit Name: 5f73fe0599380479a37029de1d5647f33aae18c8

Time: 2017-09-04

Author: 3210346136@qq.com

File Name: main.py

Class Name: ModelSpeech

Method Name: CreateModel

Project Name: deepchem/deepchem

Commit Name: b68db1aaf6abe4d2cea8321cc6f1564228dd60f5

Time: 2019-05-31

Author: peastman@stanford.edu

File Name: deepchem/models/tensorgraph/models/seqtoseq.py

Class Name: SeqToSeq

Method Name: _create_encoder