e8b348915f3fe67d79f75c4399606821b413e5a1,sonnet/src/rmsprop.py,ReferenceRMSProp,apply,#ReferenceRMSProp#Any#Any#,202

Before Change

lengths, or have inconsistent types.

optimizer_utils.check_updates_parameters(updates, parameters)

for update, parameter in zip(updates, parameters):

// TODO(petebu): Add support for sparse tensors.

// TODO(petebu): Consider caching learning_rate cast.

// TODO(petebu): Consider the case when all updates are None.

if update is not None:

optimizer_utils.check_same_dtype(update, parameter)

mom, ms, mg = self._get_or_create_moving_vars(parameter)

learning_rate = tf.cast(self.learning_rate, update.dtype.base_dtype)

decay = tf.cast(self.decay, update.dtype.base_dtype)

momentum = tf.cast(self.momentum, update.dtype.base_dtype)

epsilon = tf.cast(self.epsilon, update.dtype.base_dtype)

// TODO(petebu): Use a tf.CriticalSection for the assignments.

ms.assign(tf.square(update) * (1. - decay) + ms * decay)

if self.centered:

mg.assign(update * (1. - decay) + mg * decay)

denominator = ms - mg + epsilon

else:

denominator = ms + epsilon

mom.assign(momentum * mom + (

learning_rate * update * tf.math.rsqrt(denominator)))

parameter.assign_sub(mom)

After Change

optimizer_utils.check_updates_parameters(updates, parameters)

self._initialize(parameters)

for update, parameter, mom, ms, mg in six.moves.zip_longest(

updates, parameters, self.mom, self.ms, self.mg):

// TODO(petebu): Add support for sparse tensors.

// TODO(petebu): Consider caching learning_rate cast.

// TODO(petebu): Consider the case when all updates are None.

if update is not None:

optimizer_utils.check_same_dtype(update, parameter)

learning_rate = tf.cast(self.learning_rate, update.dtype.base_dtype)

decay = tf.cast(self.decay, update.dtype.base_dtype)

momentum = tf.cast(self.momentum, update.dtype.base_dtype)

epsilon = tf.cast(self.epsilon, update.dtype.base_dtype)

// TODO(petebu): Use a tf.CriticalSection for the assignments.

ms.assign(tf.square(update) * (1. - decay) + ms * decay)

if self.centered:

mg.assign(update * (1. - decay) + mg * decay)

denominator = ms - mg + epsilon

else:

denominator = ms + epsilon

mom.assign(momentum * mom + (

learning_rate * update * tf.math.rsqrt(denominator)))

parameter.assign_sub(mom)

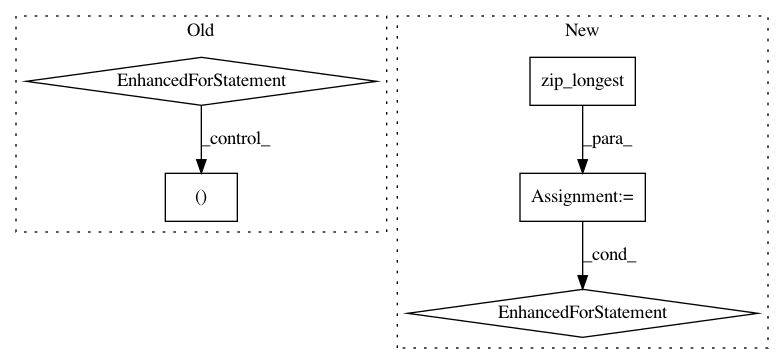

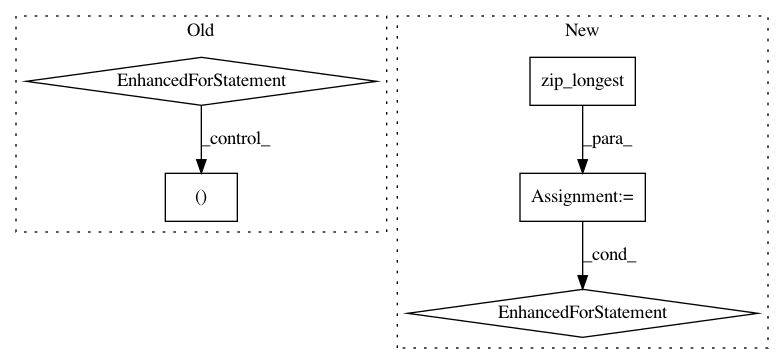

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: deepmind/sonnet

Commit Name: e8b348915f3fe67d79f75c4399606821b413e5a1

Time: 2019-05-31

Author: petebu@google.com

File Name: sonnet/src/rmsprop.py

Class Name: ReferenceRMSProp

Method Name: apply

Project Name: OpenNMT/OpenNMT-py

Commit Name: f6f6ee1df8d619d9816a5296bebca5736fa952bf

Time: 2017-09-21

Author: bpeters@coli.uni-saarland.de

File Name: translate.py

Class Name:

Method Name: main

Project Name: deepmind/sonnet

Commit Name: e8b348915f3fe67d79f75c4399606821b413e5a1

Time: 2019-05-31

Author: petebu@google.com

File Name: sonnet/src/rmsprop.py

Class Name: RMSProp

Method Name: apply