86ce5d52134a56806112ff8664e4034338e0e05a,yarll/agents/ppo/ppo.py,PPO,learn,#PPO#,208

Before Change

}
results = self.session.run(fetches, feed_dict)
if (n_updates % 1000) == 0:
    self.writer.add_summary(results[-1], n_updates)
n_updates += 1
self.writer.flush()
if self.config["save_model"]:
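In the Before Change hunk, `add_summary` is called only when `n_updates % 1000 == 0`, while `n_updates` is incremented after every update. Summaries therefore land at steps 0, 1000, 2000, and so on. The sketch below reproduces just that throttling arithmetic with the standard library. `RecordingWriter` and `run_updates` are hypothetical names, and the writer only records calls instead of writing TensorBoard event files.

```python
class RecordingWriter:
    """Hypothetical stand-in for a TF1-style summary writer: it only records calls."""

    def __init__(self):
        self.records = []  # (step, summary) pairs passed to add_summary
        self.flushed = 0   # number of flush() calls

    def add_summary(self, summary, step):
        self.records.append((step, summary))

    def flush(self):
        self.flushed += 1


def run_updates(writer, total_updates, log_every=1000):
    """Mimic the hunk: count every update, but write a summary only every `log_every` updates."""
    n_updates = 0
    for _ in range(total_updates):
        # The hunk writes results[-1] only on these steps; here a placeholder string stands in.
        if (n_updates % log_every) == 0:
            writer.add_summary(f"summary@{n_updates}", n_updates)
        n_updates += 1
    writer.flush()
    return n_updates


if __name__ == "__main__":
    w = RecordingWriter()
    run_updates(w, 2500)
    print([step for step, _ in w.records])  # [0, 1000, 2000]
```

Note that the step passed to the writer is the update counter, not the iteration number, so the spacing between logged points depends on how many minibatch updates each iteration performs.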

After Change

        """Run learning algorithm"""
        config = self.config
        n_updates = 0
        with self.writer.as_default():
            for _ in range(int(config["n_iter"])):
                # Collect trajectories until we get timesteps_per_batch total timesteps
                states, actions, advs, rs, _ = self.get_processed_trajectories()
                advs = np.array(advs)
                advs = (advs - advs.mean()) / advs.std()
                self.set_old_to_new()
                indices = np.arange(len(states))
                for _ in range(int(self.config["n_epochs"])):
                    np.random.shuffle(indices)
                    batch_size = int(self.config["batch_size"])
                    for j in range(0, len(states), batch_size):
                        batch_indices = indices[j:(j + batch_size)]
                        batch_states = np.array(states)[batch_indices]
                        batch_actions = np.array(actions)[batch_indices]
                        batch_advs = np.array(advs)[batch_indices]
                        batch_rs = np.array(rs)[batch_indices]
                        train_actor_loss, train_critic_loss, train_loss = self.train(
                            batch_states, batch_actions, batch_advs, batch_rs)
                        tf.summary.scalar("model/loss", train_loss, step=n_updates)
                        tf.summary.scalar("model/actor_loss", train_actor_loss, step=n_updates)
                        tf.summary.scalar("model/critic_loss", train_critic_loss, step=n_updates)
                        n_updates += 1
                if self.config["save_model"]:
                    tf.saved_model.save(self.ac_net, os.path.join(self.monitor_path, "model"))

class PPODiscrete(PPO):
    def build_networks(self) -> ActorCriticNetwork:
        return ActorCriticNetworkDiscrete(
            self.env.action_space.n,
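In the After Change version, the writer is no longer passed to each logging call: `learn` enters `self.writer.as_default()` once, and every `tf.summary.scalar(name, value, step=n_updates)` inside that block is routed to the current default writer. The stdlib-only toy below imitates that routing with a context manager and a stack of writers. `ToyWriter`, `as_default`, and `scalar` here are hypothetical stand-ins that only mimic the shape of the TF 2 API, not how TensorFlow implements it; returning `False` when no default writer is active is meant to resemble TF 2's documented behavior.

```python
import contextlib

_default_writers = []  # stack of writers made default by as_default()


class ToyWriter:
    """Hypothetical writer that keeps scalar events in memory."""

    def __init__(self):
        self.events = []  # (name, value, step) triples

    @contextlib.contextmanager
    def as_default(self):
        # Push this writer so module-level scalar() calls route to it,
        # and pop it on exit even if the body raises.
        _default_writers.append(self)
        try:
            yield self
        finally:
            _default_writers.pop()


def scalar(name, value, step):
    """Write to the current default writer; return False if there is none."""
    if not _default_writers:
        return False
    _default_writers[-1].events.append((name, float(value), step))
    return True


if __name__ == "__main__":
    writer = ToyWriter()
    n_updates = 0
    with writer.as_default():
        for loss in (0.9, 0.7, 0.4):
            scalar("model/loss", loss, step=n_updates)
            n_updates += 1
    print(writer.events)  # [('model/loss', 0.9, 0), ('model/loss', 0.7, 1), ('model/loss', 0.4, 2)]
    print(scalar("model/loss", 0.1, step=n_updates))  # False: no default writer outside the block
```

Using a stack rather than a single global slot lets nested `as_default()` blocks restore the previous writer when they exit.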
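The data handling inside the After Change loop follows a recipe commonly used in PPO implementations: standardize the advantages once per iteration, then, for each of `n_epochs` epochs, reshuffle the sample indices and walk over them in slices of `batch_size`. A stdlib-only sketch of those two steps follows. The function names are hypothetical, and the `eps` term is an assumption added here; it is not in the hunk, which divides by `advs.std()` directly.

```python
import random
import statistics


def normalize_advantages(advs, eps=1e-8):
    """Standardize advantages to zero mean and (approximately) unit std.

    Uses the population std (ddof=0), which matches NumPy's default `std()`.
    `eps` is an added assumption that avoids division by zero for constant input.
    """
    mean = statistics.mean(advs)
    std = statistics.pstdev(advs)
    return [(a - mean) / (std + eps) for a in advs]


def minibatch_indices(n_samples, batch_size, n_epochs, rng=None):
    """Yield lists of sample indices: reshuffle once per epoch, then slice into batches."""
    rng = rng or random.Random()
    indices = list(range(n_samples))
    for _ in range(n_epochs):
        rng.shuffle(indices)  # in-place shuffle, like np.random.shuffle(indices)
        for j in range(0, n_samples, batch_size):
            yield indices[j:j + batch_size]  # slicing copies, so later shuffles don't alter it


if __name__ == "__main__":
    print(normalize_advantages([1.0, 2.0, 3.0, 4.0]))
    for batch in minibatch_indices(5, 2, 1, random.Random(0)):
        print(batch)
```

Because the index list is shuffled in place at the start of each epoch, every epoch visits each sample exactly once, and the last batch of an epoch may be smaller than `batch_size`.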

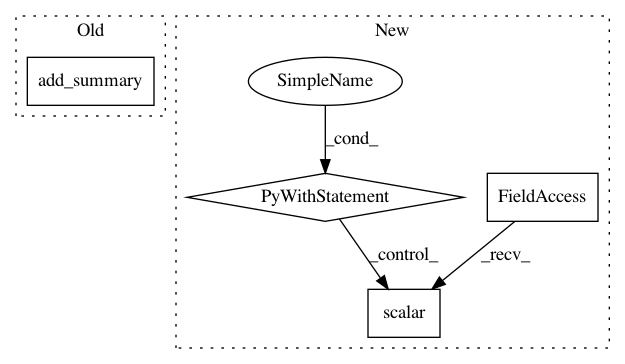

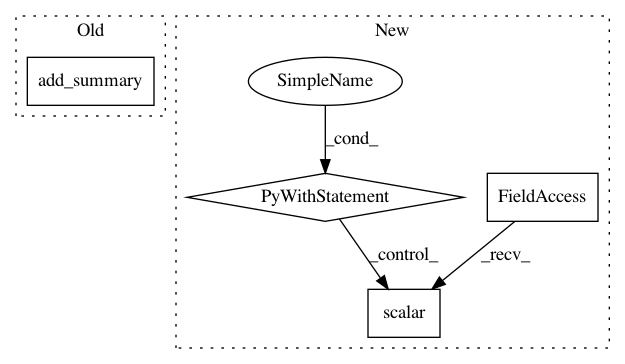

In pattern: SUPERPATTERN
Frequency: 3
Non-data size: 4

Instances

Project Name: arnomoonens/yarll
Commit Name: 86ce5d52134a56806112ff8664e4034338e0e05a
Time: 2019-03-21
Author: arno.moonens@gmail.com
File Name: yarll/agents/ppo/ppo.py
Class Name: PPO
Method Name: learn

Project Name: Kamnitsask/deepmedic
Commit Name: a564a1390f609f0a5083b0865245a6a8238ecf74
Time: 2020-01-04
Author: konstantinos.kamnitsas12@imperial.ac.uk
File Name: deepmedic/logging/tensorboard_logger.py
Class Name: TensorboardLogger
Method Name: add_summary

Project Name: deepchem/deepchem
Commit Name: 6f2393729908116a3528bdbc70f49aa9781b3bf9
Time: 2020-01-27
Author: peastman@stanford.edu
File Name: deepchem/models/keras_model.py
Class Name: KerasModel
Method Name: fit_generator