e3fcbb639e115e8afe9600bd06aee81acfda6704,reagent/training/world_model/seq2reward_trainer.py,Seq2RewardTrainer,get_loss,#Seq2RewardTrainer#Any#,57

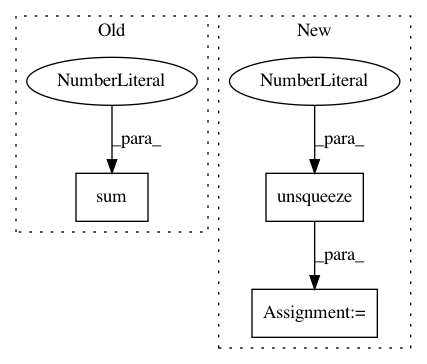

Before Change

```python
# gamma_mask: (seq_len, batch_size) after the transpose
gamma_mask = torch.Tensor(
    [[gamma ** i for i in range(seq_len)] for _ in range(batch_size)]
).transpose(0, 1)

target_acc_reward = torch.sum(target_rewards * gamma_mask, 0).unsqueeze(1)

# make sure the prediction and target tensors have the same size
# the size should both be (BATCH_SIZE, 1) in this case.
assert (
```

After Change

```python
)

# cumulative discounted reward along the time axis (dim 0)
target_acc_rewards = torch.cumsum(training_batch.reward * gamma_mask, dim=0)
# for each sample, pick the entry at time index valid_reward_len - 1
target_acc_reward = target_acc_rewards[
    valid_reward_len - 1, torch.arange(batch_size)
].unsqueeze(1)

# make sure the prediction and target tensors have the same size
# the size should both be (BATCH_SIZE, 1) in this case.
assert (
```

In pattern: SUPERPATTERN

Frequency: 5

Non-data size: 3

Instances:

Project Name: facebookresearch/Horizon

Commit Name: e3fcbb639e115e8afe9600bd06aee81acfda6704

Time: 2020-10-13

Author: czxttkl@fb.com

File Name: reagent/training/world_model/seq2reward_trainer.py

Class Name: Seq2RewardTrainer

Method Name: get_loss

Project Name: ruotianluo/self-critical.pytorch

Commit Name: adae08c077d07f6da6c95d995d8211cf9e9590e4

Time: 2019-04-11

Author: rluo@ttic.edu

File Name: eval_utils.py

Class Name:

Method Name: eval_split

Project Name: maciejkula/spotlight

Commit Name: 70e4d7fe60a9658bb27b9f5fb67592a1222b2ec3

Time: 2017-07-06

Author: maciej.kula@gmail.com

File Name: spotlight/sequence/representations.py

Class Name: PoolNet

Method Name: user_representation

Project Name: cornellius-gp/gpytorch

Commit Name: f76a4dabb4cd38ee58d01a35c5b511e224d060d2

Time: 2018-09-17

Author: gpleiss@gmail.com

File Name: gpytorch/lazy/sum_batch_lazy_tensor.py

Class Name: SumBatchLazyTensor

Method Name: _matmul
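The Before Change and After Change snippets of `Seq2RewardTrainer.get_loss` differ in how the target accumulated reward is formed: the old code sums the discounted rewards over every time step, while the new code takes a running (cumulative) sum along the time axis and reads it at index `valid_reward_len - 1` for each batch element. The sketch below mirrors both in plain Python so it runs without PyTorch. It assumes rewards are laid out as `(seq_len, batch_size)`, as the transpose and the indexing in the snippets suggest, and that `valid_reward_len` holds one 1-based length per sample. The function names and the sample data are hypothetical, not taken from the commit.

```python
from itertools import accumulate
from typing import List

# Hypothetical helpers mirroring the snippets in plain Python (no PyTorch).
# rewards[t][b] is the reward at time step t for batch element b,
# i.e. the (seq_len, batch_size) layout implied by the transpose.


def discount_factors(gamma: float, seq_len: int) -> List[float]:
    # gamma ** i for each time step i (one column of gamma_mask)
    return [gamma ** i for i in range(seq_len)]


def target_before(rewards: List[List[float]], gamma: float) -> List[List[float]]:
    # Old behaviour: discounted sum over every time step (like torch.sum(..., 0)),
    # giving one value per sample, shaped (batch_size, 1).
    seq_len, batch_size = len(rewards), len(rewards[0])
    mask = discount_factors(gamma, seq_len)
    return [
        [sum(rewards[t][b] * mask[t] for t in range(seq_len))]
        for b in range(batch_size)
    ]


def target_after(
    rewards: List[List[float]], gamma: float, valid_reward_len: List[int]
) -> List[List[float]]:
    # New behaviour: running discounted sum along time (like torch.cumsum(..., dim=0)),
    # read at index valid_reward_len[b] - 1 for each sample b.
    seq_len, batch_size = len(rewards), len(rewards[0])
    mask = discount_factors(gamma, seq_len)
    out = []
    for b in range(batch_size):
        running = list(accumulate(rewards[t][b] * mask[t] for t in range(seq_len)))
        out.append([running[valid_reward_len[b] - 1]])
    return out


# Hypothetical batch: seq_len = 3, batch_size = 2; sample 0 has 2 valid steps.
rewards = [[1.0, 2.0], [1.0, 2.0], [9.0, 9.0]]
print(target_before(rewards, 0.5))         # [[3.75], [5.25]]
print(target_after(rewards, 0.5, [2, 3]))  # [[1.5], [5.25]]
```

In this made-up batch, sample 0 has a nonzero value at a step beyond its valid length; the old-style target includes it (3.75) while the new-style target stops at the last valid step (1.5). When every sample uses the full sequence, or the steps past the valid length are zero, the two computations agree.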